By Olga Beregovaya

When working with AI, ethical standards play an increasingly critical role in managing bias and preventing harm.

Implementing artificial intelligence (AI) provides loads of opportunities, from automating critical business processes to creating multilingual digital humans. However, it also presents several ethical concerns and issues.

A lack of ethical standards in the development of AI applications, and in the production or collection of the underlying data, can lead to decision bias within ranking algorithms, such as those that evaluate job applicants or approve or decline bank loan applications.

According to Gartner, by 2022, 85% of AI projects will deliver erroneous outcomes caused by bias in the model training data, algorithmic bias, or the demographics of those who developed the algorithms.1 And Forrester predicts that in 2022, the market for ethical AI solutions will see huge growth.2 So, the need for ethical standards in AI has never been more crucial.

This article addresses the issues and concerns regarding ethical AI, its future, and how it will impact global content transformation processes.

What Is Ethical AI?

Let’s start with a definition of ethical AI. In short, ethical AI is a set of core principles, values, and implementation techniques that companies need to consider and address when deploying AI technologies, in order to preserve human dignity and ensure no harm is caused to the end user.

These measures are normally centered on global laws, data protection regulations, employee safety regulations, and possible discrimination against traditionally marginalized communities including BIPOC, LGBTQIA+, women, and those with disabilities. They would also focus on the company’s own legal and medical liabilities.

Why Is Ethical AI So Important?

More businesses are implementing AI to create solutions that elevate their offerings to gain a competitive edge, which can be incredibly profitable.

However, if you try to scale up too quickly, particularly if you are a global company offering AI-enabled services across different languages, you could be putting your reputation on the line.

In addition, a lack of ethical standards in the development of AI technologies and capabilities can lead to bias within ranking algorithms (as in the job application example above) or within recommendation engines.

For example, in 2019 Optum was investigated over an algorithm that reportedly recommended that doctors and nurses pay closer attention to certain patients based on race.3

In the same year, Goldman Sachs was investigated over an algorithm that supposedly granted larger Apple Card credit limits to men than to women.4

Amazon’s Alexa, Apple’s Siri, and Google’s voice assistant have also come under fire for discriminating against women. All these tech giants used female voices for their voice assistants, which some argued reaffirmed the stereotype that women are subservient.5

And finally, one of the most notorious AI training data sourcing incidents was brought to us by Facebook. In 2018, the company granted the political firm Cambridge Analytica access to the personal data of over 50 million users.6

Because of these recent issues, according to MarketingWeek’s coverage of responsible AI, some of the world’s leading conglomerates like Microsoft, Twitter, Google, and Instagram are creating new algorithms that focus on the ethical side of AI.7

These new algorithms aim to intercept problems that could arise when collecting and using thousands of training data sources in their machine learning models.

Issues and Concerns in AI Ethics

Now that you understand how neglecting ethics in AI can harm a business, let’s take a look at three main pain points that can cause ethical problems when developing and implementing AI.

- AI Model Output Bias

Machine learning, the current foundation of AI, effectively trains neural network-based models on the underlying massive bodies of data.

And while this can offer many benefits, it can also lead to bias across the whole system in potentially detrimental and unexpected ways. For instance, AI model bias has been identified in photograph captioning and criminal sentencing.

Data bias refers to any frequent legacy patterns that prevail in the training data and subsequently skew the model towards certain phenomena. The patterns most discussed recently relate to social unfairness: treating certain groups differently based on their age, race, gender, sexual orientation, or other characteristics. Language models learn these patterns from the training data, and they are one of the causes of machine learning (or AI) output bias, whether in conversational AI, prediction, or recommendation.

Algorithmic bias in AI refers to repeatable and systematic errors produced by the machine learning algorithm, which can be caused by multiple factors (system design, the way the training data is labeled and used, lack of regular system audits, or the demographics of the members of the teams who created the application).

Both types of biases lead to the same result — the output can cause harmful, discriminatory effects on victims of these biases while reducing trust in government, corporations, and institutions that use the biased products.
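One way to make output bias concrete is to compare how often a model produces a favorable decision for each group. The sketch below is illustrative only: the group labels, the toy decisions, and the function names are hypothetical, and the 0.8 threshold borrows the "four-fifths" rule of thumb sometimes used in hiring audits rather than anything this article prescribes.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of favorable outcomes per group.

    `decisions` is an iterable of (group, approved) pairs, where
    `approved` is True when the model's output was favorable.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest one."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome, so no gap to report
    return min(rates.values()) / highest


# Hypothetical toy data: (group, was the loan approved?)
toy_decisions = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

rates = selection_rates(toy_decisions)
ratio = disparate_impact_ratio(rates)
print(rates)
print(round(ratio, 2))
if ratio < 0.8:  # assumed threshold; choose one appropriate to your context
    print("Selection rates differ enough to warrant a closer audit.")
```

A single ratio like this does not prove or rule out discrimination. It is a cheap signal that tells an audit team where to look first.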

- Discouragement and De-Skilling

Harvard Business Review underscored the importance of upholding emotional intelligence (EQ) when working with AI applications:

“Those that want to stay relevant in their professions will need to focus on skills and capabilities that artificial intelligence has trouble replicating — understanding, motivating, and interacting with human beings.”8

EQ is described as our natural ability to empathize and influence human emotions.

It doesn’t matter if they’re your own emotions or another person’s emotions. With high EQ, you should be able to identify, understand, and influence emotions, in a variety of languages, at a level that no best-in-class AI technology can match yet.

On the flip side, there are AI-powered tools that can help humans realign their moral compass and augment their EQ. For example, customer service AI platforms are capable of detecting nuances in a caller’s voice.

This enables the platform to provide insights and guidance to customer service representatives when connected to customers in real time. As such, agents can preemptively de-escalate frustration, capitalize on purchase intent, and generally be more in tune with the customer’s emotions.

- Technical Safety

Two of the biggest concerns with implementing AI are whether the applications and platforms will work as intended and whether users’ physical safety has been addressed, thoroughly tested, and confirmed. This can be challenging, especially for industries and companies that are heavily dependent on AI. For example, people have died in crashes involving semi-autonomous cars whose AI-enabled systems failed to make safe decisions.

That is why AI ethics is critical. Global standards must be put in place to regulate AI use and ensure technical safety across countries, industries, and applications. Otherwise, the outcomes could be detrimental or even catastrophic to the wellbeing of end users.

The Next Big Thing: Multilingual Metaverse

The “next big thing” that is taking the digital world by storm is the Metaverse. Creating the ethical Metaverse in one language is already tricky, but creating the multilingual Metaverse with ethical AI practices in place can be even more challenging.

But there are ways to overcome these barriers when you decide to delve into the multilingual Metaverse. Many approaches have already been implemented in the gaming space and in precursors of the Metaverse, such as Second Life.

How AI Ethics Impacts the Language Space

AI ethics plays a crucial role in handling the ethical implications of AI practices within the natural language space. Some ways AI ethics can help companies maintain moral practices across natural language understanding (NLU), natural language generation (NLG), and translation procedures include the following:

- Identify Social Bias

In 2016, Microsoft revealed its conversational content generation experiment, an AI-powered Twitter bot called “Tay.” On the same day, the program started tweeting offensive and racist messages.9 It set a dangerous precedent for future AI projects in the language space: continuing to develop multilingual content and conversational AI applications without the ability to identify and control cultural issues and social biases.

There are AI tools available that can identify and analyze moral issues within text in different languages and alert stakeholders promptly.

For instance, you can perform problematic content detection, which allows humans and training bots to find well-known biases within the linguistic expression of medically, socially, and politically sensitive questions.

The training data can include examples of gender and racial non-inclusiveness, suggested corrections for dangerously ambiguous language, and collected information on signals relevant to improving worker experience and quality evaluation processes.

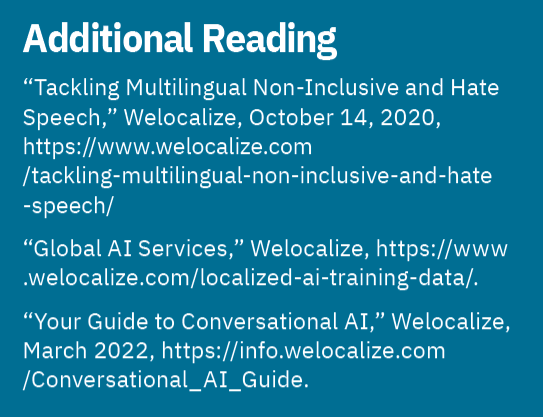

Many state-of-the-art machine translation (MT) engines can even handle gender-friendly translation issues and errors accurately. Welocalize utilizes different components of AI to detect non-inclusive language and offensive terms and their variants in language-specific engine training corpora. They then make replacement recommendations and provide synonym definitions for more accurate understanding.
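As a rough illustration of what term-level detection with replacement recommendations can look like, here is a minimal lexicon-based sketch. It is not Welocalize's method: the word list, the suggested replacements, and the `flag_terms` helper are all hypothetical, and a real system would rely on curated, language-specific resources and context-aware models rather than a fixed dictionary.

```python
import re

# Hypothetical lexicon: flagged term -> suggested replacement.
# A production system would use curated, language-specific resources.
SUGGESTIONS = {
    "chairman": "chairperson",
    "manpower": "workforce",
    "blacklist": "blocklist",
}

# One case-insensitive pattern; the optional "s" catches simple plural variants.
PATTERN = re.compile(
    r"\b(" + "|".join(map(re.escape, SUGGESTIONS)) + r")(s?)\b",
    re.IGNORECASE,
)


def flag_terms(text):
    """Return (matched text, character offset, suggestion) for each hit."""
    findings = []
    for match in PATTERN.finditer(text):
        base = match.group(1).lower()
        suggestion = SUGGESTIONS[base] + match.group(2).lower()
        findings.append((match.group(0), match.start(), suggestion))
    return findings


sample = "The chairman asked for more manpower to maintain the blacklists."
for term, offset, suggestion in flag_terms(sample):
    print(f"{offset:>3}: {term!r} -> consider {suggestion!r}")
```

Returning suggestions instead of rewriting the text automatically keeps a human reviewer in control of the final wording, which matters when a flagged term is legitimate in context.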

Generally, systemic bias is unlikely to turn into a major translation automation problem, mainly because in the majority of cases, the process includes the “human-in-the-loop,” and because of risk mitigation approaches.

- Monitoring Issues in User-Generated Social Media Content

User-generated content holds more influence than branded content over customer perceptions and purchase decisions. Some examples are:

- Customer reviews

- Social media comments

- Discussion threads in forums

- Social media posts

If you’re a translation buyer, you usually can’t control the upstream quality of user-generated social media content and online commentary. This is where AI comes in, since language service providers can use cutting-edge technology to automatically scan phrase or word signals for fake content, obscene language, or dangerous social bias.

You can use your user-generated content as constantly growing training corpora for AI applications that will identify biased behavior in your target markets, conduct foreign language sentiment analysis, and adapt future marketing messaging and social media content for better engagement.

References

- Gartner. 2018. “Gartner Says Nearly Half of CIOs are Planning to Deploy Artificial Intelligence.” https://www.gartner.com/en/newsroom/press-releases/2018-02-13-gartner-says-nearly-half-of-cios-are-planning-to-deploy-artificial-intelligence

- Forrester. 2021. “Predictions 2022: Successfully Riding The Next Wave of AI.” https://www.forrester.com/blogs/predictions-for-2022-successfully-riding-the-next-wave-of-ai/

- Evans, Melani, and Anna Wilde Mathews. 2019. “New York Regulator Probes UnitedHealth Algorithm for Racial Bias.” Wall Street Journal, October 26, 2019. https://www.wsj.com/articles/new-york-regulator-probes-unitedhealth-algorithm-for-racial-bias-11572087601.

- Telford, Taylor. 2019. “Apple Card Algorithm Sparks Gender Bias Allegations Against Goldman Sachs.” Washington Post, November 11, 2019. https://www.washingtonpost.com/business/2019/11/11/apple-card-algorithm-sparks-gender-bias-allegations-against-goldman-sachs/

- Welocalize. 2021. “Gender Representation + Bias in AI. Why Voice Assistants are Female.” https://www.welocalize.com/gender-representation-bias-in-ai-why-voice-assistants-are-female/

- Cadwalladr, Carole, and Emma Graham-Harrison. 2018. “Revealed: 50 Million Facebook Profiles Harvested for Cambridge Analytica in Major Data Breach.” The Guardian, March 17, 2018. https://www.theguardian.com/news/2018/mar/17/cambridge-analytica-facebook-influence-us-election

- Hammet, Ellen. 2019. “The Ethics of Algorithms and the Risks of Getting it Wrong.” MarketingWeek.com, Published May 2, 2019. https://www.marketingweek.com/ethics-of-algorithms/

- Beck, Megan, and Barry Libert. 2017. “The Rise of AI Makes Emotional Intelligence More Important.” Harvard Business Review, February 15, 2017. https://hbr.org/2017/02/the-rise-of-ai-makes-emotional-intelligence-more-important.

- Hunt, Elle. 2016. “Tay, Microsoft’s AI Chatbot, Gets a Crash Course in Racism from Twitter.” The Guardian, March 24, 2016. https://www.theguardian.com/technology/2016/mar/24/tay-microsofts-ai-chatbot-gets-a-crash-course-in-racism-from-twitter

Olga Beregovaya (olga.beregovaya@welocalize.com) is VP, AI Innovation at Welocalize, Inc. She is a seasoned professional with over 20 years of leadership experience in language technology, natural language processing (NLP), machine learning (ML), localization, and AI data generation and annotation. She is passionate about growing business through driving change and innovation, and an expert in building things from scratch and bringing them to measurable success. Olga has experience on both the buyer and the supplier side, giving her a unique perspective on establishing strategic buyer/supplier alliances and designing cost-effective Global Content Lifecycle Programs. She has built and managed global production, engineering, and development teams of up to 300 members specializing in MT,