Abstract

Purpose: Recent changes in health care in the US have made it important for health information to become easier to access, understand, and use. Making medical decisions without adequate information can lead to poor health outcomes. Providers are being incentivized to improve the quality and value of patient-centered communication and care. Technical communication practitioners can collaborate with interdisciplinary professionals to help these initiatives succeed.

Method: A patient education software application was developed with an interdisciplinary collaboration between a medical animation company, a surgery clinic, and an academic researcher who is also a technical communication practitioner. User-centered design principles were employed in the development of the application. Interviews were conducted to reveal insights about the application’s influence on the language participants used to discuss the procedure.

Results: Participants who used the software application to learn about the procedure were able to consistently recognize and recall more informational and procedural knowledge than participants who did not use the application.

Conclusion: Collaborations similar to this one can enhance the design, creation, development, and assessment of technical communication materials in patient education settings, improving the odds that patients will understand more about their health and be able to make better decisions. Such collaborations can also contribute valuable information to the technical communication curriculum. Best practices in technical communication can inform user-centered design, development, and assessment of educational materials in a variety of settings.

Keywords: multidisciplinary collaborations, patient education, user-centered design

Practitioner’s Takeaway:

- Design, creation, development, and assessment of patient education materials can be enhanced with collaborations between technical communication practitioners and industry partners.

- Using examples of interdisciplinary collaborations in the technical communication curriculum can help students develop much-needed workplace literacies.

- Interdisciplinary collaborations can increase awareness about what technical communication practitioners can do to improve the product development process in many fields.

Introduction

Health care in the US is a constantly evolving system due to high costs of insurance, treatment, and medications; high costs related to litigation; population expansion and an increasing number of aging people; improvements in technology; and growth in the medical professions (Conklin, 2002). Recently, scholars have suggested that, due to changes in the health care system in the US, it’s even more important to find ways for health care providers to implement health literacy practices that make health information easier to access, understand, and use (Berkman, Sheridan, Donahue, Halpern, & Crotty, 2011; Briglia, Perlman, & Weissman, 2015; Johnson, 2014; Koh et al., 2013; Meloncon & Frost, 2015; Rowell, 2015). The American Recovery and Reinvestment Act of 2009 and the Affordable Care Act of 2010 offer incentives for providers to improve access, accountability, quality, and value of patient-centered care (Kocher, Emanuel, & DeParle, 2010). Health communication is one of the objectives in the U.S. Department of Health and Human Services Healthy People 2020 initiative (USDHHS, 2014). Low health literacy in combination with poorly designed health education and informed consent materials causes difficulties in patient understanding and poor health outcomes (Berkman, Sheridan, Donahue, Halpern, & Crotty, 2011; Johnson, 2014). Patients often make medical decisions when they may not have adequate information (Clayman et al., 2013; Wee et al., 2009). Examining patient education materials from the perspective of the principles of clear communication and user-centered design is critical (Lazard & Mackert, 2015).

Using best practices in theories of teaching and learning and in workplace settings can help the design, creation, development, and assessment process (Johnson, 2014). Technical communication professionals can collaborate with interdisciplinary professionals and “help improve patient-centered language and practices across a multitude of media and document types, and to contribute to solving such problems as the health literacy crisis . . .” (Meloncon & Frost, 2015, p. 7).

In addition, using authentic content in the technical communication classroom can help students learn what Cargile Cook (2002) identified to be six layered literacies (basic, rhetorical, social, technological, ethical, and critical literacies) that are necessary to apply classroom knowledge to authentic settings. Cargile Cook suggests that students participate in “activities that promote collaborative team-building skills and technology use and critique” (2002, p. 8). She also notes that visual literacy must be included in every one of the six literacies. Basic literacies include being able to read, write clearly, and design appropriate documents. Rhetorical literacies add another layer of complexity—the writer also needs to be able to use analysis strategies to make appropriate choices for taking the audience, purpose, and organizational context into further consideration in the design and writing process. Social literacies include collaborations and working in teams. In addition to being able to use appropriate technologies for workplace projects, technological literacies include making appropriate choices for the design and development of the technology and assessing how user-centered those designs are. Ethical literacies permeate the layers and are demonstrated with choices that show an awareness of “legality, honesty, confidentiality, quality, fairness, and professionalism” (STC Ethical Principles for Technical Communicators, as cited in Cargile Cook, 2002). Critical literacies are demonstrated when the students can acknowledge and consider power structures and analyze possible improvements for reducing social and technological barriers. The collaboration between working practitioners and the classroom setting can create students who will be able to successfully navigate future roles.

Some of the tools and ideas used in teaching and learning can easily be transferred to other fields, including patient education (Johnson, 2014). Therefore, collaborations between interdisciplinary academic and practicing professionals can enhance the design, creation, development, and assessment of technical communication materials in patient education settings to help improve the odds that patients will understand more about their health and be able to make better decisions.

This paper presents an interdisciplinary collaboration between industry partners in a bariatric surgery patient education setting and an academic researcher who is also a practicing professional in the technical communication field. As a practicing technical communicator, I worked with a multimedia company and a medical provider to develop and revise a software application that would help the clinic’s patients learn about the pre- and post-surgical lifestyle changes and implications of the procedure before making a decision about whether or not to have the surgery (informed consent). As an academic researcher, I wanted to see what the patients learned from the educational materials that were created to teach them about bariatric surgery and if this improvement in the materials would make a difference in the language they used to discuss the procedure. I will present examples from the data and discuss how best practices can inform user-centered design, development, and assessment of patient education materials and how collaborations similar to this one can contribute valuable information to the technical communication curriculum.

First, it is important to understand a bit about the background of patient education, health literacy, and informed consent. Then I will explain some background of the specific participants in the study, bariatric surgery, obesity, and the intervention used. Then I will present the data and results and discuss implications and future directions.

Patient education, health literacy, and informed consent

According to the American Medical Association website (2007), in the US, over 90 million people have limited health literacy skills. This means that many people do not understand even the most basic health information. Patient education materials are usually written at a level much higher than the average person in the United States can understand. Low health literacy directly correlates with poor patient health outcomes (Berkman, Sheridan, Donahue, Halpern, & Crotty, 2011; Frankel, 1984). Improved health literacy has been shown to make a difference in both patient satisfaction and in patient health outcomes. So, health literacy is not only about receiving and comprehending health information but also about being able to engage with the material and use the information to make better health decisions. To understand more about health literacy, let’s start with looking at literacy in general. The word literacy has a complex set of ideas behind it. Many years ago, James Gee (1989) explained literacy by, first, defining the important difference between acquisition and learning. This distinction between acquisition and learning will provide the foundation for the definition of literacy I use in this paper:

Acquisition is a process of acquiring something subconsciously by exposure to models and a process of trial and error, without formal teaching. It happens in natural settings that are meaningful and functional. This is how most people come to control their first language. Learning, on the other hand, is a process that involves conscious knowledge gained through teaching, though not necessarily from someone officially designated a teacher. This teaching involves explanation and analysis, that is, breaking down the thing to be learned into its analytic parts. It inherently involves attaining some degree of meta-knowledge about the matter. (1989, p. 20)

According to Gee, we acquire our first, primary language, or our L1, orally and mostly subconsciously, through enculturation, or being surrounded by others who use the same L1 conventions and picking them up naturally. Gee calls this our “primary discourse” (p. 22). Literacy, on the other hand, is learned, not acquired. According to Gee, “literacy is control of secondary uses of language (i.e., uses of language in secondary discourses)” (p. 23). He explains that there are many different types of literacy, and that in order to “control” these secondary uses of language, the learner must also have “some degree of being able to ‘use,’ or to ‘function’ with” that language (p. 23).

Health literacy has been defined as the ability not only to read and understand health information but to transfer that knowledge and “act on it”—to use it to make informed decisions (NIH, 2006). Therefore, being able to function with the complex language of health information can be considered a secondary literacy.

Anyone who has tried to teach someone something, whether it be by training, academic coursework, or workplace knowledge, knows that it is difficult to measure learning. Often, the only evidence that learning may have taken place is the language learners can produce by the end of the educational process. Sociocultural theory, based on the research and theories of Russian psychologist and semiotician Lev Vygotsky, is a useful framework to analyze second language acquisition (SLA) research (Lantolf, 2000). Vygotsky’s research included the idea that both social and cultural contexts influence development (Wertsch, 1985). He also recognized that learning complex scientific terminology is similar to learning a new language. Many scholars have expanded on his work more recently. One area of Vygotsky’s work that has been used in SLA is that of mediation. Mediation occurs when someone (or in this case something, a tool) intervenes and aids the process of connecting new information to already existing information. When learners interact with a mediator (which could be a teacher, a peer, language, or a tool), gain new information, and connect it to their current knowledge, they can perform more complex mental tasks than they could have performed previously (Vygotsky, 1986). Eventually, that knowledge can be transferred to other tasks so they are able to independently do what they could previously do only with the mediator (Vygotsky, 1978, 1986). This knowledge transfer is what the medical professionals wanted the patients to be able to do.

The medical professionals wanted the patients not only to learn about the pre- and post-surgical lifestyle changes and implications of the surgery before they made the decision about whether or not to consent to it but also to connect this new knowledge to their current knowledge and eventually be able to transfer this health information to their own contexts and lifestyles. The idea of a computer as a mediator is not new (Salomon, Perkins, & Globerson, 1991), so in this study, I hoped to see whether the software application affected how the patients used language to demonstrate their learning.

Medical professionals have been revising written materials and decision aids for many years, and researchers have been looking at these enhanced patient education materials. Improvements have included making the materials clearer and more concise, adding visuals and videos, and creating multimedia materials, yet studies show inconsistent results in patient understanding regardless of the types of educational materials used (see, for example, Flory & Emanuel, 2004). In addition, principles of visual design theory are important to help create a positive user experience that promotes active learning (Bellwoar, 2012; Lazard & Mackert, 2015). Some studies have looked at patient satisfaction with the education process (Eggers et al., 2007), quality of online information (Willerton, 2008), patient anxiety levels (Eggers et al., 2007; Wright, 2012), and recall of information in multiple choice tests (Bader & Strickman-Stein, 2003), but few studies have looked at surgical consent forms since Grunder (1980), and few have attempted to measure what patients learn by looking at interview discourse.

Often, the focus of improving educational materials is on the readability of those materials (Windle, 2008), which is important because of the specialized language in the medical field (Wright, 2003; 2012). However, simplifying the language of the information is often not enough to help patients (Jenson, 2012, as cited in Lazard & Mackert, 2015). The materials must be created and developed with user-centered design principles in mind as well (Lazard & Mackert, 2015). Even if medical professionals use the Flesch-Kincaid, SMOG, or Fry readability formulas, the educational materials may not teach patients enough to make an informed decision. Anyone who has had even a simple surgical procedure likely realizes that informed consent documents often still do not “inform” patients clearly. These documents are frequently still written in a small font on a long form with legal terminology and medical jargon and are signed right before the procedure for liability reasons, not for educational purposes. Even if medical professionals allow time for questions, not all patients know what to ask. Another important aspect of this collaboration is the continued improvement of the patient education and informed consent process.
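To make concrete what a readability formula measures, and why a good score alone cannot guarantee understanding, the following is a minimal sketch of the Flesch-Kincaid grade level calculation. The function names and the vowel-group syllable counter are illustrative assumptions, not the tools used in this project; published readability tools count syllables more carefully and will return somewhat different scores.

```python
import re


def count_syllables(word: str) -> int:
    """Rough syllable estimate (an assumption): count groups of consecutive vowels."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # Treat a trailing "e" as silent (as in "take"), but keep "-le" endings.
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Flesch-Kincaid grade level: 0.39*(words/sentence) + 11.8*(syllables/word) - 15.59."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59


# Hypothetical sample sentences, written for this sketch only.
plain = "All food must pass through a small opening. Chew well and take small bites."
jargon = ("The gastrojejunal anastomosis necessitates thorough mastication "
          "of all ingested nutrients postoperatively.")

print(round(flesch_kincaid_grade(plain), 1))
print(round(flesch_kincaid_grade(jargon), 1))
```

The formula rewards only shorter sentences and shorter words. It says nothing about layout, visuals, or whether a reader can act on the information, which is why readability scores need to be paired with user-centered design.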

Bariatric surgery and obesity

Bariatric surgery is one treatment for severe obesity that involves permanent lifestyle changes that, if not understood thoroughly, could have devastating impacts on the patient’s future (Eggers et al., 2007). Obesity is a difficult term to define because the definition varies. The most commonly used definition comes from the dominant discourse in the medical field and is based on BMI, or Body Mass Index. According to this view, a BMI over 30 indicates obesity; if it is over 35, it indicates severe obesity. The dominant discourse also states that patients who are categorized as being severely obese and who also have other health issues (such as high blood pressure, heart disease, etc.) are at a higher risk for death due to those comorbidities. The participants in this study all had a BMI over 35 and were all categorized by their physicians as being severely obese. They all had other comorbidities and, thus, they were learning about bariatric surgery as a weight-loss assistance option. This procedure reduces the effective size of the stomach to decrease feelings of hunger. If patients are considering this type of treatment option, before making the decision, they need to understand the critical lifestyle changes and pre- and post-surgical requirements as well as what happens in the actual procedure.
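For readers unfamiliar with the measure, BMI is a person's weight in kilograms divided by the square of their height in meters. The sketch below applies only the two cutoffs mentioned above (over 30 and over 35). The function names and the sample weights and heights are hypothetical, and the sketch illustrates the arithmetic only; it is not a clinical tool.

```python
def bmi(weight_kg: float, height_m: float) -> float:
    """Body Mass Index: weight in kilograms divided by height in meters squared."""
    if weight_kg <= 0 or height_m <= 0:
        raise ValueError("weight and height must be positive")
    return weight_kg / height_m ** 2


def classify(bmi_value: float) -> str:
    """Label a BMI using only the two cutoffs given in the text."""
    if bmi_value > 35:
        return "severe obesity"
    if bmi_value > 30:
        return "obesity"
    return "below the obesity cutoff"


# Hypothetical values, not participant data.
print(classify(bmi(100, 1.70)))
print(classify(bmi(110, 1.70)))
```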

This clinic was committed to making sure their patients understood all of the implications of the surgery as part of their education process. They wanted the patients to be better informed and to learn the specific, targeted information needed to make appropriate health decisions, so they wanted to see if the software application made a difference. The objective of this collaboration was both to improve the application and to assess data from two groups: participants who used the multimedia software application to learn about the procedure they were considering (intervention group) and participants who did not use that software program (control group). The control group learned about the surgery through the clinic’s usual methods: They met with medical professionals and received written information. Interviews were conducted with both groups to see if there was a difference in the language that participants produced from pretest to posttest.

Development process

Patients who elect to have bariatric surgery must be aware of the lifestyle changes they will need to make to avoid complications. The hope with the educational software application was that those who used it would eventually be better able to understand these lifestyle changes well enough to be able to transfer the information to their own lifestyles and reduce the potential for complications. The application was initially developed by the animation company in conjunction with a board of surgeons and nurse educators from the clinic. It was designed to target a variety of learning styles by using video, audio, text, animations, and knowledge checks.

The animation company was a small business that did not have a full-time technical communicator on staff and did not have a formal process for documentation or usability testing. They contracted me as a technical writing practitioner to do some editing on the language elements of the product before the product launched. They wanted a quick, final edit of the text in the knowledge checks, the informed consent checks (an optional feature), and the captions and labels in the application. They also wanted me to do a usability check to make sure everything was functional. As I got involved in this process, it became clear that there were other design and functionality improvements that could be made, so I suggested we do a usability test with real users to use the principles of user-centered design before doing final edits. The animation company was on a time schedule for the product launch, and all of the changes had to be approved by the board of surgeons and nurse educators, so after my initial assessment, we decided to do a small usability test to find only the most critical issues before the first launch and to do another, more in-depth test, before the next release.

Revisions were necessary to make the application more appropriate for the targeted audience and purpose before we could begin the first round of usability testing. The initial product was designed to act as both a sales tool for promoting the company to other clinics and as a functional product for clinics that had already chosen to use it for patient education. The first change we made was to create two separate logins so the patient login would go to the functional application and the clinic login would go to a completely separate promotional site that included samples from the application and other information appropriate to the clinics. I suggested revisions to the promotional site, including changing terminology to be more appropriate for those users (primarily doctors who owned their own practices or ran a clinic) and avoiding the technical jargon of software development.

As for the application itself, in addition to basic functionality issues, I suggested labeling sections in each chapter to break the information into smaller chunks, bringing the language to a more readable level (Flesch-Kincaid) by defining terms and abbreviations, reducing the complex medical terminology, reducing the length of the sentences, changing all text to match the audio, adding captions to sections where there were none, adding white space to reduce the amount of text presented at one time, using more content-related functional visuals, including more participatory elements and knowledge checks, and making final edits for parallelism, grammar, spelling, and mechanics. Some items needed to be revised for a later release, such as re-recording the audio in one section that lasted nearly 15 minutes—the revision would break the content up into shorter segments.

For the usability test, we used a single-room, observational setup for functional (not extensive) validation testing since the product was so close to release (Rubin & Chisnell, 2008). We also included a small section for users’ explicit opinions of the application. I created the objectives and a task list and moderated the tests with one of the other animation company employees who was involved in the development of the application. Once testing was complete, I wrote a report suggesting improvements based on the results. The employee and I also collaborated to create documentation of the entire process so it could be duplicated (and improved) for future releases and/or other applications they were working on. Six users (two males, four females) participated in the test. During the test, I initiated the tasks, observed the users completing the tasks, and took notes. The other employee provided user technical support and also observed and took notes of any technical difficulties. Tasks included starting the application, pausing the videos, adjusting the volume, replaying sections, navigating menus, participating in knowledge checks, and answering five short questions about their perceptions of the application, how easy they found it, what they liked and didn’t like about it, and any suggestions for improvement.

Recommended changes included adding pop-up feedback statements (and auditory information) to notify users if they answered correctly or incorrectly, error messages when the application experienced lag-time or buffering issues and instructions to correct the issues, instructions about how to re-watch sections, subsections in each chapter, and links in the navigation so users could easily return to a specific place if they had to go back and find something in a previous section. Since the application was Web-based, the company was able to make changes and corrections quickly. When the revised version of the software was released, I began the small study to see what the differences were between participants who used the software application and those who used the clinic’s traditional methods to learn about the surgical procedure.

Methodology

The hypothesis was that the intervention group (those who used the multimedia software application) would produce “better” answers than the control group, who did not use the software. The assessment tools were created by using the content in the multimedia software application, including the knowledge check and informed consent questions that the medical professionals chose. I made sure that there were questions that targeted several types of knowledge (Marzano & Kendall, 2007). An improved or “better” answer would demonstrate the ability to recognize, recall, and use the complex medical information about bariatric surgery as evidenced by more technical terminology (vocabulary), more clearly explained examples, and more specific details about the information related to the surgery and necessary lifestyle changes.

After IRB approval, nurses in the clinic initially recruited interested patients. If the patients expressed interest, I would then share the IRB-approved materials with them to be sure they were still interested. The clinic allowed me to conduct the testing before and after the patients’ regularly scheduled appointment times. Twenty-nine participants completed this study, eighteen of whom were in the intervention group and eleven of whom were in the control group. Demographics were not controlled for—this was a small sample of current patients from the clinic who were interested in the procedure. The nurses randomly assigned the patients to the control group or the intervention group. I conducted both pre- and post-interviews in addition to multiple-choice and fill-in-the-blank assessments. Due to page limitations, this paper will look only at the interview data.

After transcribing all of the interview discourse, I developed a coding system to create a baseline for the pretest answers so I could judge the posttest answers in comparison. For the pretest, I used 0, +, and *: 0 indicated an incorrect answer; + indicated a partially correct answer; and * indicated a completely correct answer, according to the answers the medical professionals said they were looking for. For the posttest, I used =, +, and -, where = indicated an unchanged answer (not improved, not worse), + indicated a better answer, and - indicated a worse answer. Criteria for a better answer included more concise vocabulary or technical terminology, more (quantity) or more clearly explained examples, and/or more specific details about the information related to the surgery and the necessary lifestyle changes. I then selected the questions that had the largest number of improved answers according to the above criteria and examined the language used to see if there were any indications that the software application may have mediated the learning process. The next section will discuss those findings.
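The tallying step of this coding scheme can be sketched in a few lines. The example below assumes the posttest codes have already been assigned by hand and stored per question. The function names, question labels, and codes are hypothetical and do not come from the study data.

```python
from collections import Counter

# Posttest codes: "=" unchanged, "+" better, "-" worse.
POSTTEST_CODES = {"=", "+", "-"}


def percent_improved(codes):
    """Return the percentage of posttest answers coded '+' (better)."""
    if not codes:
        raise ValueError("no codes to summarize")
    unknown = set(codes) - POSTTEST_CODES
    if unknown:
        raise ValueError(f"unrecognized codes: {sorted(unknown)}")
    return 100 * Counter(codes)["+"] / len(codes)


def rank_questions(coded):
    """Order question labels by their number of '+' codes, most improved first."""
    return sorted(coded, key=lambda q: Counter(coded[q])["+"], reverse=True)


# Hypothetical codes for four participants on three questions.
coded_answers = {
    "Q1": ["+", "=", "+", "+"],
    "Q2": ["=", "=", "-", "+"],
    "Q3": ["+", "+", "=", "="],
}

for question in rank_questions(coded_answers):
    print(question, percent_improved(coded_answers[question]))
```

Only the counting is automated here. Deciding whether an answer is better, unchanged, or worse remains a qualitative judgment made against the criteria above.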

Results and Discussion

A total of 56 participants began the study, 22 in the intervention group and 34 in the control group. It was my hope to have 20 participants in each group complete all three pretests and posttests of the study; however, it was not possible to get 20 in each group for a variety of reasons that I had no control over in the clinic. Participants sometimes had to leave for appointments with their doctors, for lunch, or for other reasons during testing. Of the 22 original intervention group participants, only 18 completed the pretest and posttest interviews. Of the 34 original control group participants, only 11 were able to complete the pretest and posttest interviews. Statistical analyses were computed to help account for these inequalities in sample sizes. The interview data presented here are from the participants who were able to complete both the pretest interview and the posttest interview.

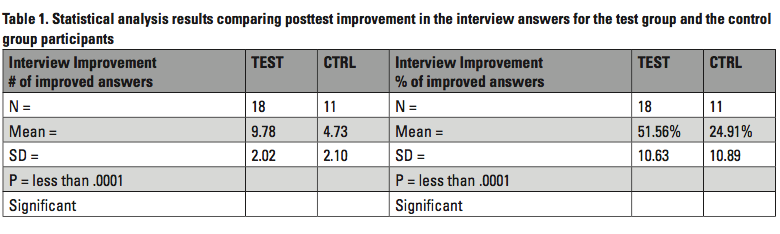

First, I looked at the data by the percentage of participants who improved their answers and by how many questions had improved answers in the posttest. As shown in Table 1, approximately fifty-two percent (52%) of the participants in the intervention group had improved answers in the posttest. Approximately twenty-five percent (25%) of the participants in the control group had improved answers in the posttest. A two-tailed, independent samples t-test at the 95% confidence level showed statistically significant improvement in the intervention group over the control group (p<.0001). Results were computed both with and without Welch’s correction for the slightly unequal variance (standard deviation squared).
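For readers who want to see what Welch’s correction changes, the sketch below computes the t statistic and the Welch-Satterthwaite degrees of freedom for two independent samples with unequal sizes and variances. It assumes each participant is summarized by a single score, such as a count of improved answers; the text above does not specify the unit of analysis, and the scores shown are hypothetical rather than study data.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(sample_a, sample_b):
    """Return (t, df) for Welch's unequal-variance t-test on two independent samples."""
    n_a, n_b = len(sample_a), len(sample_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each sample needs at least two observations")
    # Each group's contribution to the squared standard error: sample variance / n.
    se2_a = variance(sample_a) / n_a
    se2_b = variance(sample_b) / n_b
    t = (mean(sample_a) - mean(sample_b)) / sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation of the degrees of freedom.
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (n_a - 1) + se2_b ** 2 / (n_b - 1))
    return t, df


# Hypothetical per-participant scores, for illustration only.
intervention_scores = [3, 5, 4, 6, 5]
control_scores = [2, 3, 2, 4]

t_stat, deg_freedom = welch_t(intervention_scores, control_scores)
print(round(t_stat, 2), round(deg_freedom, 2))
```

Turning the statistic into a two-tailed p-value requires the t distribution, which the Python standard library does not provide. In SciPy, `scipy.stats.ttest_ind(a, b, equal_var=False)` performs the same test and reports the p-value.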

More interesting than the numbers, however, was the language used by the participants. That is best demonstrated by some examples of informational and procedural knowledge.

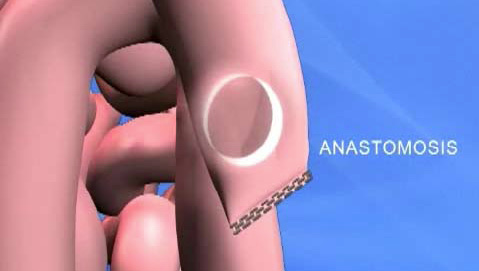

Informational knowledge—vocabulary/definitions: What is an anastomosis?

Informational knowledge can occur in the form of vocabulary and definitions (Marzano & Kendall, 2007). The question that asked for knowledge of vocabulary and a definition and showed the highest number of improved answers in the intervention group was “What is an anastomosis?” The medical professionals want the patients to understand this question because of the critical implications. An anastomosis is the connection between two organs, and in this case, that is where their “new” stomach is joined with a connection the size of a dime. All food must pass through that small connection. This requires chewing thoroughly and swallowing only small amounts of food after the procedure for the rest of their lives to avoid complications.

For this particular question in the posttest, 67% of the intervention group participants improved their answers. In the pretest, no one knew what an anastomosis was. It’s not surprising that they learned what it was; it was surprising, however, that only 9% of the control group had any idea what an anastomosis was by the posttest. That difference in improvement led me to believe that the software application may have been more effective at getting the information across. Two samples of the posttest answers are shown below. The pretest answers for these were both “I don’t know” (or equivalent).

- Example 1: That’s that uh, round circle they put on your other thingy, the, uh, asimosity? Or whatever it is? It’s uh, couple inches down from the, soph-jes, where they do that, cut, and then on the stem they make a circle, you know what I’m talking about?

- Example 2: That’s gonna be my little dime-size thing that my food’s gonna pass through.

In Example 1, the idea of the anastomosis is clearly contextualized by the video in the computer software application where a circle is drawn and explained in relationship to the connection of two organs (see Figure 1).

The answer in Example 2 demonstrates how the software may have given the participant the beginnings of the schema needed to understand the idea of an anastomosis in relationship to any necessary actions. Neither answer is a perfect textbook definition, but both participants clearly present an understanding of what the term means, which is an improvement over the pretest, where neither of them knew.

Example 1 demonstrates a preliminary understanding of the definition of the term. Example 2 demonstrates a bit more knowledge, that the food will pass through a connection the size of a dime, which will be helpful after the surgery to reduce complications caused by not understanding the size of the connection. It may not be important for the participants to remember the actual term as long as they understand the implications of the process and can explain that in plain language; however, if the medical professionals want the patients to know the particular term, it may be important to introduce the term multiple times so the patients can develop the necessary schema to remember the jargon.

These examples indicate that the software application could have acted as a mediator to help the participants understand the meaning of anastomosis enough to produce these improved answers. Again, it’s not important to know everything about it at this stage. Learning doesn’t happen all at once—but this gives them some information that they can continue to build on.

Procedural knowledge: Describe your surgery to me. What exactly is the surgeon going to do?

In addition to informational knowledge, procedural knowledge is also important, has different forms and functions, and is much more complex than informational knowledge like definitions (Marzano & Kendall, 2007). The participants in this study needed to understand the entire procedure they were considering because of the long-term, often permanent nature of their decision, so we included the question that asked them to describe exactly what the surgeon would do. In this question, 83% of the intervention group improved their answers in the posttest, while 55% of the control group participants improved their answers. Most of the participants had at least some information about the procedure in the pretest. Again, the interesting elements can be found in the language of the intervention group. Below are two examples of both the pretest answer and the posttest answer for this question (from intervention group participants).

- Example 3 PRE: He’s gonna do a laposcopy, disconnect part of my stomach and reroute it to uh, another part.

- Example 3 POST: He’s gonna do the laposcopy procedure, put in the sutures, put a camera in, use the clippers, cut off below the esophagus, make the stomach small, reattach it, er, cut off another part of the small intestine and reattach it to thee, stomach.

In addition to using some new vocabulary, the participant in Example 3 produced a series of procedural steps in the correct order, which is also the order in which they appeared in the software application, indicating that the intervention may have had some influence on the learning process. Another example demonstrates this as well:

- Example 4 PRE: Well, he’s going to cut part of the stomach off to make a pouch, and well, reroute it from the small and large intestines. As far as I know.

- Example 4 POST: Well, he’s going to uh, cut part of your stomach off, and the juju, the juju, jujunum is going to be bypassed up to the anamosis, and then it’s gonna go through part of it, it’ll bypass your uh, dadelay, da, the “d” one, and then, uh, that’s when, cause most of your nutrients are stored in the jejulium, and then the, then it goes into the ileum and then down, so it’s actually goes to a, since it’s smaller now, it can bypass it’s the other part that goes into that to grab the nutrients it needs.

Even though this participant could not remember exactly what some of the words were, it is clear that the meaning of the new vocabulary is being developed. Both of the improved answers demonstrate technical vocabulary and procedural information.

The participant in Example 4 struggles with technical terms: “anamosis” for anastomosis, “the ‘d’ one” for duodenum, and “juju, jujunum…jejulium” for jejunum. However, the struggle is more with the pronunciation and memory of the word itself, not with the meaning, as indicated by the last part of that answer. This indicates that the participant is “actively engaged” in the reception of the content (Bellwoar, 2012, p. 325). The participant’s description of what happens is accurate, the procedural information from the video was produced in order, and more specific details were produced in the posttest.

Conclusion

Participants in this study who used the multimedia software application to learn about their procedure were able to more consistently produce better answers about their surgery and the pre- and post-surgical lifestyle requirements than those in the control group. A better answer consisted of recognizing and recalling more informational and procedural knowledge by producing better definitions, examples, vocabulary, and more specific details in the posttest answers.

These findings could indicate that collaborations that improve educational materials may be beneficial for patient education and informed consent, and that the targeted improvement in the training materials may have helped mediate the complex information so that the participants were better able to demonstrate what they were in the process of learning. In addition, these results can help inform the technical communication curriculum.

Classroom projects created in partnership with industry, with engaging content and current issues in the field, may help students better learn how to apply rhetorical strategies to workplace contexts. In addition, using this type of experiential learning content in the classroom could help students consider layered literacies (Cargile Cook, 2002) in authentic settings. Showing students examples of how these types of collaborations can work may help them begin to develop several of these literacies: basic and rhetorical literacies as they see what types of choices were made and why; social literacies as they analyze the successful elements of the teamwork and collaboration; technological literacies as they analyze how the users were able to work with and learn from the technology and critique how the design could have been further improved; and ethical and critical literacies as they analyze the choices made and the improvements that might reduce social and technological barriers for users.

Because of the very small size and scope of the study, much more work would need to be done to confirm and generalize the results. Any patient education materials that will be used with the hope of teaching patients enough to help them with decisions about their health care options must be designed and tested to ensure that they are going to be readable, usable, and understood. Ideally, an iterative testing methodology should be implemented early in the development process to ensure user-centered design.

Although this was a small study of only one researcher’s interdisciplinary collaboration, it shows that it is possible for academic researchers and practicing professionals in technical communication to inform the patient education process by collaborating with medical and technical partners to create better educational materials and assessments. Similar collaborations could be implemented in other disciplines as well.

References

Bader, J. & Strickman-Stein, N. (2003). Evaluation of new multimedia formats for cancer communications. Journal of Medical Internet Research, 5(3), e16. doi: 10.2196/jmir.5.3.e16

Bellwoar, H. (2012). Everyday matters: Reception and use as productive design of health-related texts. Technical Communication Quarterly, 21, 325–345. doi: 10.1080/10572252.2012.702533

Berkman, N.D., Sheridan, S.L., Donahue, K.E., Halpern, D.J., & Crotty, K. (2011). Low health literacy and health outcomes: An updated systematic review. Annals of Internal Medicine, 155(2), 97–107.

Briglia, E., Perlman, M., & Weissman, M. (2015). Integrating health literacy into organizational structure. Physician Leadership Journal, 2(2), 66–69.

Cargile Cook, K. (2002). Layered literacies: A theoretical frame for technical communication pedagogy. Technical Communication Quarterly, 11, 5–29. doi: 10.1207/s15427625tcq1101_1

Clayman, M., Holmes-Rovner, M., Kelly-Blake, K., McCaffery, K., Nutbeam, D., Rovner, D., Sheridan, S., Smith, S., & Wolf, M. (2013). Addressing health literacy in patient decision aids. BMC Medical Informatics & Decision Making, 13(2), 1–14.

Conklin, T. (2002). Health care in the United States: An evolving system. Michigan Family Review, 7(1), 5–17.

Flory, J. & Emanuel, E. (2004). Interventions to improve research participants’ understanding in informed consent for research: A systematic review. Journal of the American Medical Association, 292, 1593–1601. doi: 10.1001/jama.292.13.1593

Gee, J.P. (1989). What is literacy? The Journal of Education, 171(1), 18–25.

Grunder, T.M. (1980). On the readability of surgical forms. New England Journal of Medicine, 302, 900–902.

Johnson, A. (2014). Health literacy, does it make a difference? Australian Journal of Advanced Nursing, 31(3), 39–45.

Kocher, R., Emanuel, E.J., & DeParle, N.M. (2010). The Affordable Care Act and the future of clinical medicine: The opportunities and challenges. Annals of Internal Medicine, 153, 536–539.

Koh, H., Baur, C., Brach, C., Harris, L., & Rowden, J. (2013). Toward a systems approach to health literacy research. Journal of Health Communication: International Perspectives, 18(1), 1–5. doi: 10.1080/10810730.2013.759029

Lantolf, J. P. (Ed.). (2000). Sociocultural theory and second language learning. Oxford: Oxford University Press.

Lazard, A.J. & Mackert, M.S. (2015). E-health first impressions and visual evaluations: Key design principles for attention and appeal. Communication Design Quarterly, 3(4), 25–34.

Marzano, R. J., & Kendall, J. S. (2007). The new taxonomy of educational objectives (2nd ed.). Thousand Oaks, CA: Corwin Press.

Meloncon, L. & Frost, E. (2015, August). Charting an emerging field: The rhetorics of health and medicine and its importance in communication design. Communication Design Quarterly, 3(4), 7–14.

Rowell, L. (2015). Step into patients’ shoes: How to understand and apply a patient-centered perspective. Benefits Quarterly, 31(4), 33–37.

Rubin, J. & Chisnell, D. (2008). Handbook of usability testing (2nd ed.). Indianapolis, IN: Wiley.

Salomon, G., Perkins, D. N., & Globerson, T. (1991). Partners in cognition: Extending human intelligence with intelligent technologies. Educational Researcher, 20(3), 2–9.

U.S. Department of Health and Human Services (2014). HealthyPeople 2020. Washington, D.C.: U.S. Department of Health and Human Services, Office of Disease Prevention and Health Promotion. Retrieved from https://www.healthypeople.gov/2020

Vygotsky, L. (1978). Mind in society. Cambridge, MA: Harvard University Press.

Vygotsky, L. (1986). Thought and language. Cambridge, MA: The MIT Press.

Wee, C. C., Pratt, J. S., Fanelli, R., Samour, P. Q., Trainor, L. S., & Paasche-Orlow, M. K. (2009). Best practice updates for informed consent and patient education in weight loss surgery. Obesity, 17(5), 885–888. doi: 10.1038/oby.2008.567

Willerton, R. (2008). Writing toward readers’ better health: A case study examining the development of online health information. Technical Communication Quarterly, 17, 311–334. doi: 10.1080/10572250802100428

Windle, P. (2008). Understanding informed consent: Significant and valuable information. Journal of PeriAnesthesia Nursing, 23(6), 430–433.

Wright, D. (2003). Criteria and ingredients for successful patient information. Journal of Audiovisual Media in Medicine, 26(1), 6–10.

Wright, D. (2012). Redesigning informed consent tools for specific research. Technical Communication Quarterly, 21(2), 145–167. doi: 10.1080/10572252.2012.641432

About the Author

Corinne Renguette is an assistant professor and the director of the technical communication program in the Purdue School of Engineering and Technology at Indiana University-Purdue University Indianapolis. She has worked as a technical writer and editor, and she develops and teaches courses and training programs in written and oral technical communication, intercultural technical communication, English for specific purposes, and applied linguistics. Her research focuses on the assessment of educational materials, especially those that use innovative pedagogies and technologies, in both academic and workplace environments. She is available at crenguet@iupui.edu.

Manuscript received 2 May 2016; revised 15 July 2016; accepted 10 August 2016.