By Candice A. Welhausen and Kristin Marie Bivens

ABSTRACT

Purpose: This study uses a qualitative content analysis approach to analyze existing user-generated content (UGC) for a civilian first responder mobile health or mHealth app, PulsePoint Respond. We argue that online review comments for these apps, the type of UGC we analyzed, can provide a rich source of untapped data for practitioners working in UX. We offer a UGC commenting heuristic that can help practitioners more effectively identify users’ functional and productive usability concerns.

Method: We analyzed review comments (n=599) about PulsePoint Respond posted on the iOS platform between September 2016 and November 2019. Using open card sorting for data reduction, we eliminated 307 comments. We then created preliminary codes for the remaining 292 comments and used affinity diagramming to discuss, define, and finalize categories in order to analyze the final sample.

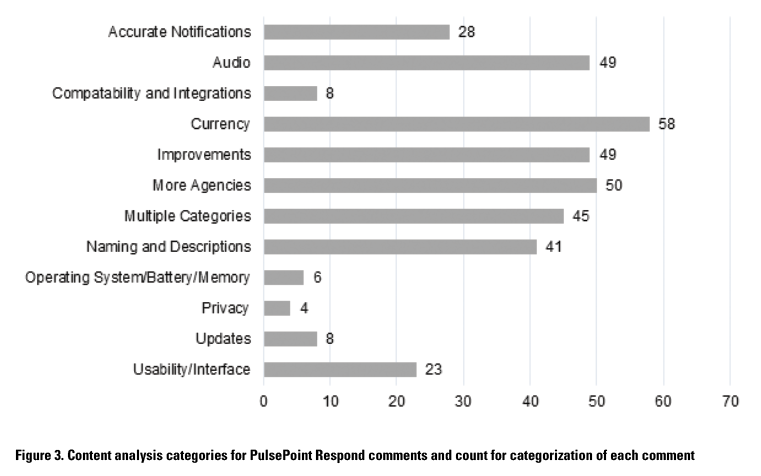

Results: We created a total of 14 categories, including “Unusable” or not actionable comments (307) and comments that were classified as “Multiple Categories” (45). The remaining 12 categories included Accurate Notifications (28), Audio (49), Compatibility and Integrations (8), Currency (58), Improvements (49), Location (27), More Agencies (50), Naming and Descriptions (41), Operating System/Battery/Memory (6), Privacy (4), Updates (8), and Usability/Interface (23).

Conclusion: We found that functional usability considerations remain important for users. However, many users also commented on the limitations of particular functionalities and/or described actions they sought to perform that were not supported by the app. Drawing from our analysis, we propose a UGC commenting heuristic that can help practitioners more effectively identify users’ functional and productive usability concerns.

Keywords: mHealth Apps; UGC; Content Analysis; UGC commenting heuristic; UX

Practitioner’s Takeaway

- Practitioners can draw from existing online review comments posted for mHealth apps to learn more about users and their functional and productive usability concerns.

- Practitioners can use the UGC commenting heuristic offered in this article to assist and/or prompt users to provide more actionable and valuable information in their online reviews that can be extracted and used to inform practitioners’ UX work with mHealth apps.

INTRODUCTION

Since their inception, mobile health applications—often referred to as mHealth apps—have been touted for their potential to improve health outcomes (Fiordelli, Diviani, & Schulz, 2013). As these tools have continued to proliferate (see Pohl, 2019), they have frequently been presented as enabling a culture of participatory health that can empower users to make more informed decisions by enabling particular kinds of health promotion and disease prevention behaviors (e.g., diet and exercise tracking) and/or assisting users in managing chronic illnesses and conditions. This intervention-driven emphasis has, in turn, prompted an extensive body of usability research on mHealth apps focused on improving the functionality of these applications (e.g., see Jake-Schoffman et al., 2017).

Ensuring that these apps work as intended—that is, that they are easy to use (see Mirel, 2004)—remains critically important. However, the emphasis on functional-level concerns in these studies may reflect the interests of app developers and creators, including healthcare providers and other subject matter experts, rather than users. Indeed, scholarship in the rhetoric of health and medicine has documented the ways that patients have modified some mHealth technologies to better meet their needs (Arduser, 2018; Bivens, Arduser, Welhausen, & Faris, 2018) and argued that some users of a crowd-sourced, flu-tracking program use this tool to make their own risk assessments (Welhausen, 2017).

Given our focus on audience in technical and professional communication (TPC), usability is a key area of interest (Redish & Barnum, 2011), and many practitioners are likely performing user experience (UX) work (Lauer & Brumberger, 2016). TPC-focused usability research has found that users often have their own goals and objectives when they use digital technologies, which may differ from what designers intended (Simmons & Zoetewey, 2012). In all likelihood, users of mHealth apps, too, engage in similar kinds of practices. However, this perspective has not been explored in TPC scholarship.

This article addresses this opening from a UX perspective, which Lauer and Brumberger (2016) state “[i]deally,. . . strives to accommodate how users appropriate information products and content in unanticipated ways and for their own purposes as well as how those products position users to act in the world by the way they are designed and the options they allow for” (p. 249). More specifically, we analyze the self-reported experiences, feedback, and perceptions of users of PulsePoint Respond (e.g., see Welhausen & Bivens, 2019), one of two civilian first responder apps developed by the PulsePoint Foundation, by conducting a content analysis of review comments about the app posted on the iOS platform.

Through our analysis of this user-generated content (UGC), we found that functional usability considerations remain important for users. More specifically, many review comments described specific problems users encountered while performing or attempting to perform certain tasks. Yet we also found that some reviews focused on the limitations of particular functionalities and/or described actions that the user wanted to perform but were not supported by the app. We argue that this finding emphasizes the need to better understand users’ “productive usability” (Simmons & Zoetewey, 2012) experiences with these tools and that analyzing online review comments can provide a rich source of untapped data in facilitating this effort. More specifically, the focus of this study aligns with Gallagher et al.’s (2020) claim that content analysis can help technical communicators better understand users and interactions between users in online environments.

In what follows, we first describe PulsePoint’s two apps, Respond and AED (Automated External Defibrillator), before situating our work within previous approaches to UGC in TPC and describing our methodology, including the norming process for our content analysis. We then present our analysis of the PulsePoint Respond comment sample and discuss its implications. Finally, we provide a UGC commenting heuristic that practitioners can apply in spaces where users leave comments, such as feedback forms or app download pages, to guide users’ commenting practices. We propose that practitioners can use our heuristic to prompt users to provide more substantive and actionable feedback in review comments, which can then be used to better identify users’ functional and productive usability concerns.

ABOUT PULSEPOINT

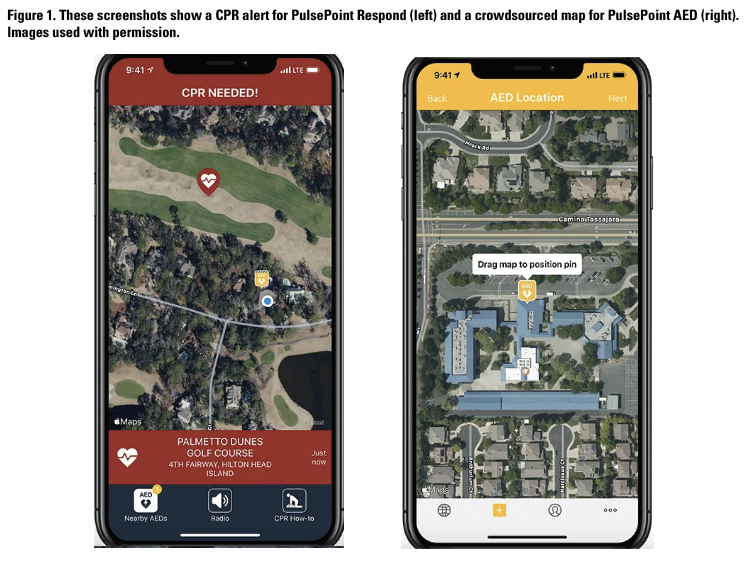

PulsePoint (PulsePoint.org) was created as a not-for-profit organization that released two location-aware apps in 2010 designed to work together to reduce deaths from sudden cardiac arrest (SCA): PulsePoint Respond and PulsePoint AED. PulsePoint Respond alerts users who are willing to perform cardiopulmonary resuscitation (CPR) when someone nearby (within one-fourth of a mile) is experiencing SCA. This app is integrated with local emergency services and sends a smartphone alert (triggered by a 911 call) to users who have registered to provide CPR when someone in their nearby area is experiencing SCA (see Figure 1; left). SCA kills 70-90% of people who experience it because frequently CPR or defibrillation is not administered in time to save the person’s life (Sudden Cardiac Arrest Foundation, 2019). Indeed, studies investigating volunteer-based networks of lay-trained CPR responders found that individuals who experience SCA and receive CPR from bystanders showed increases in survival (Hansen et al., 2015).

PulsePoint AED uses crowdsourcing to document the locations of AED machines (Figure 1), which users who respond to a CPR alert can access as needed. This app allows users to view AEDs in their area and to add these devices’ locations. This crowdsourced map information is then reviewed for accuracy and approval (i.e., vetted) before it is added to the AED app. Users contributing crowdsourced information about an AED also need to describe the AED’s location and provide a panned image of its location and immediate surroundings. Because PulsePoint AED’s functionalities differ from those of PulsePoint Respond, this article addresses only the latter.

TECHNICAL COMMUNICATION AND USER-GENERATED CONTENT

Scholarship in TPC that focuses on UGC, which Daugherty, Eastin, and Bright (2008) defined as “media content created or produced by the general public rather than by paid professionals and primarily distributed on the Internet” (p. 16), has increasingly recognized the ways that this information is shaping the workplace practices of technical communicators. Indeed, this personal, publicly available information (see Naab & Sehl, 2017, p. 1258) has become a common venue that organizations use to provide support/documentation (White & Cheung, 2015), with many companies acknowledging that UGC is often highly valued by consumers (Ledbetter, 2018). For instance, TPC-focused research has extensively explored the ways that credibility and trustworthiness are established in product reviews, a specific genre of UGC (see Mackiewicz, 2010a; 2010b; 2014; 2015; Mackiewicz, Yeats, & Thornton, 2016). Consequently, practitioners are increasingly “analyz[ing], synthesiz[ing], and respond[ing] to user-generated content as part of their daily duties,” as Mackiewicz, Yeats, and Thornton (2016, p. 72; see also Mackiewicz, 2015) pointed out. Further, as Gallagher et al. (2020) stated in their study on UGC and “big data audience analysis”: “In the twenty-first century, technical communicators need to read, respond, curate, manage, and monitor user-generated content . . . ” (p. 155).

Indeed, while UGC had previously been seen as undermining the work of practitioners (Carliner, 2012), more recent scholarship has argued that this information can, in fact, inform technical communicators’ approaches to content creation and management. As Mackiewicz (2015) put it in her article on strategies to evaluate “helpfulness votes” for consumer products, “Technical communicators are playing a substantial role in the development and management of UGC” (pp. 4-5). For instance, Frith (2017) has argued that crowdsourced online forums allow technical communicators to take on “new roles as ‘community managers’” (p. 12; see also Frith, 2014). White and Cheung’s (2015) study on user-generated fantasy sports media presented options for more effectively engaging readers, and Getto and Labriola (2019) offered a heuristic for creating “user-driven content strategies” (p. 385).

Scholarship in TPC has also argued that UGC can help technical communicators better understand the subject matter of the content they are working with as well as lend insight into audience as Lam and Biggerstaff’s (2019) study on software development illustrated. This emphasis on UGC to increase audience awareness can also be seen in Ledbetter’s (2018) study on YouTube make-up tutorials and Gallagher et al.’s (2020) quantitative analysis of online comments responding to articles in The New York Times. More specifically, Ledbetter (2018) connected UGC to usability by arguing that the creation of user-generated videos suggests that “we need to broaden and deepen our understanding of what usable means [which] will enable us to better understand and account for practices . . . that diverse audiences value” (italics in original; p. 288). Gallagher et al. (2020), on the other hand, focusing on a different genre of UGC, proposed a method for analyzing big datasets. They argued that their approach “can assist technical communicators with better understanding the habits and exchanges of their participatory users because this understanding can help them design better commenting practices, procedures, and functionalities” (p. 155; emphasis added)—a goal we share and account for in the UGC commenting heuristic we present later.

Content analysis is an established methodology in TPC research that has been used both qualitatively (e.g., Geisler, 2018) and quantitatively (e.g., Brumberger & Lauer, 2015)—including by Gallagher et al. (2020)—to “expose hidden connections among concepts, reveal relationships among ideas that initially seem unconnected, and inform the decision-making processes associated with many technical communication practices” (Thayer, Evans, McBride, Queen, & Spyridakis, 2007, p. 267). This method has also routinely been used in UGC-focused research in communications-related fields (Naab & Sehl, 2017) and in usability studies on mHealth apps (e.g., Jake-Schoffman et al., 2017; Liew et al., 2019; Middelweerd et al., 2014) albeit with a focus on the content of the app rather than the content of patient/user feedback. Indeed, the extent to which UGC might be used qualitatively to better understand users’ practices within the context of mHealth apps from a TPC perspective has not been explored.

This study addresses this gap by reporting the results of a project in which we analyzed online review comments for a civilian first responder mHealth app that draws upon off-duty healthcare workers and those trained in CPR to assist during SCA (Welhausen & Bivens, 2019). More specifically, we use a scaffolded, qualitative content analysis approach to argue that this specific genre of UGC can lend insight into users’ functional and productive usability practices, which can, in turn, inform the workplace practices of practitioners engaged in UX work. Like Gallagher et al. (2020), we start by using UGC to understand audiences and their use of a specific technology: the PulsePoint Respond app. However, our qualitative approach allowed us to tease out specific categories for classifying comments that we then use to develop a UGC commenting heuristic. Practitioners can use this heuristic to assist and/or prompt users to provide more actionable and valuable information that can be extracted and used to inform their UX work with mHealth apps.

DATA ANALYSIS METHOD

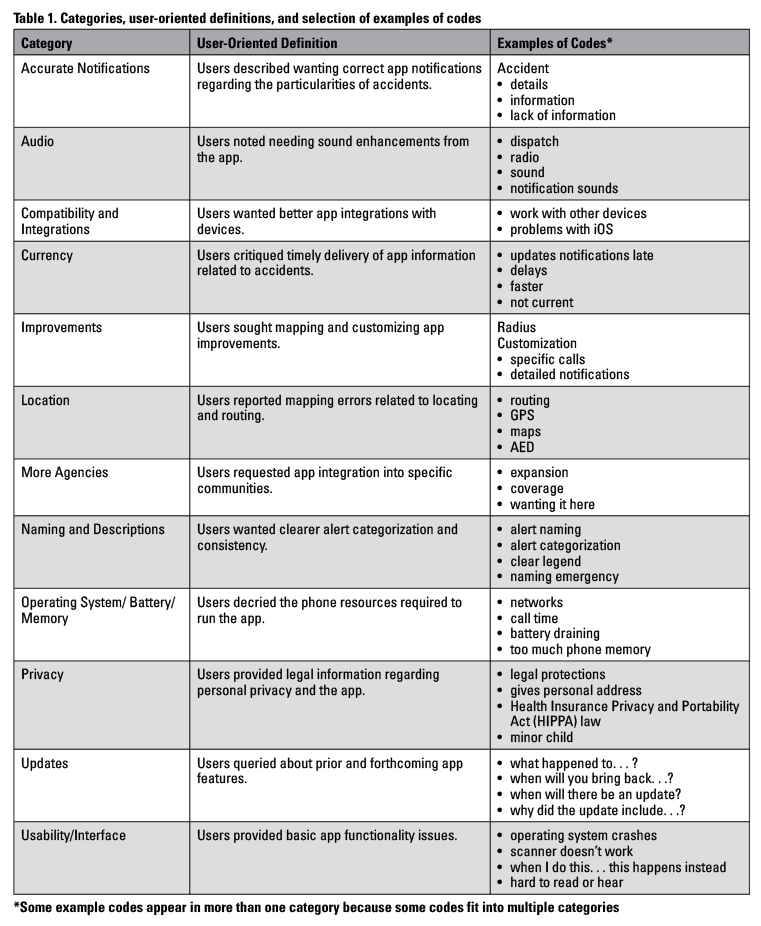

Our sample consisted of 599 review comments from the iOS platform posted between September 23, 2016 and November 5, 2019 (the date we stopped compiling comments).1 Our research team for the final analysis portion of the study consisted of the two authors and two undergraduate student research assistants. Because our project was funded and included working with students, we used a hybrid method of 1) open card sorting and 2) affinity diagramming to analyze the UGC we collected as a research team. Independently and prior to meeting as a group, each team member used open card sorting to familiarize themselves with the comments and independently create preliminary codes. We then convened as a group and conducted a training session during which we discussed our independent open card sorting results, talked through coding discrepancies, agreed upon final categories, and defined each category. These categories are shown in Table 1. After this step, we used affinity diagramming to classify the same preliminary dataset of 486 comments that each research team member had previously independently coded via open card sorting. At this point, we printed out the comments, attached these comments to sticky notes, and divided the stack of these notes into fourths. Each member of our research team was then responsible for categorizing one of the stacks (i.e., approximately one-fourth of the preliminary dataset) via affinity diagramming. Based on our hybrid card sorting-affinity diagramming process and discussions, Table 1 below includes the final categories, the user-oriented definitions of the categories that we created, and examples of codes we used to generate these definitions.

Narrowing qualitative data (i.e., the qualitative data reduction process) is typically used to begin analyzing field notes and transcriptions (Miles & Huberman, 1994). Additionally, we simplified our dataset by eliminating user comments that did not provide substantive or actionable feedback. To do this work, during open card sorting each member of the research team removed comments (both positive and negative) from their coding stack that only gave a general, holistic assessment/opinion about the app and did not provide specific, substantive detail(s) about the user’s experience. If a team member was unsure as to whether a comment was substantive, during the training session another team member reviewed the comment, and both team members worked together to reach consensus. We categorized these non-substantive comments as “unusable” because they provided general praise or disdain without a reason (e.g., “app works great!” or “very informative”) or developers for the PulsePoint Foundation would not have been able to make changes to the app based on the comment’s content. To illustrate, one respondent stated: “We live near a major thoroughfare that has a bunch of accidents randomly throughout the day and night. This app lets me know proactively to avoid the thoroughfare.” Another stated: “This app got me motivated to sign up for CPR classes. Love listening to the local radio activity. Now when fire and ambulance go by my office, I know what they are responding to. Awesome!” These statements, while not included in our content analysis categories described in Table 1, are still valuable examples of the kinds of comments many users made and were used to inform the prompts included in our UGC commenting heuristic described later.

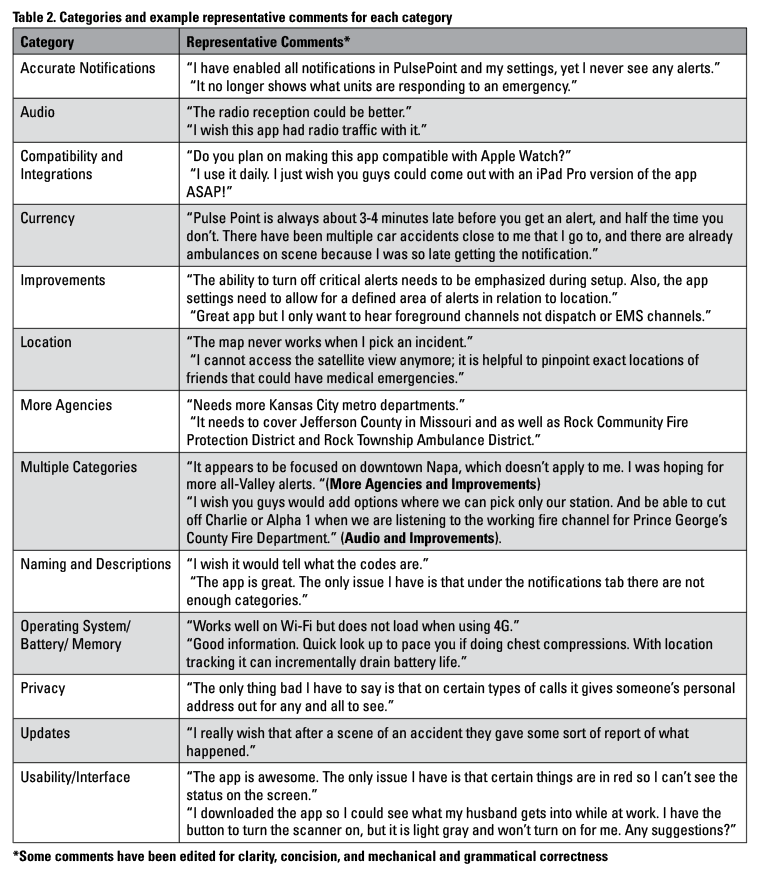

Our analytical process in creating the categories described in Table 1 involved iteratively generating and defining categories directly from our dataset of UGC rather than using those included in an existing usability heuristic such as Morville’s (U.S. Dept. of Health and Human Services, 2021) well-known honeycomb diagram or Nielsen’s (2012) classifications. It also provided an opportunity for our variously skilled research team to acquaint ourselves with the comments in our dataset prior to affinity diagramming and to scaffold the overall process for the novice undergraduate researchers on our team (we describe this pedagogical scaffolding process in Bivens & Welhausen, 2021). Comments that focused only on the AED app were also eliminated. A total of 599 comments (the 486 in the preliminary dataset plus an additional 113 comments that had recently been harvested from the iOS platform) were then categorized independently based on the in-person norming we conducted. In total, 307 comments were not usable, which was over 50% of the comments we downloaded. Thus, our final dataset consisted of 292 comments that were classified using the categories shown in Table 2 below, which also includes representative example comments. All the comments assigned to each category during the affinity diagramming stage were reviewed by the authors. To resolve discrepancies and disagreements, the team discussed the problematic comment and came to consensus as a group to assign the category.
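The data reduction arithmetic described above can be checked with a short calculation. The following is a minimal sketch rather than part of the study's method; the variable names are illustrative, and the figures are the counts reported in this section.

```python
# Counts reported in the Data Analysis Method section.
preliminary = 486  # comments in the preliminary dataset
additional = 113   # comments harvested later from the iOS platform
unusable = 307     # comments set aside as not substantive or actionable

total = preliminary + additional   # all comments in the sample
final_sample = total - unusable    # comments retained for categorization
unusable_share = unusable / total  # proportion of comments set aside

print(total, final_sample, f"{unusable_share:.1%}")
```

Run as written, this reproduces the 599-comment sample and the 292-comment final dataset, and it shows that the unusable comments make up slightly more than half of the sample.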

RESULTS

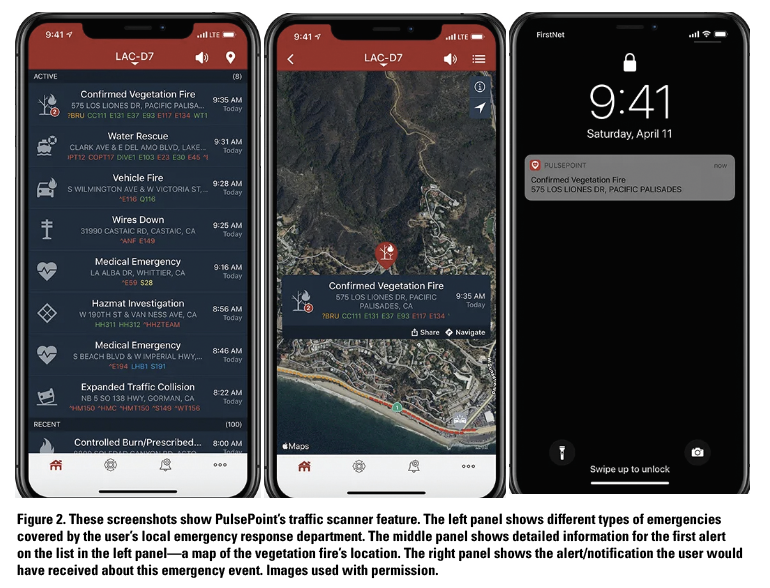

As we stated earlier, both PulsePoint apps were created primarily to reduce deaths from SCA. Thus, the PulsePoint Foundation positions its apps primarily as civilian first responder tools. However, we found that most of the UGC in our dataset addressed specific features of the traffic scanner, a functionality that lists major emergency events covered by the user’s local emergency response department (e.g., “traffic collision,” “medical emergency,” “vegetation fire”). More specifically, when users launch PulsePoint Respond, the traffic scanner feature loads first (see Figure 2; left panel). Users can use their touchscreen to select an alert in order to see more detailed information, which appears on a map with its location (Figure 2; middle panel). Users can also set the app’s notifications feature to receive alerts for specific kinds of emergencies (see Figure 2; right panel).

Indeed, most review comments discussed the traffic scanner feature, with only a few comments in our dataset specifically describing the user’s experience responding to or attempting to respond to an alert to perform CPR. To illustrate, one reviewer stated: “I was at work [when] the alert went off . . . CPR needed suite 1100 . . . I ran to suite 1100 . . . [she was] unresponsive and pulseless . . . I started CPR . . . did 2 rounds . . . she had pulses. Wow. That was amazing!” These kinds of responses, however, appeared very infrequently. Thus, it could be argued that PulsePoint Respond is primarily an emergency alert tool rather than an emergency intervention app. At the very least, it is both, which demonstrates that some users use the app in more routine, less intervening ways: to check the status of traffic and/or emergency events in their area, much like they might use Waze or Google Maps, and not necessarily to provide CPR to those who experience SCA.

Figure 3 shows the number of comments in each of the major categories described in Tables 1 and 2. Most comments in our UGC dataset critiqued the Currency of the app (that is, the delivery of app information related to accidents), followed by More Agencies, Audio, and Improvements, respectively. Privacy was the least common category in our analysis, followed by Operating System/Battery/Memory, Updates, and Compatibility and Integrations.
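For readers who want to reproduce this ranking, the following minimal sketch sorts the twelve categories by frequency. It assumes that the per-category counts listed in the abstract are the same counts plotted in Figure 3; the variable names are illustrative.

```python
# Per-category counts as listed in the abstract (assumed to match Figure 3).
category_counts = {
    "Accurate Notifications": 28,
    "Audio": 49,
    "Compatibility and Integrations": 8,
    "Currency": 58,
    "Improvements": 49,
    "Location": 27,
    "More Agencies": 50,
    "Naming and Descriptions": 41,
    "Operating System/Battery/Memory": 6,
    "Privacy": 4,
    "Updates": 8,
    "Usability/Interface": 23,
}

# Sort from most to least frequent; ties keep their listed order.
ranked = sorted(category_counts.items(), key=lambda item: item[1], reverse=True)

most_common = [name for name, _ in ranked[:4]]
least_common = [name for name, _ in ranked[-4:]]

for name, count in ranked:
    print(f"{count:>3}  {name}")
```

Note that Audio and Improvements are tied at 49 comments, and Updates and Compatibility and Integrations are tied at 8, so the order within each tied pair is arbitrary.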

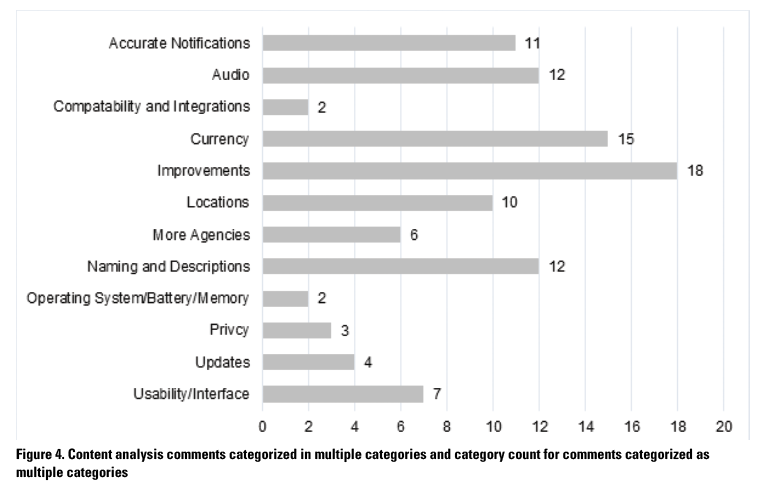

Forty-five (45) comments were categorized as Multiple Categories; that is, each of these comments could be classified in more than one of our categories. Figure 4 shows how these 45 comments were distributed across the other categories. Every Multiple Categories comment was assigned to at least two categories, and some were assigned to three, four, or even five. To illustrate, 18 of the 45 comments in Multiple Categories were classified as Improvements, 15 were classified as Currency, 12 as Audio and Naming and Descriptions, and 11 as Accurate Notifications.
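Because each Multiple Categories comment counts toward every category assigned to it, the per-category tallies can add up to more than the number of comments. The following minimal sketch illustrates this kind of tally. The three coded comments are hypothetical placeholders rather than data from the study, and `tally_assignments` is an illustrative helper name.

```python
from collections import Counter

# Hypothetical coded comments: each ID maps to the categories assigned to it.
coded_comments = {
    "c1": {"Improvements", "Currency"},
    "c2": {"Audio", "Naming and Descriptions", "Improvements"},
    "c3": {"Accurate Notifications", "Currency"},
}


def tally_assignments(coded):
    """Count how many multi-category comments were assigned to each category."""
    counts = Counter()
    for comment_id, categories in coded.items():
        if len(categories) < 2:
            raise ValueError(f"{comment_id} has fewer than two categories")
        counts.update(categories)  # one count per assigned category
    return counts


tallies = tally_assignments(coded_comments)
print(len(coded_comments), "comments,", sum(tallies.values()), "category assignments")
```

In this hypothetical example, three comments produce seven category assignments, which is why the counts in a breakdown like Figure 4 should not be expected to sum to 45.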

DISCUSSION

In this study, we explored the citizen first responder app PulsePoint Respond from a broad UX perspective, which Law et al. (2009) have defined as “dynamic, context-dependent, and subjective” (p. 719). Indeed, PulsePoint Respond is unique among mHealth apps because although it is still intervention-focused, this tool (along with PulsePoint AED) was designed to be used in a very specific healthcare context to address a very specific purpose: connecting citizens with other citizens who can provide life-saving care (i.e., CPR and/or defibrillation). Overall, the results of our analysis demonstrate that users find value in the app well beyond the CPR alert functionality, as the vast majority of review comments focused on the traffic scanner (see Figures 3 and 4). This major finding alone, we suggest, reflects the move toward productive usability; that is, users are using the app specifically in ways that meet their needs. More to the point, it demonstrates that while PulsePoint Respond has been positioned as primarily an emergency response tool, it is being used in routine ways.

Continuing for a moment with our observation of routine rather than emergency use, our preliminary results published elsewhere (Welhausen & Bivens, 2019) also found that some reviewers self-identified as current or retired emergency response workers. More specifically, these reviewers discussed using the app to make, respond to, or to be informed about emergency response decisions, describing the ways they used the app to “dispatch” and/or “clear” calls. Interestingly, some of these users also reported using the app to perform their emergency response jobs. As one reviewer stated: “I use this app at work . . . to know what units are on calls.” These users have specialized knowledge, experience, and expertise, which made their review comments particularly valuable in informing our UGC commenting heuristic.

The categories in Table 1, too, demonstrate that functional-level usability concerns remain important. More specifically, we suggest that review comments classified as Accurate Notifications, Audio, Location, Operating System/Battery/Memory, and Usability/Interface are concerned with how these features work—that is, “how well … user[s] can navigate through a variety of tasks that [this] end product was designed to facilitate” (Lauer & Brumberger, 2016, p. 249). Conversely, categories like Compatibility and Integrations, Currency, Improvements, More Agencies, Naming and Descriptions, Privacy, and Updates, we propose, focus specifically on productive usability considerations. More to the point, rather than describing specific problems with the current functional capabilities of the app, the comments in these categories forecast actions that users want to perform that are not currently supported. To illustrate using our definitions from Table 1 and our examples from Table 2, users want the app to be integrated with other devices (e.g., Compatibility and Integrations) like the Apple Watch, for instance. They want more timely delivery of app information related to accidents (e.g., Currency). They also want the app to attend to Privacy considerations in the way that some information is presented, and they want particular kinds of Updates like being able to access after-accident reports, as the example comment for this category in Table 2 demonstrates. Making changes to the app to address the feedback in these categories is not just a matter of fixing the app’s programming as it currently exists. Rather, responding to these categories requires including new functionalities as well as modifying existing ones. Thus, the comments in these categories describe the “productive” ways that users want to use PulsePoint Respond.

APPLYING A USER-GENERATED CONTENT COMMENTING HEURISTIC

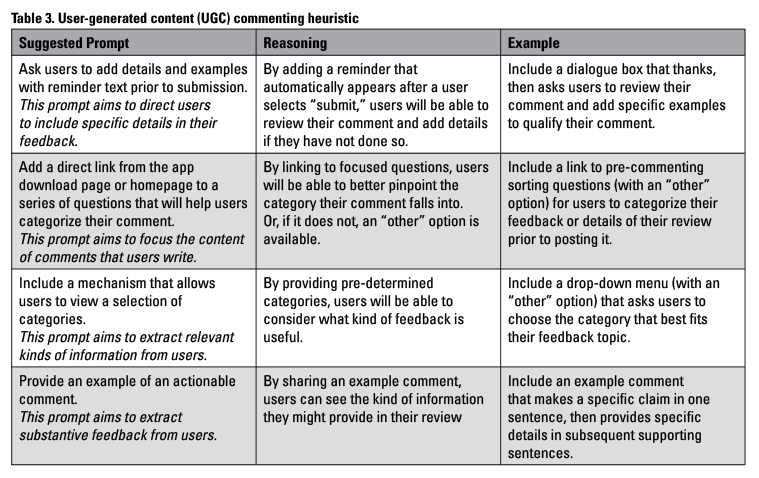

In this section, we draw from the content of the comments describing these productive usability needs to propose the UGC commenting heuristic shown below in Table 3. More specifically, Table 3 describes prompts that can be transformed into a menu that allows users to categorize their comment type and/or adapted into a series of example comments pinned on the app home or download pages. Integrating these prompts can then guide the response of users who choose to leave comments.

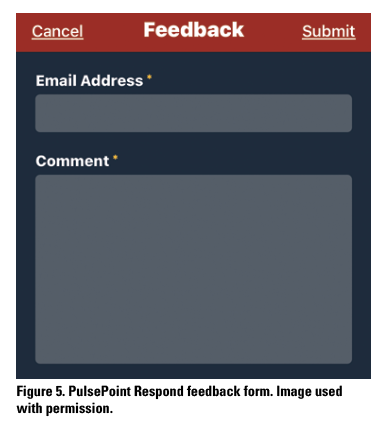

To illustrate, content managers might use elements of the heuristic in a drop-down menu like the comment field in PulsePoint Respond’s feedback form (see Figure 5). The app’s feedback form might also include predetermined categories or a dialogue box reminder to include specific examples. Elements of the commenting heuristic can also be used to generate pinned example comments that might be included, when possible, on an app’s home or download pages such as the page on the PulsePoint Foundation’s website where users can download the organization’s two apps (see www.pulsepoint.org/download). These prompts can easily be integrated by other apps’ content managers and web designers.
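As a minimal sketch of how such a feedback form might apply these ideas, the code below pairs a fixed category menu with a reminder to include a specific example. It is an assumption-laden illustration, not PulsePoint's implementation: the menu reuses the category names from our analysis rather than the prompts in Table 3, and the `Feedback` class, the `reminder_prompts` function, the `MIN_WORDS` threshold, and the sample comment text are all hypothetical.

```python
from dataclasses import dataclass

# Menu options: the twelve category names from the content analysis (assumed menu).
CATEGORIES = (
    "Accurate Notifications",
    "Audio",
    "Compatibility and Integrations",
    "Currency",
    "Improvements",
    "Location",
    "More Agencies",
    "Naming and Descriptions",
    "Operating System/Battery/Memory",
    "Privacy",
    "Updates",
    "Usability/Interface",
)

# Hypothetical threshold: word count is only a crude proxy for a substantive comment.
MIN_WORDS = 8


@dataclass
class Feedback:
    category: str
    comment: str


def reminder_prompts(feedback: Feedback) -> list[str]:
    """Return reminders to show the user; an empty list means none are needed."""
    prompts = []
    if feedback.category not in CATEGORIES:
        prompts.append("Please choose the category that best fits your comment.")
    if len(feedback.comment.split()) < MIN_WORDS:
        prompts.append("Please describe what you were trying to do and what happened.")
    return prompts


# Hypothetical submissions: one vague, one with a specific example.
vague = Feedback(category="Audio", comment="app works great!")
detailed = Feedback(
    category="Audio",
    comment="The radio stream cuts out whenever I lock my phone during a call.",
)

print(reminder_prompts(vague))
print(reminder_prompts(detailed))
```

A form built this way would still accept general praise, but it would nudge users toward the kind of detail that makes a comment actionable. A production version would likely need a better signal of substance than word count.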

As another example showing how our commenting heuristic might be implemented, technical marketing writers could also apply the heuristic to learn more about the audiences they research by prompting users to describe their complaints and compliments in feedback forms (as shown in Figure 5). For example, PulsePoint Respond users find the app useful, yet they also identify functionalities that can be improved. Cueing these users to include detailed descriptions to contextualize and qualify their complaint or compliment would benefit technical marketing writers as they seek to understand their audiences. These writers might also incorporate options for users who leave feedback to include demographic information. Indeed, as our sample scenarios demonstrate, our heuristic in Table 3 is designed to help practitioners gather detailed, focused, relevant, substantive feedback from mHealth app users by directing and structuring the commentary they provide in their reviews.

STUDY LIMITATIONS, JUSTIFICATION, AND FINAL THOUGHTS

Although some usability research can be completed remotely (for example, with TryMyUI or Validately), more traditional approaches generally require direct interactions with users. More specifically, in order to learn how users use a particular artifact (e.g., software/support documentation, video tutorials, mHealth apps), usability researchers need to observe users’ interactions with that artifact. Yet as we discuss in the literature review, scholarship in TPC contends that UGC offers new opportunities for technical communication practitioners to develop and manage content for their users and to learn more about them. At the same time, because UGC does not necessarily provide the same kinds of information as usability testing, relying on UGC to understand users’ practices and experiences does pose limitations.

In our study, for instance, although we have endeavored to ensure consistency in our interpretation of review comments through our coding and categorization method described earlier, it was not possible to follow up with reviewers to clarify their comments or to acquire additional information. Thus, the comments in our UGC dataset may not reflect how most PulsePoint Respond users are experiencing the app’s functionalities. Further, individual users might comment on a particular problem and/or experience that is unique to them and not necessarily representative. Such discrepancies are easier to detect in traditional usability testing because all participants perform the same tasks, participants tend to have similar characteristics (e.g., all are novice or expert users), and usability practitioners can interact with users directly.

That said, our approach in this article does not seek to replace other usability methods like observations and/or usability testing, for instance. Rather, we have sought to uncover information about users’ experiences that may not be procured through these more structured approaches that are designed specifically to tease out functional usability problems. Indeed, because review comments are open-ended, users can, in theory, focus on the aspects of the app that most interest them rather than being guided by specific evaluation tasks and criteria created by app developers, which may not reflect users’ values and interests.

At the same time, review comments, too, are subjective and opinion-based, and they may not necessarily be useful. As we stated earlier, our total pool of UGC was initially 599 comments. However, our final dataset was whittled down considerably after eliminating comments that offered no actionable information. Indeed, we were surprised that over half of the comments that we harvested were unusable. In reality, tens of thousands of reviews have been posted about the PulsePoint apps to the iOS platform, and we consulted first with the PulsePoint Foundation and later with a programmer to determine if all of these comments could be scraped. The programmer who assisted us could only download the most recent 500 comments, and the PulsePoint Foundation did not have access to the remainder. After our initial download, we were able to pull some new comments. However, ultimately, we were limited in the total number we could harvest. Had we been able to acquire all of these comments, we would have then needed to use a quantitative analytical approach [such as that developed by Gallagher et al. (2020)], which would have required us to rely on “computational approaches” (p. 156), as they did, to extract themes. As they explain, this is a “labor-intensive” process that can be prohibitive for multiple reasons (see pp. 166-167).

Gallagher et al.’s (2020) methodology was well suited for their subject matter and the goals of their study. However, working with our much smaller dataset had some clear benefits. It allowed us to eliminate unusable comments—a task, arguably, that would not have been possible had we been working with a very large dataset of UGC. Indeed, using a software program to extract keywords would have shown us major linguistic patterns in our UGC and thus would have lent a different kind of insight into our data. However, this technology would not have been able to differentiate between actionable and not actionable comments. Rather, our qualitative analytical approach allowed us to tease out nuanced themes (codes) directly from the UGC we used, which we then refined into detailed categories and ultimately a UGC commenting heuristic. Thus, we suggest that our study does not offer a competing perspective to Gallagher et al. (2020) but a complementary approach that may be more appropriate for particular kinds of usability studies that draw on UGC and smaller sample sizes. At the same time, we acknowledge that our commenting prompts might not be of interest to technical communicators with physical access to users during usability testing. However, if physical access to users is not possible—the ongoing COVID-19 pandemic provides an example—it might be advisable to consider and use other sources of user feedback.

Finally, it is important to acknowledge that the comments we collected from the iOS platform span a three-year time frame, and during this period multiple upgrades were made to the app. As this article goes to press, PulsePoint Respond is on version 4.12, whereas on September 3, 2016, the app was on version 3.16 (Apple Store Preview, 2021). Thus, some of the functional and productive usability concerns we discuss may have already been addressed. Indeed, the traffic scanner function is now featured more prominently on the PulsePoint Foundation’s website (perhaps in response to the organization recognizing its popularity among users) and in the description of the app on Apple’s App Store than when we conducted our analysis for this project. Nonetheless, our research highlights the importance and utility of these comments and offers strategies for soliciting more substantive, valuable feedback from users who wish to provide app reviews and comments, generally. As an additional point here, app developers and usability practitioners will also be aware of changes that have already been made to the app and its different iterations. Thus, they can group review comments chronologically and make decisions about how to implement review feedback based on the most current version of the app.
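The chronological grouping suggested above can be sketched in a few lines of Python. This is an illustrative sketch only: it assumes each review record carries a posting date and the app version it was written against, which may not be true of every export. The function names and the sample records are hypothetical; only the version numbers 3.16 and 4.12 and the date September 3, 2016, come from this article.

```python
from collections import defaultdict
from datetime import date

# Hypothetical sample records; the comment text and most dates are
# invented for illustration and are not drawn from our dataset.
SAMPLE = [
    {"posted": date(2017, 5, 1), "version": "3.16", "text": "Sample comment B"},
    {"posted": date(2016, 9, 3), "version": "3.16", "text": "Sample comment A"},
    {"posted": date(2019, 11, 2), "version": "4.12", "text": "Sample comment C"},
]


def version_key(version):
    """Turn '4.12' into (4, 12) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def group_by_version(reviews):
    """Group reviews by app version, oldest comment first in each group."""
    grouped = defaultdict(list)
    for review in sorted(reviews, key=lambda r: r["posted"]):
        grouped[review["version"]].append(review)
    return dict(grouped)


def latest_version_comments(reviews):
    """Return the newest version and the comments written against it."""
    grouped = group_by_version(reviews)
    newest = max(grouped, key=version_key)
    return newest, grouped[newest]
```

Comparing versions as tuples of integers rather than as strings is a deliberate choice here, since a string comparison would rank a hypothetical version 4.2 above 4.12.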

In Naab and Sehl’s (2017) systematic review of UGC research in communication-related fields, they point out—and we agree—that disciplinary lines naturally cross where research questions intermingle regarding research on recipients and UGC. In this way, our work continues along the same line as Gallagher et al.’s (2020) study while also contributing to ample UGC content analysis in technical communication writ large as well as the push toward integrating users “as collaborators” (Getto & Labriola, 2019, p. 396) in the creation of information.

ACKNOWLEDGMENTS

We are grateful for an early career grant from the Association for Computing Machinery’s Special Interest Group on Design of Communication, which enabled this research project, as well as to Auburn University’s English Department’s Recognition and Development Committee for funding Welhausen’s research travel to Chicago. We appreciate the PulsePoint Foundation’s willingness to work with us and their work on PulsePoint Respond. Further, we gratefully acknowledge Gustav Karl Henrik Wiberg’s work retrieving the iOS user comments and his troubleshooting attempts to gather as many comments as possible. We also share our appreciation for Yocelyn Cabañas’s and Qahir Muhammad’s analytical work, as well as Ailey Hall’s and Luke Richey’s labor as the student research assistants for this project. The Newberry Library and Harold Washington College, one of the City Colleges of Chicago, also provided space for various research team meetings; we are thankful for their willingness to do so. And finally, we are thankful for the anonymous reviewers and their comments, Sam Dragga’s editorial guidance, and Miriam Williams’ support at various stages as we worked within the publication process.

REFERENCES

Apple Store Preview. (2021). PulsePoint respond. What’s new. Version history. Retrieved from https://apps.apple.com/us/app/pulsepoint-respond/id500772134

Arduser, L. (2018). Impatient patients: A DIY usability approach in diabetes wearable technologies. Communication Design Quarterly, 5(4), 31-39. https://doi.org/10.1145/3188387.3188390

Bivens, K. M., Arduser, L., Welhausen, C. A., & Faris, M. J. (2018). A multisensory literacy approach to biomedical healthcare technologies: Aural, tactile, and visual layered health literacies. Kairos: A Journal of Rhetoric, Technology, and Pedagogy, 22(2). http://kairos.technorhetoric.net/22.2/topoi/bivens-et-al/literature.html

Bivens, K. M., & Welhausen, C. A. (2021). Using a hybrid card sorting-affinity diagramming method to teach content analysis. Communication Design Quarterly. Advance Online Publication. https://doi.org/10.1145/3468859.3468860

Brumberger, E., & Lauer, C. (2015). The evolution of technical communication: An analysis of industry job postings. Technical Communication, 62(4), 224-243.

Carliner, S. (2012). The three approaches to professionalization in technical communication. Technical Communication, 59(1), 49-65.

Daugherty, T., Eastin, M. S., & Bright, L. (2008). Exploring consumer motivations for creating user-generated content. Journal of Interactive Advertising, 8(2), 16-25.

Fiordelli, M., Diviani, N., & Schulz, P. J. (2013). Mapping mHealth research: A decade of evolution. Journal of Medical Internet Research, 15(5). https://doi.org/10.2196/jmir.2430

Frith, J. (2014). Forum moderation as technical communication: The social web and employment opportunities for technical communicators. Technical Communication, 61(3), 173-184.

Frith, J. (2017). Forum design and the changing landscape of crowd-sourced help information. Communication Design Quarterly Review, 4(2), 12-22. https://doi.org/10.1145/3068698.3068700

Gallagher, J. R., Chen, Y., Wagner, K., Wang, X., Zeng, J., & Kong, A. L. (2020). Peering into the internet abyss: Using big data audience analysis to understand online comments. Technical Communication Quarterly, 29(2), 155-173. https://doi.org/10.1080/10572252.2019.1634766

Geisler, C. (2018). Coding for language complexity: The interplay among methodological commitments, tools, and workflow in writing research. Written Communication, 35(2), 215-249.

Getto, G., & Labriola, J. T. (2019). “Hey, such-and-such on the internet has suggested . . . ”: How to create content models that invite user participation. IEEE Transactions on Professional Communication, 62(4), 385-397. https://doi.org/10.1109/TPC.2019.2946996

Hansen, C. M., Kragholm, K., Pearson, D. A., Tyson, C., Monk, L., Myers, B., Nelson, D., Dupre, M. E., Fosbøl, E. L., Jollis, J. G., Strauss, B., Anderson, M. L., McNally, B., & Granger, C. B. (2015). Association of bystander and first-responder intervention with survival after out-of-hospital cardiac arrest in North Carolina, 2010-2013. JAMA, 314(3), 255-264. https://doi.org/10.1001/jama.2015.7938

Jake-Schoffman, D. E., Silfee, V. J., Waring, M. E., Boudreaux, E. D., Sadasivam, R. S., Mullen, S. P., Carey, J. L., Hayes, R. B., Ding, E. Y., Bennett, G. G., & Pagoto, S. L. (2017). Methods for evaluating the content, usability, and efficacy of commercial mobile health apps. JMIR mHealth and uHealth, 5(12). https://doi.org/10.2196/mhealth.8758

Lam, C., & Biggerstaff, E. (2019). Finding stories in the threads: Can technical communication students leverage user-generated content to gain subject-matter familiarity? IEEE Transactions on Professional Communication, 62(4), 334-350. https://doi.org/10.1109/TPC.2019.2946995

Law, E. L. C., Roto, V., Hassenzahl, M., Vermeeren, A. P., & Kort, J. (2009, April). Understanding, scoping and defining user experience: a survey approach. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 719-728).

Lauer, C., & Brumberger, E. (2016). Technical communication as user experience in a broadening industry landscape. Technical Communication, 63(3), 248-264.

Ledbetter, L. (2018). The rhetorical work of YouTube’s beauty community: Relationship- and identity-building in user-created procedural discourse. Technical Communication Quarterly, 27(4), 287-299. https://doi.org/10.1080/10572252.2018.1518950

Liew, M. S., Zhang, J., See, J., & Ong, Y. L. (2019). Usability challenges for health and wellness mobile apps: Mixed-methods study among mHealth experts and consumers. JMIR mHealth and uHealth, 7(1), e12160. https://doi.org/10.2196/12160

Mackiewicz, J. (2010a). Assertions of expertise in online product reviews. Journal of Business and Technical Communication, 24(1), 3-28.

Mackiewicz, J. (2010b). The co-construction of credibility in online product reviews. Technical Communication Quarterly, 19(4), 403-426.

Mackiewicz, J. (2014). Motivating quality: The impact of amateur editors’ suggestions on user-generated content at Epinions.com. Journal of Business and Technical Communication, 28(4), 419-446.

Mackiewicz, J. (2015). Quality in product reviews: What technical communicators should know. Technical Communication, 62(1), 3-18.

Mackiewicz, J., Yeats, D., & Thornton, T. (2016). The impact of review environment on review credibility. IEEE Transactions on Professional Communication, 59(2), 71-88. https://doi.org/10.1109/TPC.2016.2527249

Middelweerd, A., Mollee, J. S., van der Wal, C. N., Brug, J., & Te Velde, S. J. (2014). Apps to promote physical activity among adults: A review and content analysis. International Journal of Behavioral Nutrition and Physical Activity, 11(1), 97.

Miles, M. B., & Huberman, A. M. (1994). Qualitative Data Analysis: An Expanded Sourcebook. Sage.

Mirel, B. (2004). Interaction Design for Complex Problem Solving: Developing Useful and Usable Software. Morgan Kaufmann.

Naab, T. K., & Sehl, A. (2017). Studies of user-generated content: A systematic review. Journalism, 18(10), 1256-1273. https://doi.org/10.1177/1464884916673557

Nielsen, J. (2012, January 4). Usability 101: Introduction to usability. Retrieved from http://www.nngroup.com/articles/usability-101-introduction-to-usability/

Pohl, M. (2019). 325,000 mobile health apps available in 2017–Android now the leading mHealth platform. Research 2 Guidance. Retrieved from https://research2guidance.com/325000-mobile-health-apps-available-in-2017/

Redish, J., & Barnum, C. (2011). Overlap, influence, intertwining: The interplay of UX and technical communication. Journal of Usability Studies, 6(3), 90-101.

Simmons, W. M., & Zoetewey, M. W. (2012). Productive usability: Fostering civic engagement and creating more useful online spaces for public deliberation. Technical Communication Quarterly, 21(3), 251-276. https://doi.org/10.1080/10572252.2012.673953

Sudden Cardiac Arrest Foundation. (2019). Retrieved from https://www.sca-aware.org/

Thayer, A., Evans, M., McBride, A., Queen, M., & Spyridakis, J. (2007). Content analysis as a best practice in technical communication research. Journal of Technical Writing and Communication, 37(3), 267-279.

U.S. Dept. of Health and Human Services. (2021, June 4). User Experience Basics. Usability.gov. Retrieved from https://www.usability.gov/what-and-why/user-experience.html

Welhausen, C. A. (2017). At your own risk: User-contributed flu maps, participatory surveillance, and an emergent DIY risk assessment ethic. Communication Design Quarterly Review, 5(2), 51-61. https://doi.org/10.1145/3131201.3131206

Welhausen, C. A., & Bivens, K. M. (2019, October). Using content analysis to explore users’ perceptions and experiences using a novel citizen first responder app. In Proceedings of the 37th ACM International Conference on the Design of Communication (pp. 1-6).

White, R., & Cheung, M. (2015). Communication of fantasy sports: A comparative study of user-generated content by professional and amateur writers. IEEE Transactions on Professional Communication, 58(2), 192-207. https://doi.org/10.1109/TPC.2015.2430051

ABOUT THE AUTHORS

Candice A. Welhausen is an assistant professor of English at Auburn University with an expertise in technical and professional communication. Before becoming an academic, she was a technical writer/editor at the University of New Mexico Health Sciences Center in the department formerly known as Epidemiology and Cancer Control. Her scholarship is situated at the intersection of technical communication, visual communication and information design, and the rhetoric of health and medicine. She is available at caw0103@auburn.edu.

Kristin Marie Bivens is the scientific editor for the Institute of Social and Preventive Medicine at the University of Bern, Switzerland; and she is an associate professor of English at Harold Washington College—one of the City Colleges of Chicago (on leave). Her scholarship examines the circulation of information from expert to non-expert audiences in critical care contexts (e.g., intensive care units, sudden cardiac arrest, and opioid overdose) with aims to offer ameliorative suggestions to enhance communication. She is available at kristin.bivens@ispm.unibe.ch.

Manuscript received 30 September 2020, revised 10 November 2020; accepted 10 December 2020.