doi: https://doi.org/10.55177/tc734125

By Satu Rantakokko

ABSTRACT

Purpose: Extended reality (XR) is a promising new medium that creates environments combining real and virtual elements or offers a completely virtual environment for people to experience. In the field of technical communication, XR offers a plethora of possibilities, such as augmenting critical instructions in a work environment.

On the downside, XR brings about challenges. For example, issues of privacy and security require more attention due to the risks involved with XR devices continuously collecting data from the users and their surroundings. More knowledge concerning the use of XR as a medium to deliver technical instructions is required. In this article, I address this need by explaining how XR handles data.

Methods: To find out how XR handles data, I used relevant previous research (33 papers and four books) as data for thematic analysis. I coded data systematically according to how XR has been used before and the phases that can be seen in the process of data handling in XR when it is used as a medium for technical instructions.

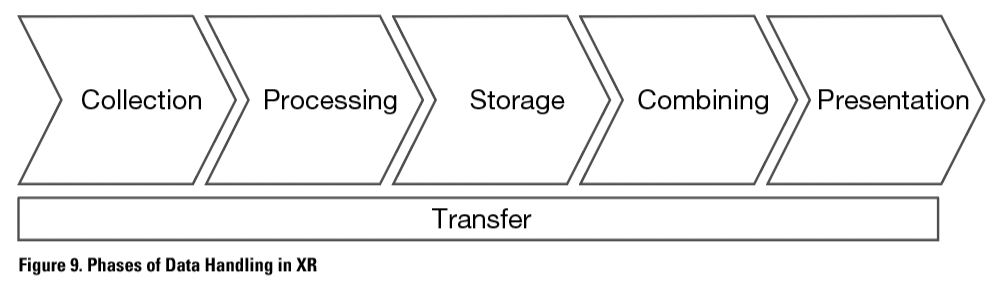

Results: The data handled in XR can be divided into instructional data, such as assembly instructions, and collected data that XR equipment collects while someone is using it. Data handling in XR can be seen as a process. Based on the thematic analysis, I found six different phases of data handling: collection, processing, storage, transfer, combining, and presentation.

Conclusion: The phases of data handling in XR illustrate in general what happens to the data in XR and what kind of data the equipment collects. My findings add to our understanding of XR as a medium to deliver technical instructions. They also offer a usable framework for mapping the differences between XR and other media as a way to deliver technical instructions.

KEYWORDS: technical instructions, extended reality, mixed reality, virtual reality, augmented reality, augmented virtuality

Practitioner’s Takeaway:

- New technologies, such as extended reality (XR), are changing the field of designing technical instructions, and more information is needed.

- XR offers new possibilities for designing technical instructions, but it also poses new challenges.

- An in-depth review of the phases of data handling in XR will help us better understand how data, such as technical instructions and data collected by XR equipment, are handled in XR technology.

- This understanding helps to prevent mistakes, such as leakage of sensitive data, by increasing understanding of how XR works as a medium for delivering technical instructions.

INTRODUCTION

During the past few years, there has been a rising interest in environments with virtual elements in the field of technical communication. Types of extended reality (XR), such as augmented reality (AR) and virtual reality (VR), have been used more and more in delivering technical instructions. Extended reality is becoming a significant part of the work and skillset of many professionals who design technical instructions. To begin using XR as a medium, a technical communicator must understand the types of additional requirements XR applications need for handling data.

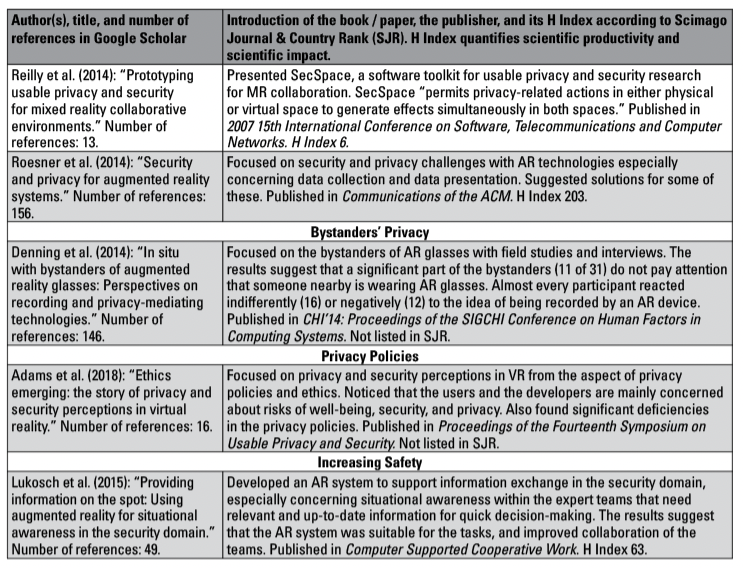

Extended reality is an umbrella term that includes both completely artificial virtual reality and mixed reality (MR) that combines real and virtual elements. As Fast-Berglund, Gong, & Li (2018, p. 32) defined it, the term “extended reality” refers to “all real-and-virtual combined environments and human-machine interactions generated by computer technology and wearables.” In technical communication, XR offers numerous possibilities. For example, Figure 1 shows a screenshot from a YouTube video of augmented reality for aircraft maintenance, remote support, and training. This video illustrates how augmented elements can show where and how to use the tools needed to complete the task.

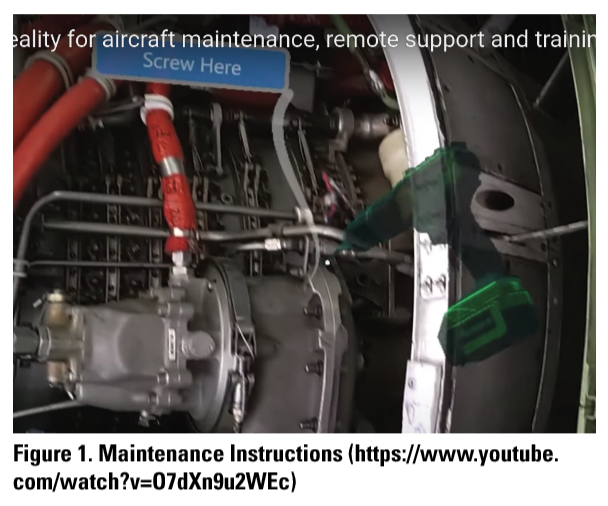

Milgram and Kishino’s (1994, p. 1321) virtuality continuum, as shown in Figure 2, illustrated the relations between different types of XR. The continuum organized the types of XR by increasing degrees of virtualization. Augmented reality (AR) means layering virtual objects on top of a real environment, while augmented virtuality (AV) adds real objects on top of a virtual environment. I will introduce the central terms and types of XR in more detail in the literature review section of the article.
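The ordering that the continuum expresses can be sketched in a few lines of Python. This is an illustration only: the numeric values are an assumption that encodes nothing more than the order of increasing virtualization, and the names `XRType`, `is_mixed_reality`, and `is_extended_reality` are hypothetical.

```python
from enum import IntEnum


class XRType(IntEnum):
    """Positions on the virtuality continuum, ordered by increasing virtualization.

    The integers are ordinal only (an assumption made for this sketch).
    """
    ACTUAL_REALITY = 0
    AUGMENTED_REALITY = 1      # virtual objects layered on a real environment
    AUGMENTED_VIRTUALITY = 2   # real objects added to a virtual environment
    VIRTUAL_REALITY = 3        # completely virtual


def is_mixed_reality(xr_type: XRType) -> bool:
    """MR lies strictly between actual reality and virtual reality."""
    return XRType.ACTUAL_REALITY < xr_type < XRType.VIRTUAL_REALITY


def is_extended_reality(xr_type: XRType) -> bool:
    """XR is the umbrella term: MR plus VR, that is, anything with virtual elements."""
    return xr_type > XRType.ACTUAL_REALITY
```

Under this sketch, AR and AV are both mixed reality, VR is extended reality but not mixed reality, and actual reality is neither.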

According to Azuma’s (1997) definition, AR combines virtual and real elements in real time, interactively and three-dimensionally. AR is the best-known type of mixed reality. Azuma’s definition is valid for the whole of XR (both MR and VR), except that VR is completely virtual and aims to exclude real elements. Papagiannis (2017) highlighted the importance of imagination and creativity for the uses of XR: it does not have to merely mimic reality. Instead, it is possible, for example, to see the invisible or hidden, or to fly and break the laws of gravity. However, there are also potential downsides. XR continuously collects data about the users and their surroundings. That data is needed, for example, to situate the virtual elements in the right places at the right time.

This collected data can include very sensitive information and is potentially vulnerable to attacks. The importance of privacy and security issues in the design of technical instructions has, therefore, rapidly increased. However, as Ahn, Gorlatova, Naghizadeh, Chiang and Mittal et al. (2018, p. 2) stated, “the field of AR security and privacy is still in its infancy,” even though AR is the most researched field of XR.

In order to address changes occurring within the field, there is a need to study XR as a whole, since different types of XR are increasingly being developed and used in conjunction with each other. During the past couple of years, a few studies have been published to meet this need. As Burova et al. (2020, p. 1) mentioned, “So far, these concepts have been studied separately despite the potential of uniting AR and VR under one comprehensive platform, including cost savings due to shorter development time, increased efficiency in training, and the possibility to integrate safety related aspects deeper into an organization’s culture and processes.”

However, it seems that most research papers on the topic focus only on a certain type of XR, on a certain task (see, for example, Albert et al., 2014). This focus gives a lot of detailed information, but a more comprehensive vision is needed. As Tham et al. (2018) pointed out, professionals working to communicate complex information have recognized the need for more knowledge about emerging technologies, including XR. We can address this need by taking a holistic approach to XR—by examining and taking an overview of how XR handles data. Because there are currently no theoretical perspectives related to XR data handling, I use the grounded theory approach to explore how XR handles data and propose a theoretical framework for future studies in this area. According to Glaser and Strauss (1968, p. 40), “as categories and properties emerge, develop in abstraction, and become related, their accumulating interrelations form an integrated central theoretical framework—the core of the emerging theory. The core becomes a theoretical guide to the further collection and analysis of data.”

The term data has a dual meaning in this article. First, it covers the instructions to be delivered by using XR, for clarity here referred to as instructional data. Second, it covers the data collected by the XR equipment, here referred to as collected data. The purpose of this research is to help professionals who design technical instructions increase their understanding of how XR works as a medium for delivering instructions.

I use the term data handling to describe what happens with the data when using XR equipment. For example, the data is stored, transferred, and presented to the user. I discuss the data handling process in XR from a wide perspective, focusing on the common features of different types of XR. To this end, I conducted a thematic analysis of previous research. As a result, I found six phases of data handling in XR: collection, processing, storage, transfer, combining, and presentation. I introduce these phases in detail in the Results section.

In this article, I focus especially on privacy and security issues because they represent an additional concern brought about by this technology, as traditional modes of technical instructions did not record data about the user, bystanders, and the environment. This focus, however, does not imply that privacy and security issues are more important than other aspects of using a new medium.

LITERATURE REVIEW

In this section, I introduce some of the most recent research concerning extended reality, mixed reality, virtual reality, augmented reality, and augmented virtuality, used in relevant ways for delivering technical instructions.

Extended Reality (XR)

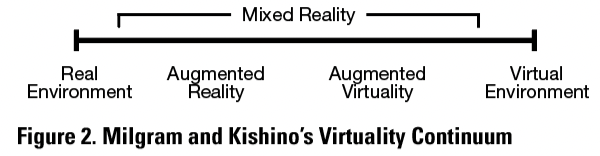

Extended reality is an umbrella term for environments with virtual elements. In Figure 3, I added extended reality in the continuum based on Milgram and Kishino’s (1994) virtuality continuum. Combining different types of XR into one project instead of focusing on just one type at a time is a relatively new phenomenon, and there are not many applications like this so far.

Burova et al. (2020) focused on industrial maintenance by developing and evaluating AR content in virtual reality with an xR Safety Kit framework, “a multipurpose VR platform with AR simulation and gaze tracking to support AR prototyping and training” (p. 2). They justified combining VR with AR by pointing out that, in this way, benefits of these different types of XR can be used while minimizing the downsides of both. According to Burova et al. (2020), VR is a viable environment for learning and training thanks to the flexibility and realism of experience, while AR-guidance in-field can improve speed, quality, and safety of work, resulting in decreased physical and mental workload. On the downside, there are challenges in AR development in authoring, context awareness, and interaction analysis.

In their research, Burova et al. (2020) focused on evaluating in-field guidance and safety warnings; their results “show the potential of utilizing VR coupled with gaze tracking for efficient industrial AR development” (p. 2). Their xR Safety Kit framework aimed to approach the challenges in interaction analysis by prototyping AR solutions within VR. Benefits of this kind of a solution include flexibility, reducing development time and costs, and having a safe and controlled way to test techniques. Burova et al.’s (2020) research is a good example of why there is a need for a wider perspective: there are benefits in using more than just one type of XR at a time.

Virtual Reality (VR)

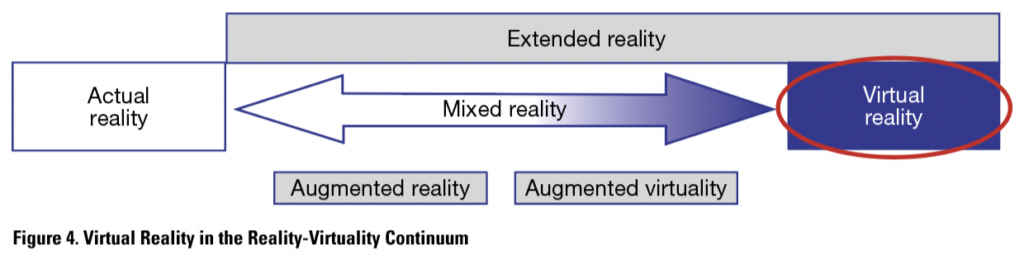

Virtual reality is the other end of the continuum, as illustrated in Figure 4. While actual reality, at the left of the continuum, has no virtual elements, VR consists only of virtual elements. VR has been used in many cases in training for new tasks. It is useful especially when training sessions would be expensive, difficult, or dangerous to conduct in a real environment.

In their research, Porter et al. (2020) focused on the effectiveness of using VR in learning electrostatics. Porter et al. (2020) also studied the relationship between preliminary VR training, earlier gaming experience, and the effectiveness of the learning process in circumstances when the training was not related to the topic to be learned. In general, pretraining improved gains, and gaming experience combined with pretraining improved them even more. However, gamers who did not receive preliminary training performed worse than other groups, with negative gains. In comparison, untrained non-gamers had small positive gains. In general, some statistical improvement in student performance was observed, as well as “evidence that VR training is beneficial for student acclimation to VR-based instruction” (p. 8). Porter et al. (2020) concluded that VR-based instructions have no effect on student understanding, meaning that VR-based instructions are not more effective compared to other media at teaching 3D topics. Porter et al.’s (2020) research brings some interesting considerations for designing technical instructions.

First, while XR-based instructions in studies show significant improvement in many aspects compared to traditional instructions, they do not always guarantee improvement in results. Therefore, it is important to consider which medium for instructions is most useful for the purpose at hand.

Second, there is a need to know the target user group. The target user group’s experience matters, even in non-related skills like gaming, and training to use the technology itself may be needed.

Mixed Reality (MR)

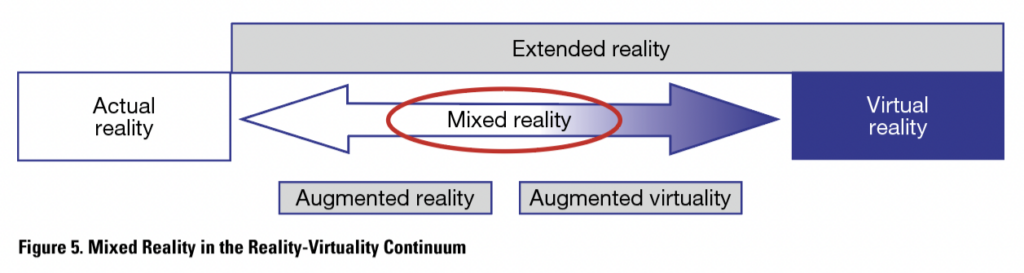

Mixed reality exists between actual reality and virtual reality, as seen in Figure 5. When it is stated that a study or application uses MR, it can mean that the application draws from different types of MR or that the application does not fit directly with either of them.

Schoeb et al. (2020) compared MR-based instructions on performing bladder catheter placement to instructions received from an instructor. They used a video-based approach because, as they said, their system did not have the capability to recognize real objects. Therefore, it did not truly “augment” the environment that interacted with real objects, so they categorized it as a mixed reality system. They stated that in practical, medical, and surgical tasks, training often takes place on the patient. This is because other methods, such as simulators, require so many resources that they are not widely available. Therefore, cost-effective methods are in high demand. In their results, Schoeb et al. (2020) indicated slightly better learning outcomes with an MR-based solution, with a higher degree of consistency. According to Schoeb et al. (2020), the functionality of this technology is an advantage because it does not require teaching personnel, which makes teaching more flexible and cost efficient. However, they found that it would be difficult to use MR widely in everyday teaching because of shortcomings of the hardware and necessary infrastructure. Schoeb et al. (2020) concluded that MR is an efficient tool for instructing bladder catheter placement, but more developmental progress is needed to improve the usability of current MR systems.

Schoeb et al.’s (2020) research also mentioned the technological limitations of using MR technology, including the fact that more interaction, which is one of the central features of XR, could have been helpful. This emphasizes the importance of fully using the features of the medium chosen to deliver technical instructions. When considering some type or types of XR, knowledge of their benefits and deficits is needed.

Augmented Reality (AR)

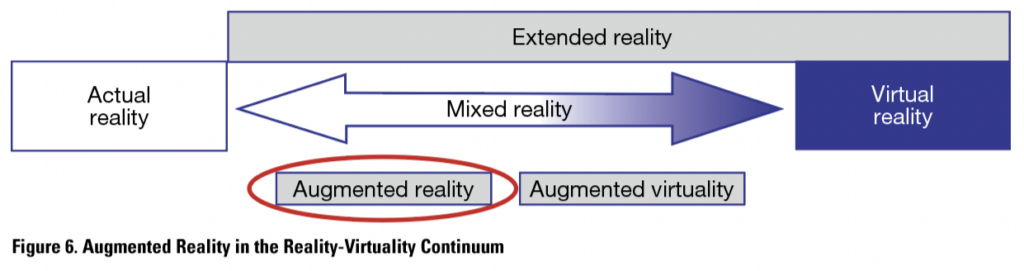

Augmented reality already has a long tradition in delivering technical instructions. In the reality-virtuality continuum, AR is situated closer to actual reality than virtual reality, as shown in Figure 6, because it is based on actual reality augmented with virtual elements.

Chu, Liao, and Lin (2020) studied AR in delivering manual assembly instructions for a complicated task. They focused on Dougong, a remarkable architectural design of ancient China that still plays a vital role in the development of traditional buildings. It is so complex that, according to Chu et al. (2020), people tend to have difficulty understanding and learning it from 2D drawings and paper documentation.

Chu et al. (2020) stated that “assembly instructions, drawings, and operation descriptions are usually presented in a 2D format, or on paper, or both. Such a presentation form fails to precisely demonstrate the spatial relationships among the three-dimensional (3D) components comprising a product, which are critical to its manual assembly” (p. 2). According to Chu et al. (2020), this may increase the assembly time and human errors. Chu et al. (2020) argued that AR applications with their interactive 3D space could solve this problem.

In their study, Chu et al. (2020) proposed two categories of assistive functions: part search and assembly demonstration. Chu et al. (2020) measured assembly time and accuracy, with accuracy as the priority, comparing paper-based instructions, a 3D viewer, and AR-based instructions. After taking a closer look, Chu et al. (2020) discovered that part recognition was slowest with the paper form, and part-fetching took the longest time with the AR system. The AR-based system had the lowest number of assembly errors, but the differences were not statistically significant.

The participants had a relatively lower mental load with paper-based instructions, which, according to Chu et al. (2020), may indicate that people are still accustomed to the paper presentation. Most of the participants considered the part confirmation and assembly demonstration of AR-based instructions highly useful and helpful, but some of them expressed physical fatigue due to placing the parts and assemblies under the camera. According to Chu et al. (2020), participants felt physically and mentally tired by repetitively holding parts under the camera for confirmation purposes.

In this system, the recognition speed was slow, which the participants found frustrating and which resulted in a longer total assembly time. Chu et al. (2020) concluded that the system design of AR-based instructions needs to be improved to reduce the computational time required by object recognition.

Since multiple studies show improvements in assembly instructions using AR, Chu et al.’s (2020) study indicates the importance of having good instructional design and having fluently functioning applications. It also signals that at least in the beginning of using new types of instructions, traditions matter. When people are accustomed to reading certain types of instructions, adopting new kinds of instructions and technology may take time.

Augmented Virtuality (AV)

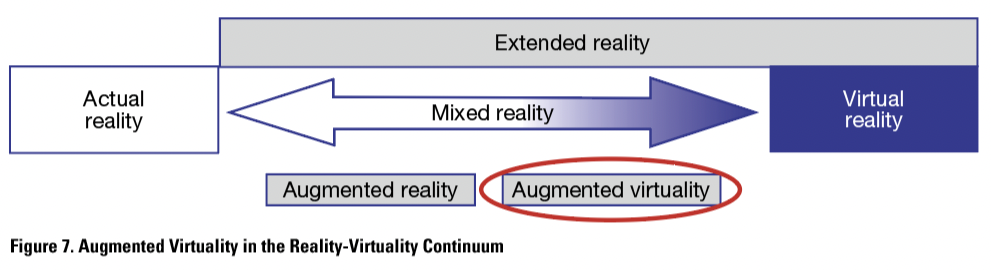

Augmented virtuality is a relatively little used and known type of XR. While augmented reality augments actual reality with virtual elements, augmented virtuality augments virtual reality with real elements. Therefore, in the reality-virtuality continuum, it is situated closer to virtual reality than actual reality, as seen in Figure 7.

Gralak (2020) focused on finding a solution to the greater number of ship collisions caused by increased vessel traffic. According to Gralak (2020), ships are equipped with decision support systems to enhance navigational safety, but these systems “do not provide the navigator with sufficient data for reliable and complete assessment of the ship’s spatial position relative to nearby objects” (Gralak, 2020, p. 2). There is a need for visual observation data, but it may not be feasible, for example, in poor visibility. Therefore, Gralak (2020) aimed to develop an AV-based spatial decision support system to be used in the navigator’s decision-making process.

Gralak (2020) found AV to be more expanded than AR, with more capabilities for this purpose. The main reason for using AV instead of AR was that navigators use visual observation during the decision-making process and, therefore, their view cannot be blocked with virtual elements. Instead, in AV, the “technology provides the observer with a display and interaction in a predominantly or fully virtual world, correlated with reality, which can be enriched with elements of the real environment, presented in a predefined form” (Gralak, 2020, p. 4). The results of this study (Gralak, 2020, p. 15) indicated a statistically significant improvement in the safety of navigation; they also indicated that choosing the right type of XR is important because different tasks and goals have different needs.

These examples of recent research illustrate that XR and its different types are used in many ways in delivering instructions. The studies also highlight that it is crucial to understand the features of the technology chosen for the medium to deliver technical instructions and to fully use its benefits for the task at hand. When the features are not fully understood, the potential benefits can be lost. For these considerations, knowledge of how data is handled in XR is needed.

METHODOLOGY

To identify how XR handles instructional data and collected data, I applied thematic analysis (see, for example, Nowell et al., 2017). I focused on how this subject is discussed in earlier relevant research. According to Nowell et al. (2017), thematic analysis is a relevant qualitative research method that can be used when analyzing large qualitative data sets. Nowell et al. (2017) introduced a pragmatic process for conducting a trustworthy, step-by-step approach to thematic analysis, which I have applied in this research. Based on an extensive literature search, I selected relevant sources for literature-based thematic modeling. I explain the methodological process, conducted in six phases, in detail in the next subsection.

Analysis of Literature

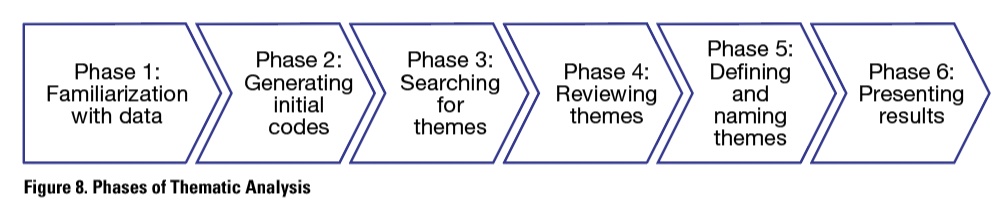

The phases of thematic analysis, according to Nowell et al. (2017), are listed in Figure 8. In this section, I provide details about how I applied these phases of thematic analysis in this study.

Phase 1: Familiarization with data

I became familiar with the data by studying earlier research of XR to gain a comprehensive overview. I searched the literature using Google Scholar and the information-seeking portal Finna (https://finna.fi/Content/about?lng=en-gb), which covers millions of items from hundreds of organizations, such as archives, libraries, and museums.

In this way, I was able to access a wide range of both open access and limited access research. My search words included the types of XR separately (extended reality, mixed reality, virtual reality, augmented reality, and augmented virtuality) and combined with terms such as technical instructions, instructions, technical communication, and maintenance. In addition, I applied a type of snowball sampling by exploring the research found in the references of previous papers.
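The combination of search words described above can be sketched as follows. This is a minimal illustration rather than the procedure actually used: the context list contains only the terms named in the text (which says "such as", so the real list may have been longer), and the exact-phrase quoting and the helper name `build_queries` are assumptions.

```python
from itertools import product

# The five types of XR used as search words.
XR_TYPES = [
    "extended reality",
    "mixed reality",
    "virtual reality",
    "augmented reality",
    "augmented virtuality",
]

# Context terms named in the text; the list is not necessarily exhaustive.
CONTEXT_TERMS = [
    "technical instructions",
    "instructions",
    "technical communication",
    "maintenance",
]


def build_queries(xr_types, context_terms):
    """Return each XR type on its own, then every XR type paired with every context term."""
    queries = [f'"{xr}"' for xr in xr_types]
    queries += [f'"{xr}" "{term}"' for xr, term in product(xr_types, context_terms)]
    return queries


if __name__ == "__main__":
    for query in build_queries(XR_TYPES, CONTEXT_TERMS):
        print(query)
```

With the lists above, this yields the five standalone terms plus twenty pairings.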

I selected 20 papers and four books for closer analysis. The criterion of selection was to enable a broad view of the subject, mostly focusing on, but not limited to, the aspect of XR in mediating technical instructions. However, the focus was not only on earlier and current uses but also on possibilities and challenges that could be relevant to this field.

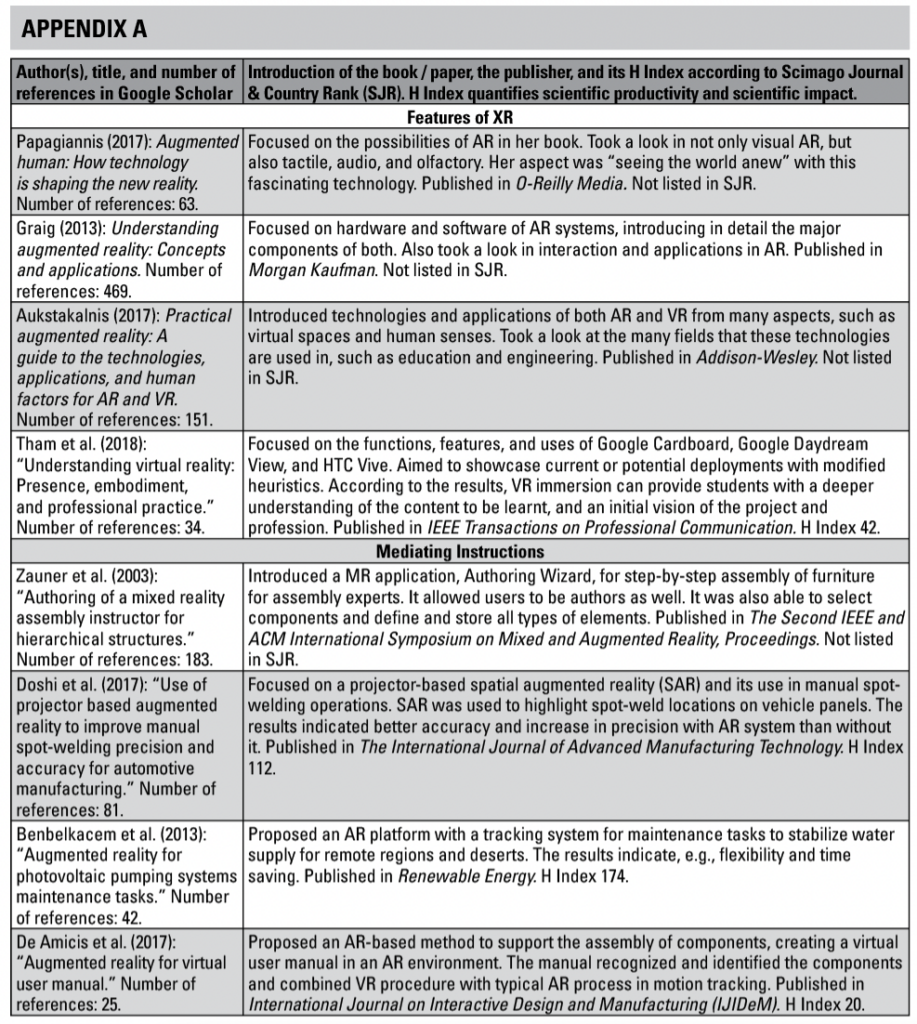

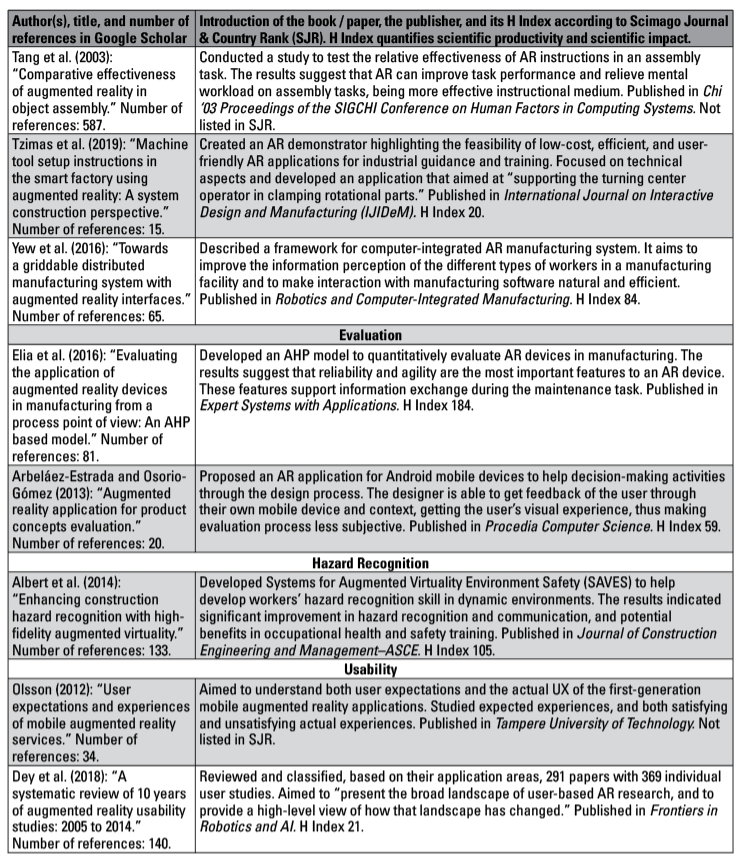

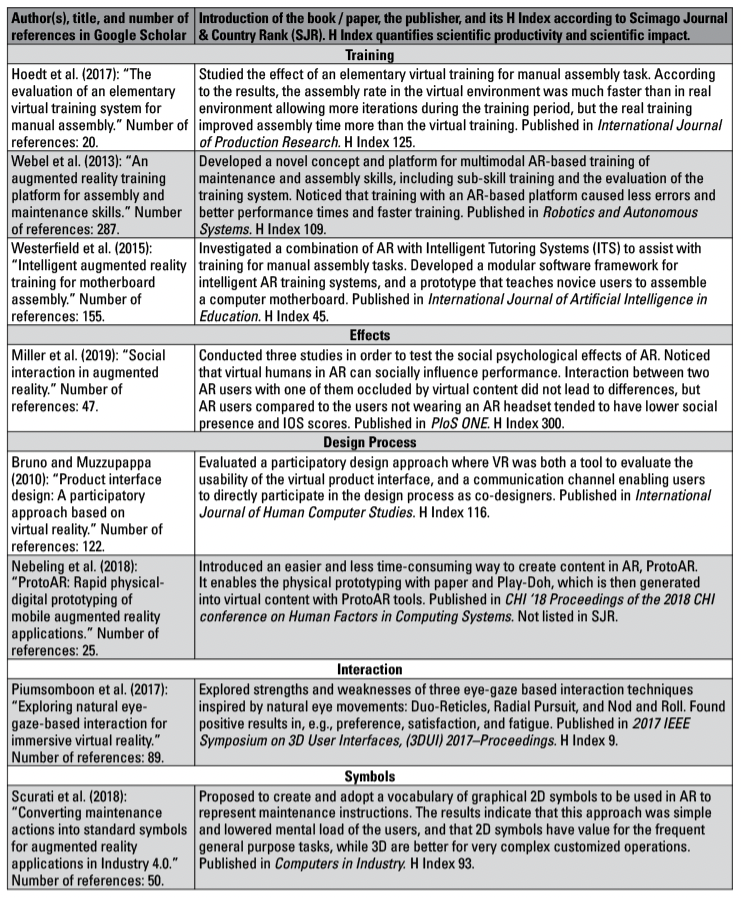

Thus, I included other kinds of sources as well, including sources concerning technology, future possibilities, and collaboration via XR. I excluded, for example, sources that focused strictly on technology at a very deep level and sources covering specific fields such as architecture or entertainment. My rationale for applying these criteria was to map common features of a wide range of XR from different points of view. The sources selected are introduced briefly in Appendix A.

Phase 2: Generating initial codes

In phase 2 of the analysis, I generated initial codes by excerpting large, relevant sections discussing the features, effects, and results of the studies, and placed them in a mind map. Next, I divided these sections into subcategories and organized the same or similar features under the same nodes in the mind map. This is not a typical method for coding, but it let me systematically go through different sources and find the features they repeatedly discussed, possibly with different terms, as is typical in the field of XR. For example, the basic terms, such as mixed reality and augmented reality, are often confused.

Therefore, I needed to focus on greater overarching codes and what they entail, instead of repeated expressions. Moreover, I needed to keep the purpose of the research in mind. For example, there were several codes to be found that focused deeply on technological processes and equipment in detail, such as different types of technological solutions of screens on an XR headset. That kind of aspect, however, leads to a focus on differences instead of common features. Initial codes that I found were, for example, “what kind of data is available” and “how is data expressed.”
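The mind-map organization described in this phase was done by hand, but its underlying structure can be sketched in Python for readers who want to reproduce a similar grouping. Everything here is hypothetical except the two code labels, which are taken from the text: the source names and excerpt strings are placeholders, and `group_by_code` and `recurring_codes` are illustrative helper names.

```python
from collections import defaultdict


def group_by_code(excerpts):
    """Place each (source, code, text) excerpt under the mind-map node for its code."""
    nodes = defaultdict(list)
    for source, code, text in excerpts:
        nodes[code].append((source, text))
    return dict(nodes)


def recurring_codes(nodes, min_sources=2):
    """Return the codes that at least `min_sources` distinct sources discuss."""
    return sorted(
        code
        for code, items in nodes.items()
        if len({source for source, _ in items}) >= min_sources
    )


# Placeholder data: sources and excerpt texts are invented for illustration only.
EXCERPTS = [
    ("Source A", "what kind of data is available", "placeholder excerpt 1"),
    ("Source B", "what kind of data is available", "placeholder excerpt 2"),
    ("Source B", "how is data expressed", "placeholder excerpt 3"),
]

if __name__ == "__main__":
    print(recurring_codes(group_by_code(EXCERPTS)))
```

Counting distinct sources per node, rather than repeated expressions, mirrors the focus on overarching codes that several sources discuss under different terms.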

Phase 3: Searching for themes

In searching for themes from the initial codes, it became evident that the approach to data should be process-based. This is because the data is handled in phases that follow each other. Based on the coding of the data, I found six different phases of data handling in XR: collection, processing, transfer, storage, combining, and presentation of data. I elaborate these phases in detail in the Results section.

Phase 4: Reviewing themes

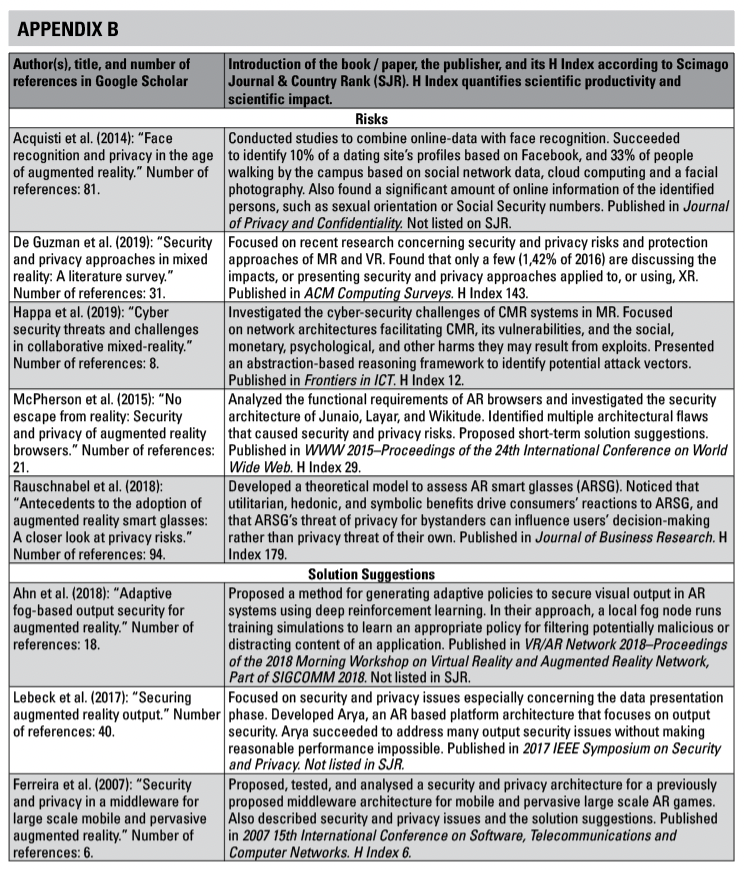

After I had identified the phases of data handling in XR, I needed to check whether they form a coherent pattern. In this case, I needed to make sure that a process-based view can be used to describe how the data is handled in XR and that all potentially relevant phases of XR data handling were found. Therefore, I decided to test the results with other kinds of sources. Since privacy and security risks arose repeatedly during the analysis, I chose research concerning these issues as the test data. For this purpose, I selected 13 papers concerning security and privacy issues generated by XR use and reviewed them to see if the issues arising from them could be fitted into the phases of data handling in XR. These papers are introduced in Appendix B. In this review of the validity of the identified categories, I was able to conclude that the named categories reliably represent the data and are, therefore, useful for this study.

Phase 5: Defining and naming themes and Phase 6: Presenting results

In these phases of the analysis, themes are usually defined, named, and reported. In this research, they were already named in the third phase. I define and report the results in the next section of this paper.

RESULTS

The purpose of this research is to explore and identify the ways in which data is handled when using XR to deliver technical instructions. In particular, it is important to consider the additional requirements in terms of data handling for designers of technical instructions. The analysis of previous research indicates that there are six distinct phases: collection, processing, transfer, storage, combining, and presentation of data, as illustrated in Figure 9.

The phases of XR data handling are sequential. The phase of data transfer may be conducted on several occasions, for example, in order to process or store the data. I discuss each of these phases below in relation to security and privacy issues.
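One way to read this sequence is sketched below in Python. The sketch rests on an interpretation that the text does not state explicitly: the five phases other than transfer each occur once, in the order listed in this section, while transfer may occur any number of times between them. The names `Phase`, `CORE_ORDER`, and `is_valid_trace` are hypothetical.

```python
from enum import Enum


class Phase(Enum):
    """The six phases of XR data handling, in the order listed in this section."""
    COLLECTION = "collection"
    PROCESSING = "processing"
    TRANSFER = "transfer"
    STORAGE = "storage"
    COMBINING = "combining"
    PRESENTATION = "presentation"


# Assumption: every phase except transfer happens once, in this fixed order.
CORE_ORDER = [phase for phase in Phase if phase is not Phase.TRANSFER]


def is_valid_trace(trace):
    """Check a sequence of phases against the assumed ordering.

    Transfer steps are ignored, so they may appear any number of times
    (for example, before processing and again before storage).
    """
    core = [phase for phase in trace if phase is not Phase.TRANSFER]
    return core == CORE_ORDER


if __name__ == "__main__":
    trace = [
        Phase.COLLECTION,
        Phase.TRANSFER,     # e.g., sent elsewhere for processing
        Phase.PROCESSING,
        Phase.TRANSFER,     # e.g., sent on to storage
        Phase.STORAGE,
        Phase.COMBINING,
        Phase.PRESENTATION,
    ]
    print(is_valid_trace(trace))
```

Treating transfer as a repeatable step is a modeling choice for this sketch; a stricter model could also constrain where transfers are allowed.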

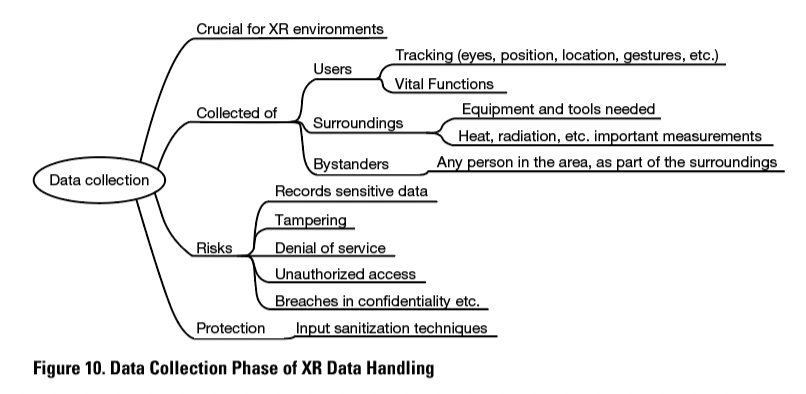

The Collection Phase of XR Data Handling

The first phase of XR data handling is data collection. The data handled in XR includes the instructional data and the collected data. Data collected may be about the user, the surroundings, or bystanders. Figure 10 shows the central aspects of the data collection.

Constant data collection about the users is crucial for XR environments. As Aukstakalnis (2017, p. 196) explained, sensors are a vital enabling technology in XR systems. Their purpose is to track, for example, the user’s location, position, orientation, head and hand movements, gestures, eye-movements, actions, and full body dynamics. Without this kind of tracking, XR would not be able to create a real-like, immersive, and interactive experience where all the virtual objects are in the right place at the right time (see, for example, Piumsomboon et al., 2017, p. 36; Reilly et al., 2014, p. 275; De Guzman, Thilakarathna, & Seneviratne, 2019, pp. 8, 13). The instructional application can, for example, collect data from critical situations in a work environment, such as an increased amount of radiation and rising heat.

However, data collection also opens doors to recording sensitive information, for example, from the user’s home and workplace. Everything the user sees and hears can be captured, recorded, and potentially attacked. De Guzman et al. (2019, p. 6) mentioned emails and chat logs as examples of this kind of sensitive data. As another example, the VR-integrated wearable technology company CleverPoint (https://cleverpoint.pro/) advertises on its website that its technology is designed to “collect and analyze (the) user’s response to VR content,” including a neurofeedback function and biometric sensors. It can register, for example, the anger or anxiety of the user.

De Guzman et al. (2019, pp. 10, 14) stated that the main threats in data collection, mostly caused by the constant scanning, are security threats such as tampering, denial of service, and unauthorized access. Other threats besides the security threats are breaches in confidentiality, linkability, detectability, identifiability, and user content unawareness. Bystanders are a part of the surroundings from the XR equipment’s perspective, and, therefore, they have to be observed as any other object. This means that bystanders’ sensitive data can also be collected, without them possibly even realizing it.

Although there are risks, these risks may be at least partly prevented. There are several ways to protect the data collected. De Guzman et al. (2019, p. 11) suggested, for example, input sanitization techniques, such as “information reduction or partial sanitization, facial information to facial outline only, complete sanitization or blocking, or skeletal information instead of raw hand video capture.”
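As a minimal sketch of what such input sanitization could look like in practice, the following Python example reduces a raw capture to a facial outline and skeletal hand data before an instruction application sees it. The `RawFrame` and `SanitizedFrame` types and the `sanitize` function are hypothetical names introduced only for illustration; they do not come from De Guzman et al. (2019) or from any particular XR platform.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Point = Tuple[float, float]


@dataclass
class RawFrame:
    """Hypothetical raw capture from an XR sensor (illustrative only)."""
    pixels: bytes                # full camera image; may show faces, screens, documents
    face_landmarks: List[Point]  # detailed facial key points
    hand_joints: List[Point]     # skeletal hand joints estimated by the tracker


@dataclass
class SanitizedFrame:
    """The reduced data an instruction application is allowed to receive."""
    face_outline: Optional[Tuple[Point, Point]]  # bounding box corners only
    hand_joints: List[Point]                     # skeleton instead of raw hand video


def bounding_box(points: List[Point]) -> Tuple[Point, Point]:
    """Return the (min corner, max corner) of a set of points."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (min(xs), min(ys)), (max(xs), max(ys))


def sanitize(frame: RawFrame, allow_face_outline: bool = True) -> SanitizedFrame:
    """Partial sanitization: drop raw pixels, reduce the face to an outline.

    With allow_face_outline=False the facial information is blocked completely.
    """
    outline = None
    if allow_face_outline and frame.face_landmarks:
        outline = bounding_box(frame.face_landmarks)
    return SanitizedFrame(face_outline=outline, hand_joints=list(frame.hand_joints))
```

The design choice in this sketch is that the raw image never leaves the sanitization step, so an application that only needs hand positions for an assembly instruction cannot record what else was in view.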

Data collection is the starting point of XR data handling. At the same time, it is the fundamental enabler of all the possibilities of XR and also the basic reason for the privacy and security issues of XR-based technical instructions. The data that must be collected because of the nature of XR is a potential risk for privacy and security; therefore, it needs to be protected.

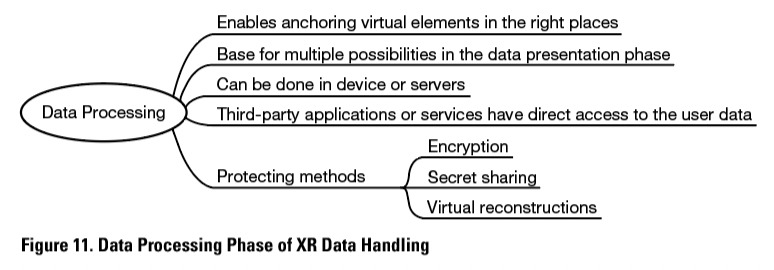

The Processing Phase of XR Data Handling

After the data has been collected, it is then processed. As De Guzman et al. (2019, p. 15) explained, to operate and deliver outputs accurately in real-time, XR equipment also must process the data it keeps collecting. Processing the data is needed to make the data understandable for the XR equipment to create an immersive, real-like, interactive experience. The main points of this data handling phase are seen in Figure 11.

As McPherson, Jana, and Shmatikov (2015, p. 749) stated, processing enables anchoring virtual elements in the right places, which makes it easier to find the right information when it is needed. For example, not only could necessary tools and parts be named, but also pointed out with, for example, an arrow or another symbol. Speed in processing reduces the delay, so that virtual elements appear in the right places, at the right time, when presented to the user.

De Guzman et al. (2019, p. 15) suggested that the same privacy threats of information disclosure, linkability, detectability, and identifiability still hold in the data processing phase as in the data collection phase. According to De Guzman et al. (2019), during the processing, third-party applications or services have direct access to even the most sensitive user data, if no relevant protection is implemented.

Moreover, the data is not always processed on the device. Instead, it can be transmitted to servers for processing. Reasons to outsource the data processing can be, for example, business-related. McPherson et al. (2015, pp. 749–750) mentioned a few examples: interpolating advertisements, charging content providers, and keeping usage statistics.

Designers of technical instructions can try to protect the data during the phase of data processing by using, for example, encryption-based techniques, secret sharing, or virtual reconstructions, as Adams, Bah, and Barwulor (2018) suggested.

One example of encryption-based techniques is homomorphic encryption, where the data is encrypted upon collection, and a third-party service does not need to decrypt it to carry out computations. This is useful for remote data processing if the data processors are not reliable (Adams et al., 2018, pp. 15–16). With secret sharing, the data is split between two or more sources, so that the complete data does not have to exist in any single source. This technique can be used to secure multi-party computation (Adams et al., 2018, p. 16). In the technique of virtual reconstructions, the real capture of an object is replaced with an artificial one, keeping possibly sensitive objects hidden (Adams et al., 2018, p. 17).
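The idea behind secret sharing can be illustrated with a short Python sketch. It uses additive secret sharing, which is one simple scheme among several and is not necessarily the scheme the cited authors had in mind. The function names and the choice of modulus are assumptions made for this example.

```python
import secrets
from typing import List

# Modulus for all share arithmetic (an assumption of this sketch).
MODULUS = 2**61 - 1


def split_secret(value: int, parties: int) -> List[int]:
    """Split value into random shares whose sum modulo MODULUS equals value."""
    if parties < 2:
        raise ValueError("at least two parties are needed")
    if not 0 <= value < MODULUS:
        raise ValueError("value out of range")
    # All shares but the last are uniformly random.
    shares = [secrets.randbelow(MODULUS) for _ in range(parties - 1)]
    # The last share is chosen so that the shares add up to the value.
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def reconstruct(shares: List[int]) -> int:
    """Recover the value; this requires every share."""
    return sum(shares) % MODULUS


def add_shared(a: List[int], b: List[int]) -> List[int]:
    """Each party adds its own two shares locally, without seeing the values."""
    return [(x + y) % MODULUS for x, y in zip(a, b)]
```

For example, two heart-rate readings of 72 and 80 could each be split among three servers. Each server adds its own pair of shares with `add_shared`, and only the combined result, 152, is revealed when the summed shares are reconstructed. No single server holds either reading.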

The phase of data processing creates the basis for multiple possibilities in the data presentation phase. Data processing is essential in XR, but it also includes many privacy and security risks.

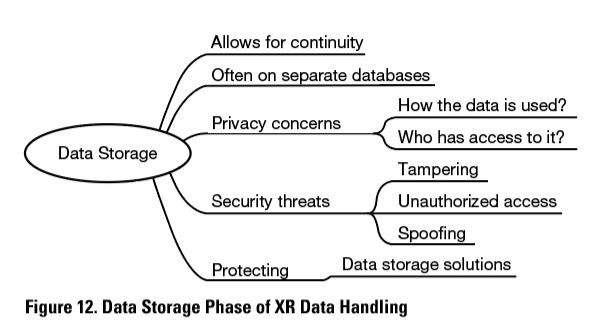

The Storage Phase of XR Data Handling

The collected and processed data may need to be stored. The phase of data storage allows for continuity. The instructions and other relevant data are preserved and do not need to be collected and processed repeatedly.

Data storage keeps the data accessible at all times. This enables useful features, such as the ability to continue teaching a new task from where it was last left off. A fluent instruction process can make the XR-based instructions feel more reliable.

According to Adams et al. (2018, p. 17), the data is often stored by the applications in separate databases. In those cases, the user has minimal or no control over this stored data. The data storage raises privacy concerns about how the data is used and who has access to it. Attackers may obtain access to the stored data, or the users may be tricked into disclosing information to unauthorized parties. De Guzman et al. (2019, p. 17) listed tampering, unauthorized access, and spoofing as examples of security threats related to data storage.

However, there are data storage solutions to help protect the stored data when trustworthiness is not ensured, such as personal data stores. These give the user control over their own data and allow them to decide which applications have access to it (Adams et al., 2018, p. 17).
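The principle of a personal data store, where the user decides which applications may read which data, could be sketched as follows. The `PersonalDataStore` class and its method names are hypothetical and serve only to illustrate the access-control idea; real personal data stores involve authentication, encryption, and auditing that this example leaves out.

```python
from typing import Any, Dict, Set


class PersonalDataStore:
    """Illustrative store in which the user grants access per application."""

    def __init__(self) -> None:
        self._records: Dict[str, Any] = {}     # data category -> stored value
        self._grants: Dict[str, Set[str]] = {}  # application id -> allowed categories

    def put(self, category: str, value: Any) -> None:
        """Store data under a category, for example 'gaze' or 'location'."""
        self._records[category] = value

    def grant(self, app_id: str, category: str) -> None:
        """The user allows one application to read one category."""
        self._grants.setdefault(app_id, set()).add(category)

    def revoke(self, app_id: str, category: str) -> None:
        """The user withdraws a previously given permission."""
        self._grants.get(app_id, set()).discard(category)

    def read(self, app_id: str, category: str) -> Any:
        """Return the data only if the user has granted access to it."""
        if category not in self._grants.get(app_id, set()):
            raise PermissionError(f"{app_id} may not read {category}")
        return self._records[category]
```

In this sketch, access is denied by default: an application that has never been granted a category, or whose grant has been revoked, receives a `PermissionError` instead of the data.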

Planning data storage is an important part of designing XR data handling. Designers of technical instructions can choose to store only relevant data about the users and their surroundings. The designers of technical instructions and the users both can also take measures to protect the data and prevent access from unauthorized parties.

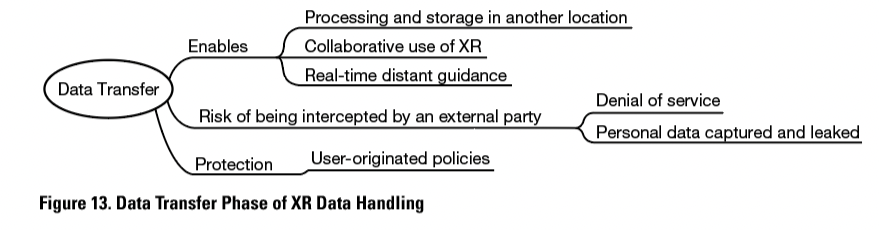

The Transfer Phase of XR Data Handling

Data may be transferred for different purposes, such as for processing, storage, and presentation. The central points of the data transfer phase are illustrated in Figure 13. Collaboration in XR can be seen as transferring data, such as technical instructions, from user to user. This provides an opportunity for personal counseling in complicated tasks.

As Reilly et al. (2014, p. 275) pointed out, MR is a form of shared space: it combines real and digital elements both local and remote. By merging real and virtual worlds, it creates an interactive and real-time environment. In a similar vein, VR may be used for collaborative purposes as well. Papagiannis (2017, pp. 84–85) emphasized that shared visual spaces allow participants to show data instead of just telling others about the data.

Transferring data to the users is important because it gives them access to the instructions in practice. If the users work in remote locations, it is important to make sure that at least the most vital instructions can be accessed offline. This way, the instructions do not become useless if the network connection fails. Keeping vital data available offline and minimizing the negative effects of possible interruptions in data transfer increases the trustworthiness of XR-based technical instructions.
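One way to keep vital instructions available offline is to preload them and fall back to the local copy when the connection fails. The following Python sketch assumes a hypothetical `fetch_remote` function that raises `ConnectionError` or `TimeoutError` when the network is unavailable. The class and parameter names are illustrative and not taken from any XR toolkit.

```python
from typing import Callable, Dict, Set


class InstructionSource:
    """Serves instruction steps, falling back to an offline copy of vital steps."""

    def __init__(self, fetch_remote: Callable[[str], str], vital_steps: Set[str]) -> None:
        self._fetch_remote = fetch_remote     # hypothetical network call
        self._vital_steps = set(vital_steps)  # steps that must survive an outage
        self._offline_copy: Dict[str, str] = {}

    def preload(self) -> None:
        """Download every vital step while the connection is still available."""
        for step_id in self._vital_steps:
            self._offline_copy[step_id] = self._fetch_remote(step_id)

    def get(self, step_id: str) -> str:
        """Return the newest version if possible, otherwise the offline copy."""
        try:
            text = self._fetch_remote(step_id)
        except (ConnectionError, TimeoutError):
            # Connection lost: use the offline copy if this step was preloaded.
            if step_id in self._offline_copy:
                return self._offline_copy[step_id]
            raise
        if step_id in self._vital_steps:
            self._offline_copy[step_id] = text  # keep the offline copy fresh
        return text
```

Only the steps marked as vital are kept locally, which limits how much instructional data is stored on the device while still covering the situations in which a missing instruction would be most harmful.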

Data transfer works in two directions: to the user and from the user. Securing data transfer is important because there is always the possibility that data transfer will be intercepted by an external party. Disruption of data transmission can, at worst, be fatal if it happens during a dangerous work situation. Even minor deficiencies can lead to personal or business-related data falling into the wrong hands or to a third party adding inappropriate content to the instructions.

For example, in shared spaces an adversarial user could “tamper, spoof, or repudiate malicious actions,” causing legitimate users denial of service or having their personal data captured and leaked (Adams et al., 2018, p. 21). McPherson et al. (2015, p. 750) stated that the users usually have no way to find out which data is sent to servers. This could reduce users’ reliance on XR as a mediator of technical instructions. Keeping users informed of what data is transferred and to whom could improve users’ trust and reliance on applications.

One important set of tools for data transfer protection is user-originated policies, such as Emmi (Environmental Management for Multi-user Information environments), which allow users to hide specific virtual objects in a collaborative environment, or Kinected Conference, which enables gesture-based requests for temporary private sessions during collaborative actions. Another example is feed-through signaling, such as SecSpace, which allows “a more natural approach to user management of privacy in a collaborative MR environment” (De Guzman et al., 2019, p. 21).
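The general idea of a user-originated policy, in which the owner of a virtual object decides who may see it in a shared space, could be sketched as below. This example is only loosely inspired by the tools named above and does not reproduce the actual interface of Emmi, Kinected Conference, or SecSpace. All names in it are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class VirtualObject:
    """A virtual element placed in a shared XR space (illustrative only)."""
    object_id: str
    owner: str
    hidden_from: Set[str] = field(default_factory=set)


def hide(obj: VirtualObject, requester: str, viewer: str) -> None:
    """Let the owner, and only the owner, hide an object from one viewer."""
    if requester != obj.owner:
        raise PermissionError("only the owner can change visibility")
    obj.hidden_from.add(viewer)


def visible_objects(scene: List[VirtualObject], viewer: str) -> List[VirtualObject]:
    """Return the objects that should be rendered for this viewer."""
    return [obj for obj in scene if viewer not in obj.hidden_from]
```

For instance, a technician could share an assembly diagram with a remote expert while hiding a panel of customer details from that expert. The filtering happens before anything is rendered or transferred to the other user.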

Data transfer enables the processing and storage of the data in another location. It also offers a chance for collaborative use of XR, and it is vital for real-time distant guidance in work situations. Real-time transfer in both directions makes it more difficult to protect sensitive data, since the other users see, may capture, and perhaps even save everything. The data is vulnerable to being captured by a third party during the transfer.

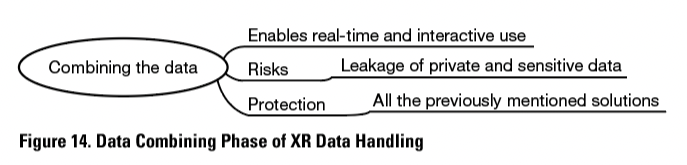

The Combining Phase of XR Data Handling

By combining data from the user’s environment, location, and movements, instructions can assist in a real-time and interactive manner. Roesner, Kohno, and Molnar (2014, pp. 95–96) stated that by combining several input and sensory devices, future systems could, for example, detect privacy or security conditions that the user should be warned about, such as camera lenses or a laser microphone pointed at them or physical deception attempts like card-skimming devices on an ATM. This phase is introduced in Figure 14.

Combining data from technical instructions with a work environmental view enables access to interactive instructions. This is useful especially in complicated tasks when the users need the instructions shown instead of spoken or written to increase the possibility of understanding. With XR, the users can also be informed of security, privacy, and safety risks in the environment, and be guided to solve these situations. These benefits can add to user safety and evoke a more positive attitude toward XR-based instructions.

Combining data from different sources can lead to leakage of private and sensitive data (see, for example, Acquisti, Gross, & Stutzman, 2014, pp. 13–14). All the previously mentioned solutions and suggestions for privacy and security challenges may have a positive effect on the data-combining challenge. For example, denying unauthorized access to collected and stored data protects it from being captured and combined with data from other sources.

Data combining enables and supports some of the most significant possibilities that XR can offer, yet at the same time, risks are posed by its ability to combine sensitive data to achieve more knowledge about the user, an organization, or a bystander. Protection methods in the previous XR data handling phases decrease risks of data-combining by hostile parties.

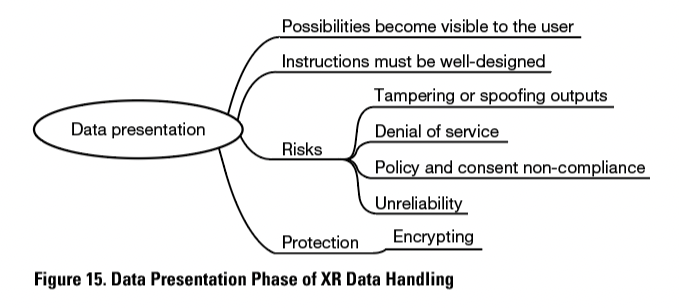

The Presentation Phase of XR Data Handling

Finally, the data is presented to the user. The data can include sensory information, such as visual, audio, tactile, and olfactory (Happa, Glencross, & Steed, 2019, p. 11). In this phase, the multiple possibilities enabled by data collection, processing, and combining become visible to the user. Features of XR can, in many cases, increase user safety remarkably. Albert et al. (2014, p. 9) highlighted that workers are often unable to recognize hazards, and hence are exposed to safety risks. They studied enhancing construction hazard recognition with high-fidelity augmented virtuality and found that “the intervention significantly improved hazard recognition and communication” (Albert et al., 2014, p. 9). The data presentation phase is introduced in Figure 15.

Presentation of the data makes accessing it easier, for example, by showing vital instruction data directly to the users when the situation requires that data, allowing users to have easy access to situation-related alarms and warnings. Well-presented data is easy to find and notice in the situations the users operate in.

Aukstakalnis (2017, p. 332) stated that 80%–85% of human perception, learning, cognition, and activities are mediated through vision. Using unambiguous symbols, focusing on visual elements that are, if needed, supported with auditory and tactile elements, can also increase the probability of understanding the instructions. In reliable instructions, the presentation is at all times, or at least almost all times, functional and uninterrupted.

Badly designed, malicious, or bug-ridden applications can compromise user security. For example, the application can require too much of the user’s attention, causing distractions, or it can block crucial objects of the environment, such as road-signs, from being noticed (Ahn et al., 2018, pp. 1–2). Lebeck, Ruth, Kohno, and Roesner (2017, p. 322) mentioned, as an example, that the AR game Pokémon Go captured the attention of its users to the extent that the game posed recurring safety risks in traffic, and even physiological damage can be caused to the user by “sensory overload, caused by flashing visuals, shrill audio, or intense haptic feedback signals.” De Guzman et al. (2019, p. 18) stated that user safety can be compromised by tampering or spoofing outputs because of the loose output access control of current ‘reality’ systems. Compromised reliability can result in threats, for example, denial of service, and policy and consent non-compliance. If an untrusted or malicious application obtains access to other outputs, it can potentially modify those outputs, making them unreliable, or overlay content (De Guzman et al., 2019, p. 7; McPherson et al., 2015, p. 750). A well-functioning presentation can make the instructions seem even more trustworthy while tampered output can reduce the probability of relying on the data.

There are several ways to protect the data in the data presentation phase, such as encrypting the content so that it can only be decrypted by the XR systems of the intended recipients. Extended reality also offers some improvements in privacy compared to other forms of instruction. For example, shoulder surfers and rubber-neckers are blocked from seeing the password, since, as Roesner et al. (2014, pp. 95–96) noted, applications are visible to the users’ eyes only.

Data presentation is the visible part of technical instructions to the user. Well-functioning instructions give the needed data at the right time but do not flood the user with unimportant data, blocking their view or demanding attention.

CONCLUSION

In this article, I have explained how extended reality handles instructional data and collected data to increase knowledge needed for designing XR-based technical instructions. I also identified the phases in which the data is handled. These phases are collection, processing, storage, transfer, combining, and presentation.

The purpose of identifying these phases of data handling in XR is to help technical communicators understand what happens to the data with the XR technology and how it is different from other media for delivering instructions. The issues of safety and privacy were discussed in detail because they are a new challenge in XR-based technical instructions, compared to other formats.

This study has some limitations. First, it offers only one way to look at XR as a medium to deliver technical instructions. There are other possible aspects as well, such as comparing technological benefits and requirements of different types of XR. Second, since the focus in this study is on general and common features of XR, it excludes focusing on possible differences in the phases of XR data handling of different types of XR.

Many privacy and security risks can be minimized by taking actions in the early stages. There are signs that privacy and security issues will increase in the future when the technology becomes more ubiquitous and widespread, and these issues may be exacerbated with artificial intelligence or data mining. Thus, more research is needed on the issues of privacy and security.

Further research is also needed to take a closer look at how these phases of XR data handling affect designing technical instructions. Furthermore, more research on comparing features of different types of XR is needed to help technical communicators choose the type or types of XR that would best support delivering their specific technical instructions.

Still, seeing data handling as a process enables a detailed review of XR as a medium to deliver technical instructions, which may help with organizing the design process of XR-based technical instructions.

REFERENCES

Acquisti, A., Gross, R., & Stutzman, F. D. (2014). Face recognition and privacy in the age of augmented reality. Journal of Privacy and Confidentiality, 6(2), 1–20. doi: 10.29012/jpc.v6i2.638

Adams, D., Bah, A., & Barwulor, C. (2018). Ethics emerging: the story of privacy and security perceptions in virtual reality. Proceedings of the Fourteenth Symposium on Usable Privacy and Security. https://www.usenix.org/system/files/conference/soups2018/soups2018-adams.pdf

Ahn, D., Gorlatova, M., Naghizadeh, P., Chiang, M., & Mittal, P. (2018). Adaptive fog-based output security for augmented reality. VR/AR Network 2018–Proceedings of the 2018 Morning Workshop on Virtual Reality and Augmented Reality Network, Part of SIGCOMM 2018, Association for Computing Machinery, Inc., 1–6. doi: 10.1145/3229625.3229626

Albert, A., Hallowell, M. R., Kleiner, B., Chen, A., & Golparvar-Fard, M. (2014). Enhancing construction hazard recognition with high-fidelity augmented virtuality. Journal of Construction Engineering and Management, 140(7). doi: 10.1061/(ASCE)CO.1943-7862.0000860

Arbeláez-Estrada, J. C., & Osorio-Gómez, G. (2013). Augmented reality application for product concepts evaluation. Procedia Computer Science, 25, 389–398. doi: 10.1016/j.procs.2013.11.048

Aukstakalnis, S. (2017). Practical augmented reality: A guide to the technologies, applications, and human factors for AR and VR. Boston: Addison-Wesley.

Azuma, R. (1997). A survey of augmented reality. Presence: Teleoperators and Virtual Environments, 6(4), 335–385. https://www.cs.unc.edu/~azuma/ARpresence.pdf

Benbelkacem, S., Belhocine, M., Bellarbi, A., Zenati-Henda, N., & Tadjine, M. (2013). Augmented reality for photovoltaic pumping systems maintenance tasks. Renewable Energy, 55, 428–437. doi: 10.1016/j.renene.2012.12.043

Bruno, F., & Muzzupappa, M. (2010). Product interface design: A participatory approach based on virtual reality. International Journal of Human-Computer Studies, 68(5), 254–269. doi: 10.1016/j.ijhcs.2009.12.004

Burova, A., Mäkelä, J., Hakulinen, J., Keskinen, T., Heinonen, H., Siltanen, S., & Turunen, M. (2020). Utilizing VR and gaze tracking to develop AR solutions for industrial maintenance. CHI ‘20: Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, 1–13. doi: 10.1145/3313831.3376405

Chu, C-H., Liao, C-J., & Lin, S-C. (2020). Comparing augmented reality-assisted assembly functions – A case study on Dougong Structure. Applied Sciences, 10(10), 3383. doi: 10.3390/app10103383

De Amicis, R., Ceruti, A., Francia, D., Frizziero, L., & Simões, B. (2017). Augmented reality for virtual user manual. International Journal on Interactive Design and Manufacturing (IJIDeM), 12(4), 689–697. doi: 10.1007/s12008-017-0451-7

De Guzman, J. A., Thilakarathna, K., & Seneviratne, A. (2019). Security and privacy approaches in mixed reality: A literature survey. ACM Computing Surveys, 52(6), 1–37. doi: 10.1145/3359626

Denning, T., Dehlawi, Z., & Kohno, T. (2014). In situ with bystanders of augmented reality glasses: Perspectives on recording and privacy-mediating technologies. CHI’14: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, 2377–2386. doi: 10.1145/2556288.2557352

Dey, A., Billinghurst, M., Lindeman, R. W., & Swan, J. E. (2018). A systematic review of 10 years of augmented reality usability studies: 2005 to 2014. Frontiers in Robotics and AI, 5(37). doi: 10.3389/frobt.2018.00037

Doshi, A., Smith, R. T., Thomas, B. H., & Bouras, C. (2017). Use of projector based augmented reality to improve manual spot-welding precision and accuracy for automotive manufacturing. The International Journal of Advanced Manufacturing Technology, 89(5–8), 1279–1293. doi: 10.1007/s00170-016-9164-5

Elia, V., Gnoni, M. G., & Lanzilotto, A. (2016). Evaluating the application of augmented reality devices in manufacturing from a process point of view: An AHP based model. Expert Systems with Applications, 63(C), 187–197. doi: 10.1016/j.eswa.2016.07.006

Fast-Berglund, Å., Gong, L., & Li, D. (2018). Testing and validating extended reality (XR) technologies in manufacturing. Procedia Manufacturing, 25, 31–38. doi: 10.1016/j.promfg.2018.06.054

Ferreira, P., Orvalho, J., & Boavida, F. (2007). Security and privacy in a middleware for large scale mobile and pervasive augmented reality. 2007 15th International Conference on Software, Telecommunications and Computer Networks, 1–5. doi: 10.1109/SOFTCOM.2007.4446125

Glaser, B. G., & Strauss, A. L. (1968). The discovery of grounded theory: Strategies for qualitative research. Nursing Research, 17(4), 364. doi: 10.1097/00006199-196807000-00014

Craig, A. B. (2013). Understanding augmented reality: Concepts and applications. Morgan Kaufmann.

Gralak, R. (2020). A method of navigational information display using augmented virtuality. Journal of Marine Science and Engineering, 8(4), 237. doi: 10.3390/jmse8040237

Happa, J., Glencross, M., & Steed, A. (2019). Cyber security threats and challenges in collaborative mixed-reality. Frontiers in ICT, 6(5). doi: 10.3389/fict.2019.00005

Hoedt, S., Clayes, A., Van Landeghem, H., & Cottyn, J. (2017). The evaluation of an elementary virtual training system for manual assembly. International Journal of Production Research, 55(24), 7496–7508. doi: 10.1080/00207543.2017.1374572

Lebeck, K., Ruth, K., Kohno, T., & Roesner, F. (2017). Securing augmented reality output. 2017 IEEE Symposium on Security and Privacy (SP), 320–337. doi: 10.1109/SP.2017.13

Lukosch, S., Lukosch, H., Datcu, D., & Cidota, M. (2015). Providing information on the spot: Using augmented reality for situational awareness in the security domain. Computer Supported Cooperative Work, 24(6), 613–664. doi: 10.1007/s10606-015-9235-4

McPherson, R., Jana, S., & Shmatikov, V. (2015). No escape from reality: Security and privacy of augmented reality browsers. WWW ‘15 Proceedings of the 24th International Conference on World Wide Web, 743–753. doi: 10.1145/2736277.2741657

Milgram, P., & Kishino, F. (1994). A taxonomy of mixed reality visual displays. IEICE Transactions on Information and Systems, E77-D(12), 1321–1329. https://search.ieice.org/bin/summary.php?id=e77-d_12_1321

Miller, M. R., Jun, H., Herrera, F., Villa, J. Y., Welch, G., & Bailenson, J. N. (2019). Social interaction in augmented reality. PLoS ONE, 14(5), 1–26. doi: 10.1371/journal.pone.0216290

Nebeling, M., Nebeling, J., Yu, A., & Ruble, R. (2018). ProtoAR: Rapid physical-digital prototyping of mobile augmented reality applications. CHI ’18 Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, 353, 1–12. doi: 10.1145/3173574.3173927

Nowell, L. S., Norris, J. M., White, D. E., & Moules, N. J. (2017). Thematic analysis: Striving to meet the trustworthiness criteria. International Journal of Qualitative Methods, 16(1), 1–13. doi: 10.1177/1609406917733847

Olsson, T. (2012). User expectations and experiences of mobile augmented reality services. [D.Sc. (Tech.) dissertation]. Tampere University of Technology. Retrieved from trepo.tuni.fi/bitstream/handle/10024/114370/olsson.pdf?sequence=1&isAllowed=y

Papagiannis, H. (2017). Augmented human: How technology is shaping the new reality. O’Reilly Media.

Piumsomboon, T., Lee, G., Lindeman, R. W., & Billinghurst, M. (2017). Exploring natural eye-gaze-based interaction for immersive virtual reality. IEEE Symposium on 3D User Interfaces, 36–39. doi: 10.1109/3DUI.2017.7893315

Porter, C. D., Smith, J. R. H., Stagar, E. M., Simmons, A., Nieberding, M., Orban, C. M., Brown, J., & Ayers, A. (2020). Using virtual reality in electrostatics instruction: The impact of training. Physical Review Physics Education Research, 16(2). doi: 10.1103/PhysRevPhysEducRes.16.020119

Rauschnabel, P. A., He, J., & Ro, Y. K. (2018). Antecedents to the adoption of augmented reality smart glasses: A closer look at privacy risks. Journal of Business Research, 92, 374–384. doi: 10.1016/j.jbusres.2018.08.008

Reilly, D., Salimian, M., MacKay, B., Mathiasen, N., Edwards, W. K., & Franz, J. (2014). SecSpace: prototyping usable privacy and security for mixed reality collaborative environments. EICS ‘14: Proceedings of the 2014 ACM SIGCHI symposium on Engineering interactive computing systems, 273–282. doi: 10.1145/2607023.2607039

Roesner, F., Kohno, T., & Molnar, D. (2014). Security and privacy for augmented reality systems. Communications of the ACM, 57(4), 88–96. doi: 10.1145/2580723.2580730

Schoeb, D. S., Schwartz, J., Hein, S., Schlager, D., Pohlmann, P. F., Frandenschmidt, A., Gratzke, C., & Miernik, A. (2020). Mixed reality for teaching catheter placement to medical students: A randomized single-blinded, prospective trial. BMC Medical Education, 20(510). https://bmcmededuc.biomedcentral.com/articles/10.1186/s12909-020-02450-5

Scurati, G. W., Gattullo, M., Fiorentino, M., Ferise, F., Bordegoni, M., & Uva, A. E. (2018). Converting maintenance actions into standard symbols for augmented reality applications in industry 4.0. Computers in Industry, 98, 68–79. doi: 10.1016/j.compind.2018.02.001

Tang, A., Owen, C., Biocca, F., & Mou, W. (2003). Comparative effectiveness of augmented reality in object assembly. Chi ‘03 Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, 73–80. doi: 10.1145/642611.642626

Tham, J., Duin, A. H., Gee, L., Ernst, N., Abdelqader, B., & McGrath, M. (2018). Understanding virtual reality: Presence, embodiment, and professional practice. IEEE Transactions on Professional Communication, 61(2), 178–195. doi: 10.1109/TPC.2018.2804238

Tzimas, E., Vosniakos, G-C., & Matsas, E. (2019). Machine tool setup instructions in the smart factory using augmented reality: A system construction perspective. International Journal on Interactive Design and Manufacturing, 13(1), 121–136. doi: 10.1007/s12008-018-0470-z

Webel, S., Bockholt, U., Engelke, T., Gavish, N., Olbrich, M., & Preusche, C. (2013). An augmented reality training platform for assembly and maintenance skills. Robotics and Autonomous Systems, 61(4), 398–403. doi: 10.1016/j.robot.2012.09.013

Westerfield, G., Mitrovic, A., & Billinghurst, M. (2015). Intelligent augmented reality training for motherboard assembly. International Journal of Artificial Intelligence in Education, 25(1), 157–172. doi: 10.1007/s40593-014-0032-x

Yew, A. W. W., Ong, S. K., & Nee, A. Y. C. (2016). Towards a griddable distributed manufacturing system with augmented reality interfaces. Robotics and Computer-Integrated Manufacturing, 39, 43–55. doi: 10.1016/j.rcim.2015.12.002

Zauner, J., Haller, M., Brandl, A., & Hartman, W. (2003). Authoring of a mixed reality assembly instructor for hierarchical structures. The Second IEEE and ACM International Symposium on Mixed and Augmented Reality, 2003. Proceedings, 237–246. doi: 10.1109/ISMAR.2003.1240707

ABOUT THE AUTHOR

Satu Rantakokko, MA, is a doctoral student in Communication Studies at the University of Vaasa. Her research interests include technical instructions, extended reality, privacy and security issues, and affordances. Her dissertation focuses on the benefits and deficits of extended reality as a medium to deliver technical instructions. She currently continues her research with a grant by South Ostrobothnia Regional Fund. She is available at satu.rantakokko@student.uwasa.fi.