doi: https://doi.org/10.55177/tc148396

By Daniel Hocutt, Nupoor Ranade, and Gustav Verhulsdonck

ABSTRACT

Purpose: This study demonstrates that microcontent, a snippet of personalized content that responds to users’ needs, is a form of localization reliant on a content ecology. In contributing to users’ localized experiences, technical communicators should recognize their work as part of an assemblage in which users, content, and metrics augment each other to produce personalized content that can be consumed by and delivered through artificial intelligence (AI)-assisted technology.

Method: We use an exploratory case study on an AI-driven chatbot to demonstrate the assemblage of user, content, metrics, and AI. By understanding assemblage roles and function of different units used to build AI systems, technical and professional communicators can contribute to microcontent development. We define microcontent as a localized form of content deployed by AI and quickly consumed by a human user through online interfaces.

Results: We identify five insertion points where technical communicators can participate in localizing content:

- Creating structured content for bots to better meet user needs

- Training corpora for bots with data-informed user personas that can better address specific needs of user groups

- Developing chatbot user interfaces that are more responsive to user needs

- Developing effective human-in-the-loop approaches by moderating content for refining future human-chatbot interactions

- Creating more ethically and user-centered data practices with different stakeholders.

Conclusion: Technical communicators should teach, research, and practice competencies and skills to advocate for localized users in assemblages of user, content, metrics, and AI.

KEYWORDS: Localization, Chatbot, Assemblage, Case study, Content ecology

Practitioner’s Takeaway

- Technical communicators can create structured content and information architecture that are easily accessible to and understood by chatbots.

- Technical communicators can develop stronger, data-informed user personas to create more effectively localized chatbots.

- Technical communicators can contribute to the user-focused interface design of chatbots and can moderate chatbot transcripts in localized context and recommend machine-learning program edits in response to poor user experience.

- Technical communicators can help to gather and deploy data ethically from technologies and stakeholders to develop more intelligent content for chatbots.

INTRODUCTION

Current content practices in technical and professional communication (TPC) are impacted by shifting user preferences for personalization and contexts of use when interacting with content. Users want content localized to them in the right time, place, and preferred device and personalized to them (Verhulsdonck et al., 2021). At the same time, technologies such as data analytics and artificial intelligence (AI) make it possible to understand and respond to users’ needs by providing them with personalized content (Hocutt & Ranade, 2019; Ranade, 2019; Verhulsdonck et al., 2021). Such personalized content, sensitive to the user’s location and preferences, is an important factor in localization. For this reason, TPC and user experience (UX) researchers are starting to look at how microcontent—a form of personalized content that pushes out snippets of information tailored to user queries (Loranger & Nielsen, 2017; McConnell, 2019)—helps in localization and in what TPC researchers can contribute to the process of creating and disseminating microcontent. To extend their work and to describe localized content development practices (especially for AI applications), this article presents an exploratory case study of a context-aware chatbot, Meena, using the critical lens of assemblage theory.

Localized content draws not only from users’ needs but also from other characteristics such as location, demographics, and prior information-seeking behavior. For example, people can locate a restaurant that is near their current location; microcontent (information about that restaurant) is identified based on that user’s location and preferences using data analytics and machine learning (ML: to develop a semantic understanding of user needs at that moment). Likewise, chatbots use Natural Language Processing (NLP) combined with ML to gauge the intent of what a user needs, formulate a response, and deliver microcontent tailored to the user. Localization is guided by the exchange between varying processes like data analysis, geolocation, ML, and NLP.

Such microcontent is guided by a solid understanding of the user and by pairing that understanding with content that performs well (based on its metrics) and is machine readable so the content can be deployed by AI in various contexts. Hence, rather than see content as written solely by technical communicators, work in TPC is part of a content ecology and, for localization purposes, requires our field’s attention.

With that goal in mind, this article is organized in four sections. The first section explores the understanding of localization and argues that it is influenced by factors such as user, content, metrics, and AI. The second section uses assemblage theory to argue that user, content, metrics, and AI combine to form a dynamic, emergent agency to which technical communicators contribute, especially in AI applications. The third section explores an AI-driven chatbot, Meena, to demonstrate the activity of an assemblage. The fourth section draws specific takeaways for TPC practitioners and researchers on ways to participate in developing AI applications for localization.

LITERATURE REVIEW

Recent work has sought to localize usability through methods that engage users directly in iterative processes of research, design, testing, and deployment. Ethical participatory strategies engage users directly in research design (Rose & Cardinal, 2018; Zachry & Spyridakis, 2016). Researchers (Rose & Walton, 2018; Walton et al., 2019) noted our field’s need to address social justice through critical technical communication (TC) practices that localize and foreground user issues. Our field has published limited research to address how technologies impact localization of users (Hocutt, 2018; Ranade, 2020; Verhulsdonck, 2018). Such localization relies on metrics, cookies, tracking, geographic position system (GPS) signals, etc. that help create semantic understanding for AI-driven interaction through, for instance, digital voice assistants and chatbots that react in a sensitive manner to immediate localized user needs (e.g., Beck, 2015; Ranade & Catà, 2021). The development of user-sensitive localization has led to “locally sensitive searches with other intent signals” (Hartman, 2020, p. 53) where users have come to expect personalization. We argue such localized agency is part of a content ecology where user, content, metrics (among which are tracking and GPS), and AI work together to create new forms of localization that our field should be studying.

User Focus in Localization

In 2013, Agboka redefined localization as a “user-driven approach, in which a user (an individual or the local community) identifies a need and works with the designer or developer to develop a mutually beneficial product that mirrors the sociocultural, economic, linguistic, and legal needs of the user” (p. 44). To understand users’ perspectives, TC research, including social-justice work in TPC (Jones, 2016), proposes several methods, many that focus on collaborative community activities. Examples include the 3Ps framework of positionality, privilege, and power that pushes researchers to examine their own positionality and enactment of power and agency in a reflexive manner (Walton et al., 2019); participatory-action research that builds alliances between researchers and participants in planning, implementation, and dissemination of the research process (Agboka, 2013; Zachry & Spyridakis, 2016); decolonial approaches that offer a humanistic heuristic for researchers and practitioners to insert themselves into research (Haas, 2012); and research projects that benefit them (Rose & Cardinal, 2018). Such collaborative approaches provide important user data to move knowledge development forward.

Technological infrastructure used for collaboration activities is a crucial, yet under-studied, aspect of the data creation process. We believe that analyzing such infrastructural systems that drive localization can provide a new approach to examine AI-augmented microcontent through a social-justice lens. We explore chatbots as an important example of AI infrastructure to demonstrate users’ participation as well as the resulting localization.

THEORETICAL FRAMEWORK

The lack of research about microcontent development in the field of writing and TC has led to several challenges. First, the information design of customizable content delivery tools that use data remains absent from TC work. Second, user voice and user agency are not adequately considered in microcontent, especially for underrepresented groups. Third, the effects of the process of developing such tools on organizational structures are ignored. In fact, the last challenge can be a cause for the first two. Data-based microcontent delivery platforms can be seen as a product of an assemblage, which conducts user analysis but creates a different type of localization driven by users, AI, content, and tracking and GPS metrics.

Our approach to localization is informed by distinguishing how users, content, metrics, and AI form an assemblage (Deleuze & Guattari, 1980/1987; see also Bennett, 2010; Bryant, 2014; Latour, 2005). Because these interrelationships can be traced but produce different forms of agency, our approach is to see these interactions from a post-digital perspective that blurs the line between human and non-human actors and situates them in post-human rhetorical contexts (e.g., Johnson-Eilola & Selber, 2022; Moore & Richards, 2018). We see agency as created by technological and non-technological exchanges (addressed by Miller, 2007). Such an approach positions agency as a meaning-making activity, like a speech act (Bazerman, 2004) that is exhibited in the intermixing of user actions, content creation through AI, metrics, and engaging with an interface in a particular location. This approach to agency articulates localization for contexts where ML takes place and technical communicators must negotiate shifting technological contexts as part of a continuously emerging assemblage.

Assemblages

The concept of an assemblage represents emergent agency produced by disparate human, technical, and systemic actors. An assemblage is a self-organizing ecosystem of actors whose combined activity perform agentive acts. In other words, an assemblage is a coalition of actors assembled to accomplish an act of meaning making that dis-assembles on completion of that act. Such an assemblage also functions to create new forms of localization.

An example that illustrates assemblage agency: A person uses a smartphone’s search engine to ask for restaurant suggestions. In response, the smartphone displays a sorted list of nearby restaurants, ranked by rating and distance from the user’s location along with reviews, photos, and maps to aid decision-making. The sorted list emerges from assemblage activity across a network of human, technical, and systemic actors. Its emergent activity dissipates in the results that the search pulls, although the actors that contributed to the search engine results page (SERP) remain in kinetic stasis (see Miller, 2007), awaiting the next call-to-action by another user. Such an assemblage uses ML and helps to understand the users in relation to their activities and location and helps inform the next action. For example, the user might pick a restaurant that is less busy or one that has better reviews using location data parsed from user visits by time of day collated with restaurant reviews.

In this example, we can envision user, content, metrics, and AI as actors in assemblage agency (Hocutt, 2017, 2018) producing localization. Users are known through geolocation, browsing habits, search history, and smartphone-tracked habits as part of personal profiles or a digital twin (Fuller et al., 2020), which allows companies to make inferences and provide personalized results. Metrics about users—as timestamps, websites visited, and apps used—also make up the digital twin that is stored in the networked servers of corporate entities like Google, Facebook, Apple, Twitter, and Yelp. AI, in the form of ML algorithms with access to saved metrics from the user’s past and current activity, is deployed through search to the user’s current query and to indexed content. That content—which appears on the smartphone display in the form of restaurant listings, maps, photos, reviews, and ratings—is deployed in response to this query because AI-driven algorithmic processes match the user profile’s metrics with indexed microcontent and the query to make restaurant recommendations.

Users in the Content Ecology

Users are the first component of the assemblage. We are using the term “users” to represent “human users,” because in an assemblage, other non-human agents may be engaged in meaning-making activities. It is the human users for whom content is localized. Users engage in digital activities that can be monitored and recorded in the form of metrics. Because users are dynamic, their activities are in a continual state of flux to which the other elements of the assemblage must respond. Users generate content via digital tools as “produsers” (Toffler, 1971), as producers and consumers who co-create content and generate interaction data (Bruns, 2006). Users also generate metrics as they consume content. As users engage in daily activities supported by digital tools, their incoming and outgoing (produsing) data streams never end. In the context of assemblages, localization is a continually moving target to which AI is applied to help identify patterns and predict future actions that are then fed back to the user in a localized manner through personalized content.

We do not seek to obfuscate human agency with this portrayal of users or even to (necessarily) minimize user agency in assemblage relations. Users still decide if they will eat at the restaurant that a web search response recommended. But the moment they make that decision, content, metrics, and AI respond, collecting and providing localized information about the user’s current (and likely future) state. Thus, we understand the user as an agent in continual interaction with other performative agents, engaging collectively in meaning-making activities.

Content

TC has deployed three distinct models of content development. Earlier models saw technical communicators responsible for writing whole documents and the role of what Evia (2018) called the “craftsman model” of TPC. Based on this role, technical communicators are responsible for crafting whole documents, which often include page design. As content moved online, technical communicators moved to view content as smaller components in larger information management systems, such as DITA, for XML compliance. This compliance allows for creating different documents such as website instructions, PDFs, and print documents from the same single-sourced XML-coded content. Current approaches in TC have adopted the paradigm of topic-based authoring, where content is broken into smaller pieces (or microcontent) to be deployed directly across different media such as website windows and apps and as part of larger documents (Andersen & Batova, 2015; Batova & Andersen, 2017; Bridgeford, 2020; Evia, 2018). This component of the content-management paradigm helped with single sourcing, content reuse, and findability.

The microcontent paradigm extends component content management to contextualized and increasingly fragmented content delivery because of increased search-engine use across mobile and connected devices. Microcontent is “audio, video, or text that can be consumed in 10–30 seconds” by users (McConnell, 2019, para. 1). Microcontent is also “a type of UX copywriting in the form of short text fragments or phrases, often presented with no additional contextual support” that is increasingly used to help users interface with a wide variety of mobile and connected devices (Loranger & Nielsen, 2017, para. 1). Microcontent is a response to shifting demands of users who want to access relevant content with their mobile and connected devices without having to wade through a website. Microcontent is retrieved instantaneously, which means it must be machine readable and analyzed for effectiveness through metrics. That is, microcontent moves from a broadcast model to a localized model, where AI and metrics interact with that content and user queries to offer user’s personalized content.

Metrics

A common saying in many modern organizations is “you cannot manage what you do not measure.” Metrics form the basis of data collected to create a user’s “digital twin” (Fuller et al., 2020). Metrics are incorporated into an assemblage to create localized agency; content is deployed as a result of a semantic understanding of the users and their digital footprint (through cookies, tracking pixels, etc.). Metrics are quantitative representations of user activities and characteristics, and TC is starting to consider how intelligent delivery is facilitated by semantic understanding of the user’s history (the digital twin) and intent (what the user aims to do; Rockley & Cooper, 2012). As part of this work, we consider how metrics are now used to create personal profiles of users. For example, Google has developed the HEART framework to identify a user’s Happiness, Engagement, Adoption, Retention, and Task success through various UX metrics (Joyce, 2020).

Many websites now use a rating system to understand how happy the user is with a service and if the user eagerly promotes that site (e.g., the Net Promoter Score). Likewise, websites capture how long a person engaged with that website, how many people adopt the website by signing up, and how many active users are retained over the years, while also capturing how many users were successful in accomplishing particular tasks. As such, metrics indicate not only a user’s behavior but also their attitudes, and technical communicators need to consider how tracking such metrics can be used to develop better content and more personalized approaches.

Artificial Intelligence

Although ML is not part of the assemblage we have described, ML activity capitalizes on the relationships between other agents. Whereas “AI is the broad science of mimicking cognitive human abilities, ML is a specific subset of AI that trains a machine to learn” (Thompson et al., n.d., Artificial Intelligence and Machine Learning). ML algorithms are a branch of AI based on the idea that systems can learn from data, identify patterns, and make decisions with minimal human intervention.

At their core, ML algorithms predict patterns based on available data sets. Thus, they determine input factors that can influence a target outcome. For example, Amazon’s recommendation function is a result of ML that continuously analyzes the association between the likelihood a customer who buys one product might also like a related product. ML is used to continuously refine such association models. Together with metrics (which tabulate customer preferences), ML can help create AI-driven solutions that address user needs. As such, ML is important to help understand the user and pair information from the user’s digital twin with overall web patterns.

So far, our goal has been to understand the workings of the assemblage of user, metrics, content, and AI to determine the role of technical communicators in constructing the assemblage. We investigate this by exploring Google’s chatbot, Meena. We review current TC research on chatbots before revealing new dynamics of the assemblage of user, content, metrics, and AI in the construction of AI based chatbots. In turn, the analysis that follows will help us uncover new roles for technical communicators in AI application development, especially in localization contexts.

METHOD

Although localization can be conceptualized as a product of an assemblage of content, users, metrics, and AI, it can be demonstrated by exploring an AI application. In studying localization, we chose to study Meena—a chatbot application developed by Google that is built on an AI framework—to demonstrate how content, users, and metrics are at play to generate a personalized conversation experience for users. Google has engaged in chatbot development for the past few years. Meena is a result of complex data storage, retrieval, and processing that gets done through NLP and ML algorithms, trained on 40 billion words mined and filtered from public domain social media conversations (Adiwardana et al., 2020). The architecture of such algorithms is complex but helpful when understood to improve the accuracy of chatbot responses, especially those that are used for information retrieval and to ensure they are just, not biased towards specific user groups.

Before selecting Meena, we studied several chatbots and referred to previous chatbot scholarship. The primary limitation with chatbots is that, in many cases, a researcher cannot know whether the chatbot is a human or AI. Meena is a purely AI chatbot, making it a top choice over other human-driven applications such as chatbots on telecommunication websites (Ranade & Catá, 2021). Human intervention will not appear in the middle of a conversation. A second limitation is that commercial chatbot architectures are black-boxed: that is, only developers know the inner workings, making that information inaccessible to researchers. However, research on Meena’s design has been published and open for public access. The open access helped us dive deeper into the architecture and into the input and output data that impact conversations with Meena. The final challenge is access to AI chatbots. Meena is not available anymore; however, a repository with Meena is publicly shared on GitHub. Other chatbots like Cleverbot (Kim et al., 2019) are free to use, but their design and inner workings are black-boxed, rendering the output insufficient for our exploration.

Chatbots and Microcontent Responses to Human Queries

Chatbots are software programs used to interact and simulate conversations with users. They act like automated answering systems to respond to users’ questions, thereby solving users’ problems, either by giving or pointing users to where they can find more information. Conversational tools like chatbots are a common example of microcontent delivery platforms. The popularity of chatbots, especially in the customer-service industry, has encouraged technology companies to invest in chatbot development. Therefore, recent chatbots are being developed, using AI to make their interactions more human-like with higher content accuracy that adapts to users’ needs. An example of a user-centered chatbot is a flight recommender chat tool deployed on Expedia’s website: like a SERP, the chatbot uses user data and metrics to predict users’ needs and provide relevant responses. For example, if a customer (user) asks for flight recommendations, the chatbot can use the customer’s previous search history, travel itineraries, and other travel preferences (metrics) to provide recommendations that match that user’s previous searches. Such a localized response (content) has a higher chance of positive reception by the customer. The response is generally precise and short—microcontent—and thus can be quickly consumed by the customer.

To deliver such a human-like conversational experience, chatbots are using AI and ML algorithms. These algorithms are complex but are capable of learning from their interactions with users and constantly are improving their performance of comprehension and information delivery. Singh and Beniwal’s (2021) work demonstrated that data are a crucial component in the making of chatbots. Similarly, Ranade and Catà (2021) argued that, because technical communicators can work with data for information delivery purposes, they can contribute to chatbot development.

Evolution of Chatbots

Chatbot development has undergone a shift with big data storage, allowing for better memory and learning by chatbots and creating more advanced chatbots that can interact with users to various degrees of complexity. Different types of chatbots are distinguished from basic to advanced in their architecture to help the user; the more advanced, the better the user experience with chatbots.

- Decision-tree based chatbots: Similar to a guided menu, these types of chatbots are most basic and use buttons to communicate with the user. For instance, Facebook and LinkedIn use these types of chatbots to provide FAQ sections for a business; by clicking a button, the user gets pre-set conversation answers to common questions in a text chat.

- Keyword recognition-based chatbots: Similar to decision-tree chatbots, but intermediate in that they can recognize keywords, these chatbots can make decisions based on the keyword and can provide answers. For example, when refilling a prescription through a pharmacy phone system, these chatbots recognize keywords such as “speak to a person” and then connect the user with a human agent.

- Contextual chatbots: These advanced chatbots can have conversations and learn from the user. They can have mapped out conversational flow but also generate understanding of the user’s language and intent. A chatbot such as Siri has contextual capabilities (Leah, 2022).

Various distinctions can be made based on how the chatbots are classified per the degree to which they are task-oriented and their abilities to take in text or voice, their knowledge gathering is open or closed, or their design—if they are rule-based (decision-tree), retrieval based (keyword), or are more generative (contextual) (Hussain et al., 2019). Meena is a generative chatbot.

EXPLORATORY CASE STUDY

Because of the wide scope of network relations involved in an online chatbot, boundary conditions are needed to trace such assemblages (see Latour, 2005). As a result, we limit our scope to examining chatbots as a function of an assemblage of users, content, metrics, and AI. We offer an exploratory case study of Meena because the only available means of analysis is through Adiwardana et al.’s (2020) research report and transcribed sample conversations between Meena and humans. The information to which we have access provides adequate detail to explore the chatbot’s function, tracing its ability to respond to user queries via localization through the lens of assemblage theory.

Technical communicators are important for AI development because AI requires good information architecture and structured data for learning purposes (Earley, 2018). However, a gap exists in studying AI applications (i.e., chatbots) and how they enact new ways of localization. This gap is slowly being addressed in TPC scholarship (e.g., Ding et al., 2019; Ranade & Catà, 2021). It is important that technical communicators integrate chatbots with existing content management systems to allow content reuse and create metadata for a “controlled taxonomy” to recognize users’ speech acts (Ranade & Catà, 2021, p. 36). We extend that research to examine a new dynamic in TPC in which assemblages are responsible for creating new opportunities for collaboration and user involvement, contributing to a renewed method of studying localization.

Technical communicators can play an important role in the development of chatbots, like Meena, by moderating the content that is used to train them. Meena was trained using filtered conversations on social media (Adiwardana et al., 2020). This training ensures that the chatbot gives sensible responses. However, for information retrieval, content response must be accurate to meet users’ needs. Content moderation is a crucial part of technical communicators’ role and by moderating content, they can contribute to increasing chatbots’ responses accuracy.

Conversational AI Architecture

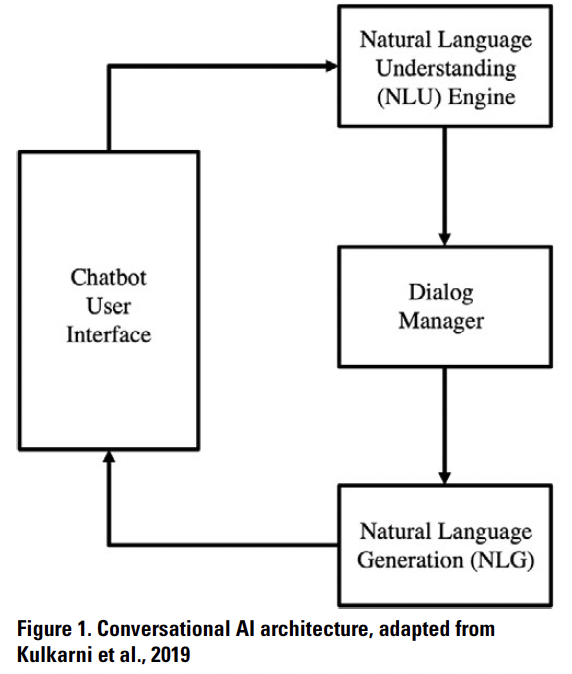

To examine other contribution aspects, we need to understand the architecture of chatbots and analyze gaps in chatbot development that can be filled by TPC contributors. Three main components make up conversational AI architecture; each component is divided into basic sections that handle preliminary tasks (see Figure 1):

- natural language understanding (NLU) unit

- dialog management (DM) system

- natural language generation (NLG) unit

NLU deals with the understanding of natural language inputs from users—a combination of two natural language understanding tasks, intent classification and entity extraction. Intent classification helps the agent understand “why users are asking those queries,” while entity extraction deals with breaking down the input to make sense of “what the user is asking.” Intent classification also helps the chatbot identify pieces of information discretely received from the user; the information and the intent, when combined, allow the agent to understand the user’s input.

Natural language understanding unit

Before NLP algorithm development, chatbots were primarily rule-based. They consisted of a series of if-else statements that compared users’ requests to the if condition and, when a match was found, returned a response, else; then, the code moved on to the next if-condition comparison. If a match was never found, a null response was returned. If the question was formulated in a way not predicted by the developers and was not present in the code, the chatbot still returned a null response. NLP helps prevent the null response by understanding natural language, using keyword classification to understand questions regardless of the framing.

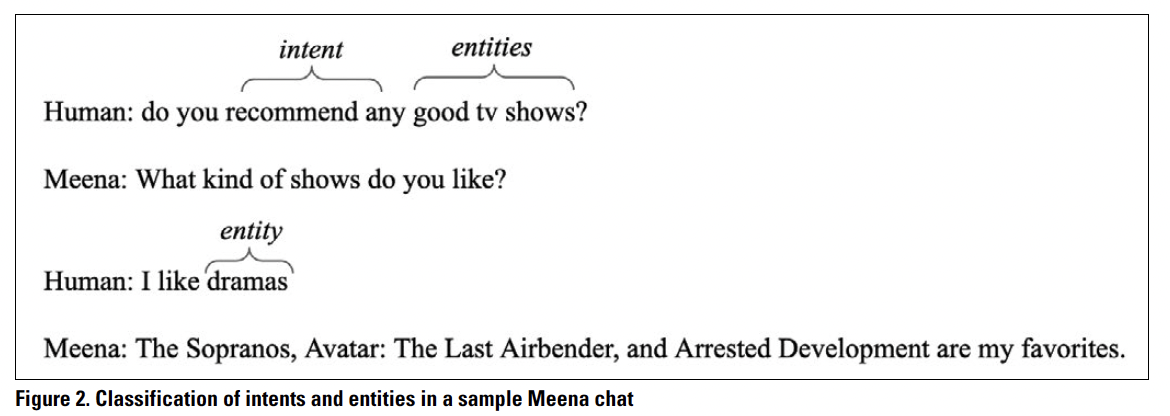

NLU is a branch of AI and a subset of NLP that uses computers to understand input made in the form of unstructured text or speech (Kulkarni et al., 2019). NLU is also programmed to understand meaning despite common human errors like transposed letters or words. The two most important functions of NLU are entity and intent recognition. Entities are particular terms that contain unique information that can help a conversational agent understand users’ requests. Intents are the phrases that the user uses to communicate their goal/need from the chatbot. For example, Meena’s responses for a question can be classified into intents and entities as shown in Figure 2. In the question, “do you recommend any good TV shows,” recommend is the users’ intent and good TV shows are entities that communicate “what” the user wants to be recommended. Neural networks (Kulkarni et al., 2019) are used to train NLU algorithms about the variety of intents and entities. Importantly, valid data that is used to train the algorithms can help to make sure that the NLU processing is error free.

For localized experiences, such data can be acquired from user comments, FAQs, forum discussions, and other spaces where user needs can be identified. Before being classified into intents and responses from a data set, users’ responses are preprocessed to eliminate unnecessary information.

For localized experiences, such data can be acquired from user comments, FAQs, forum discussions, and other spaces where user needs can be identified. Before being classified into intents and responses from a data set, users’ responses are preprocessed to eliminate unnecessary information.

Dialog management system

The DM system develops an interaction strategy that can direct the agent that is choosing its actions based on the inputs that the user has relayed. Task-oriented DM systems are responsible for guiding the user from one state of the conversation to another to successfully achieve a predefined or dynamically understood task (Kulkarni et al., 2019). Dialog delivery is as important as dialog construction; a desirable characteristic of chatbots over search algorithms is their information design (Ranade and Catà, 2021). Chatbots provide a list of options for the user to confirm an inquiry or choose a more specific one, while web searches do not provide that feature.

Such forms of user inputs are also helpful to find out whether the users’ problems are solved or if they need more help. Localization, or dialog personalization, is important to construct conversations that provide chatbots with more insights about the users, provide context-specific help, and ensure that the statements are unbiased. Rather than feeding the chatbot data from data collected through previous conversations, a more supervised dataset makes the chatbot more effective. In chatbot optimization—an assemblage of user responses, chatbot dialog content, content metrics, and AI—analyzing this new localization form is important.

Natural language generation

A subdomain of NLP, NLG focuses on the methods of how responses are generated in natural language. The NLG module receives input from the DM system in a structured format. That format is based on the dialog history and the current context; it processes the history and input and produces an output sentence that sounds natural to humans and is also specific to the context (Kulkarni et al., 2019). Researchers have developed several algorithms for NLG, such as template-based or rule-based approaches, which are more static; the N-Gram Generator, which relies on keywords only, thus struggling with contextual understanding; and the Neural Network approach and Seq2Seq, which are both ML algorithms that train using reward systems. Optimal responses get generated because the chatbot predicts a reward at the end.

The conceptual architecture of conversational AI helped us understand and explore Meena.

Exploring the Meena Chatbot

Meena is an end-to-end, neural conversational model that learns to respond sensibly to a given conversational context (Adiwardana et al., 2020). It answers open-ended questions showing that a large end-to-end model can generate almost-human-like chat responses in an open-domain setting. The training aim while developing Meena was to minimize perplexity, the uncertainty of predicting the next token (e.g., word in a conversation). Meena’s natural language quality is high, owing to the large number of datasets used to train it and the algorithms used for NLG. The Meena model has 2.6 billion parameters and is trained on 341 GB of text, filtered from public domain social media conversations (Adiwardana et al., 2020).

Deconstructing Meena’s conversations, we can identify the role of content, metrics, and user information in the responses. Because the algorithm is not publicly available, we must rely on this mapping approach to track DM by reverse social engineering. Another challenge is that this chatbot is not publicly available; therefore, we had to rely on data generated by conversations recorded by researchers while they developed and/or tested the chatbot. We used tree graphs to break down available conversations.

Meena’s Assemblage

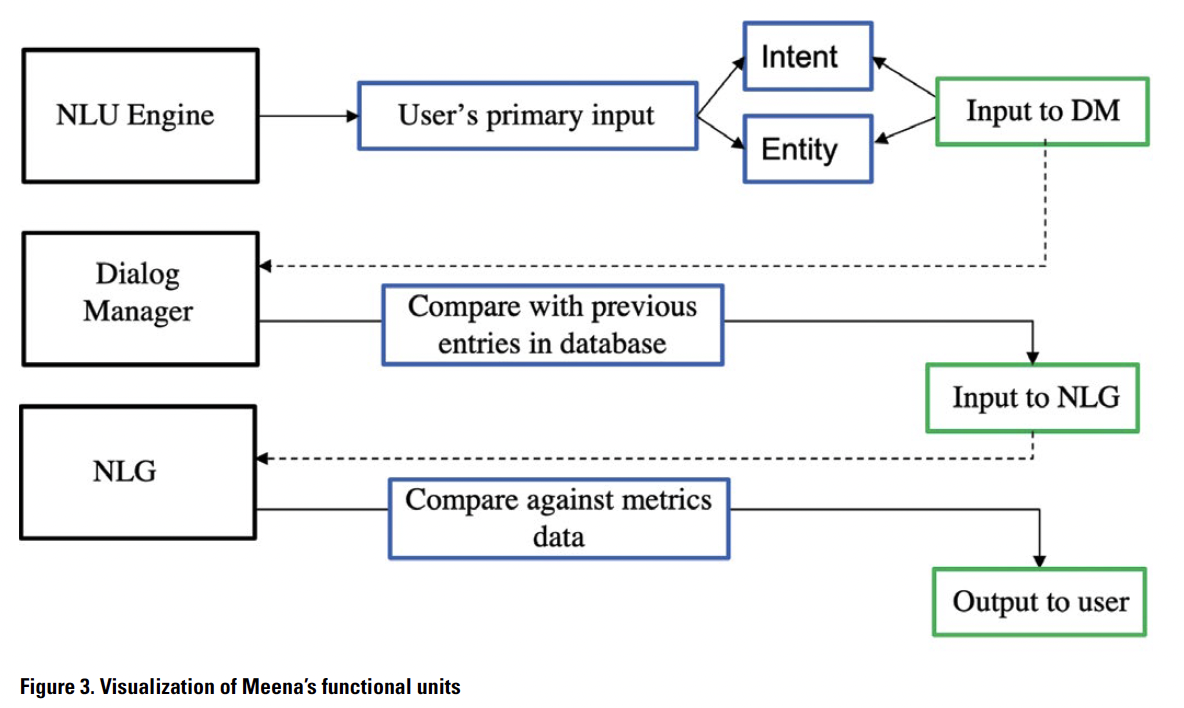

“Learning AI is often embedded, invisible, and reliant on data collected from user interactions on platforms” (Grandinetti, 2021, AI Definitions, Terminology, and History, para. 3). The various components involved in chatbot’s conversation design were necessary to separate the role of user data and algorithm design. Using the architectural components and components of the AI applications’ assemblage mentioned previously, we designed a visualization (see Figure 3) to analyze smaller functional units of Meena: chatbot conversations.

Example Conversations

Example Conversations

Conversations with Meena, and with various other chatbots, are available at https://github.com/google-research/google-research/tree/master/meena (GitHub, Inc., n.d.). These conversations were gathered during the chatbot testing process by test engineers and other stakeholders with access.

Following are three example chatbot conversations with Meena relevant to this research.

Sample conversation one

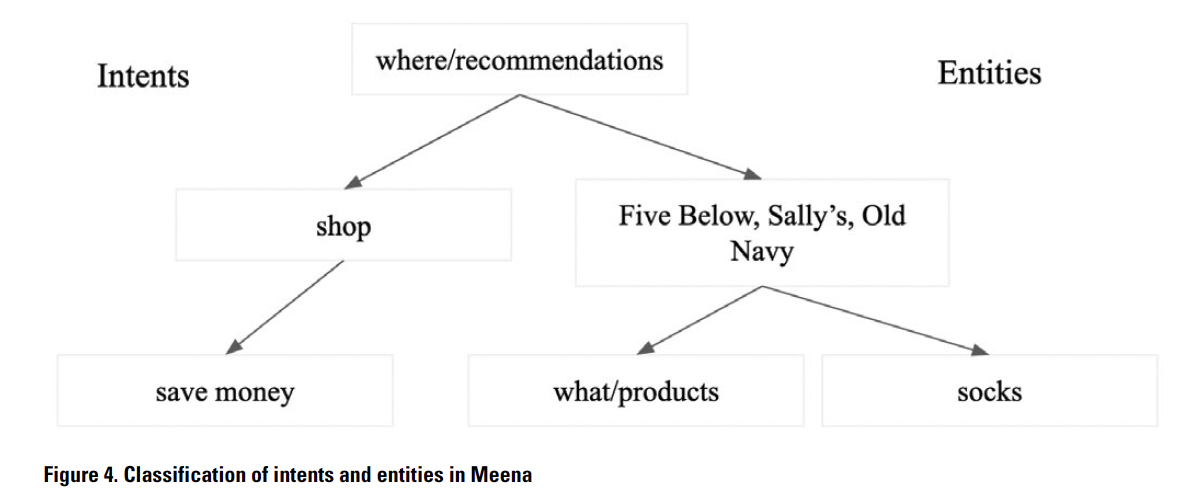

The first chatbot conversation (quoted directly from GitHub, Inc. [n.d.]) illustrates Meena’s user recommendations for budget-friendly shopping places. Meena’s suggestions (microcontent) are based on a list of shopping places (data) that are potentially worth visiting for the user based on their need (metrics). Because the user does not specifically provide what they want to shop for, Meena offers a variety of stores not selling the same commodities.

Human: Hi!

Meena: Hey! How’s it going?

Human: Good. My wallet is recovering from the spending of the holidays, haha. Hbu?

Meena: Nice! I’m doing well!

Human: I am looking to save money on any future shopping. Where do you like to shop?

Meena: I like to shop at Five Below, Sally’s Beauty Supply, and Old Navy. How about you?

Human: I love Sur La Table and Macy’s. They’re decent quality. What do you normally buy at Old Navy?

Meena: I’ll have to check out Macy’s! I like their socks, lol. What about you?

Sample conversation two

The second chatbot conversation (quoted directly from GitHub, Inc. [n.d.]) illustrates how Meena analyzes the definitions (data) of various disciplines provided by the user. Although at the beginning Meena points out the definition (microcontent), the chatbot also processes the similarities between the definitions of two different fields provided by the user and agrees to their argument.

Meena: Hello! How are you today?

Human: Which is a more useful subject, philosophy or psychology?

Meena: Psychology, definitely.

Human: Why?

Meena: Because it’s the study of human behavior and mind.

Human: I think philosophy is also a study of human behavior and mind

Meena: I’d say it’s more the study of ideas but I see what you’re saying.

Sample conversation three

The third chatbot conversation (quoted directly from GitHub, Inc. [n.d.]) illustrates Meena educating the user on the correct term (microcontent) to use for their travel. Using the right term will help the user find the correct visa for the travel destination (data) based on their current location (metrics) and determine whether a visa is required. (Note the human’s spelling error, which Meena still understands and, thus, concludes the conversation.)

Human: how about visa to dubai?

Meena: Do you live in Dubai?

Human: nope

Meena: Then you need a visa to get into Dubai, you won’t get one as an American.

Human: why so?

Meena: It’s just the way it is.

Human: ok

Meena: Glad we cleared that up 🙂

Human: how about a transit visa?

Meena: Transit visas are for tourists, not visas for travelers.

Human: am a tourist

Meena: Then you’re a tourist, not a traveler.

Human: ok.. good byte

Meena: Bye, tourist.

Parsing a Conversation

To trace the role of user, content, metrics, and AI as an assemblage, we deconstruct the conversation further to identify the NLU, DM, and NLG components, using the first conversation for analysis. (The other two conversations can also be parsed in a similar manner.) For the NLU component, we constructed a tree graph to classify conversations into intents and entities. The tree graph for the first conversation is provided in Figure 4.

This tree graph reveals that, although we can rely on NLU algorithms to process entities and intents, NLG needs to be improved for greater accuracy. More precise localization could help ensure that users’ needs are being met with their local experiences and contexts. Ultimately, the outcome does not measure whether user needs are fully met in these three conversations—a drawback of the exploratory case study. However, we can identify in the first conversation the disconnect between the user’s questions and Meena’s answers. We might consider this disconnect a problem of NLU, DM, or NLG, or we might recognize that ML remains unable to provide adequately localized responses. Examining Meena, we see such problems as an opportunity for technical communicators to understand localization as an assemblage issue requiring focus on content, users, and metrics to help create a more personalized AI-centered experience.

This tree graph reveals that, although we can rely on NLU algorithms to process entities and intents, NLG needs to be improved for greater accuracy. More precise localization could help ensure that users’ needs are being met with their local experiences and contexts. Ultimately, the outcome does not measure whether user needs are fully met in these three conversations—a drawback of the exploratory case study. However, we can identify in the first conversation the disconnect between the user’s questions and Meena’s answers. We might consider this disconnect a problem of NLU, DM, or NLG, or we might recognize that ML remains unable to provide adequately localized responses. Examining Meena, we see such problems as an opportunity for technical communicators to understand localization as an assemblage issue requiring focus on content, users, and metrics to help create a more personalized AI-centered experience.

Data analytics helps us draw from users’ information-seeking behavior to create user personas, helping us understanding their requests better and provide better solutions. Content development helps in using vocabulary that is unique, easier for chatbot algorithms to scrape, to land on the correct solution when an intent is analyzed from a problem statement. For example, in product documentation, if a user needs installation information, we can enable the chatbot’s algorithm to trace who the user is and, using geographic data, where that user is, and we can use data analytics to find out the user’s competence about the product, previous search history, and so on. An overarching view limits our ability to analyze these components. Therefore, we are using assemblage theory and characteristics of ecosystems to understand DM and NLG components of chatbots and the role of metrics, content, and users in it. From there, we can envision how technical communicators can contribute to increasing the effectiveness of chatbot responses to user needs.

Technical communicators pride themselves on being user-centered in their work and research. User experience, experience architecture, and usability studies all focus the attention of TPC on users. Chatbots and other smart products, powered by ML and AI, represent a new frontier for technical communicators’ focus. Because a human user exists on the other end of the chatbot conversation, the TPC field’s ability to represent the needs and interests of that user requires deep insight into the way chatbots receive input and generate hyper-localized responses. An AI-powered chatbot, like Meena, can localize the user using geographical metrics shared by a smartphone; connect the user to its hidden digital identity in the universe of data metrics; use algorithmic NLU to understand natural language meaning and likely intent (AI); and use its massive language training set and algorithmic NLG to provide a relevant, meaningful response to the user (microcontent). Representing the needs and interests of the human user in a Meena chatbot conversation requires developing a deep understanding of the chatbot conversation’s ecosystem and treating the chatbot’s activity as a unified, hyper-localized, contextualized assemblage. Understanding Meena as an assemblage agency whose combined actors include user, content, metrics, and AI, technical communicators can identify specific insertion points in the chatbot communication loop where they can effectively represent human user needs and interests.

Before discussing implications, we emphasize this point: human users are not independent actors in the AI-powered chatbot assemblage. Indeed, as Ranade (2020) has noted, this is the “real-time” audience of data analytics that offers our field different dynamics for localization. Though human users may initiate conversations, at the moment of their interaction with the chatbot, they are subsumed in the chatbot’s assemblage activity. Users’ locations are tracked in real time to enable meaningful, contextual, localized responses. Conversations in the chatbot include both human-generated and machine-learned algorithmic prompts and responses that are processed for intents and entities. Microcontent mined from the universe of metrics accessible to the chatbot is used to provide relevant responses in human user queries. Missing, however, is user-focused advocacy. As a result, we call on the field to identify and advocate insertion points in such ML assemblages.

IMPLICATIONS

Technical communicators need to address the new dynamics of assemblages where user, content, metrics, and AI work to address chatbot contexts. Johnson-Eilola and Selber (2022) have speculated that TC practice is itself an assemblage, and we find ourselves resonating with that speculation. As we have illustrated, chatbots provide localized microcontent that can help users in contextual ways. The intervention of technical communicators can advocate for users’ needs in algorithm-centered activities like chatbot conversations for a more natural human-machine balance.

We offer potential insertion points into assemblage agency that technical communicators might consider advocating for human users. We encourage the field to identify others so we can train technical communicators for these new workplace practices.

Insertion Point One: Structured Content for Bot Consumption

Technical communicators hold ideal positions to create structured content and information architecture that is easily accessible to bots. Technical communicators can build online content that is effectively structured for algorithmic actors like webbots to understand in context. They can use markup language and structured data to ensure that chatbots or other bots can recognize, access, and understand content. The better the training content used for ML, the more effective algorithm-generated responses will be in chat conversations.

NLP is the algorithmic building block of conversational agents like chatbots. Creating web-based information architectures for chatbots using structured data to appropriately tag online content, provides effective training corpora for conversational agent training and ML. For example, Googlebots read the Speakable beta schema markup (Google, n.d.) to identify information prepared for text-to-speech responses by conversational agents like Google Assistant. When developing content, or when designing online interfaces, including Speakable markup helps ensure that content is provided in a format easily identified and presented by conversational agents.

Insertion Point Two: Training Corpora for Data-Informed Personas

Another crucial skill that technical communicators bring to chatbots is their ability to develop stronger data-informed user personas to create more audience-aware chatbots. TC already uses a variety of tools to achieve these goals, including usability testing, user-centered design, and participatory research. Technical communicators, therefore, can be valuable in preparing an AI-powered agent using different corpora and personas so a chatbot can better address specific user interests and needs. For example, awareness of differently abled users seeking airline-travel information might ensure that text from social-media posts and online discussion boards authored by members of disability communities is used as a training corpus so the chatbot could more effectively respond to queries related to accessible resources for such users.

Technical communicators are ideally situated and trained to research and develop nuanced user profiles using existing audience analysis, data analytics from online behavior, and context-specific localized knowledge. Technical communicators could be involved in early stages of selecting appropriate text corpora for ML training for chatbots, helping identify effective training corpora for understanding and helping provide context- and user-appropriate NLG in responses. Hence, using both existing methods and novel approaches to data collection and analysis, technical communicators can develop detailed data-informed user personas that work in specific contexts. These personas can be used to identify the types of textual data that would be most useful to include more audience-aware content in chatbots.

Insertion Point Three: Chatbot Interface Design

Given that technical communicators also need to consider content in relation to the user experience, they are also ideally situated to consider how chatbot content functions regarding user-centered interface design. Although algorithms may require training to understand when a conversational agent shifts from human user to chatbot, users require ability to recognize when the chatbot is preparing a response, when the chatbot has finished speaking, and when a response is expected. Familiarity with new and emerging standards for conveying text conversation activities effectively can be employed, as can recommendations for effective prompts for calls to action on the part of the human user. For example, in a text conversation using a smartphone, using alternating left and right justification of messages, differently colored message “bubbles,” and the speaker’s identity to each message can help differentiate human and chatbot responses. Using the familiar “three dots in motion” to represent when a chatbot is preparing a response can help human users, who are accustomed to such responses in human-to-human text conversations. Subtle design tweaks identifying when messages are both read and received by each participant in the conversation can help human users recognize that the chatbot has received their message. Technical communicators can apply audience research to localize interface designs for platforms (e.g., desktop, tablet, smartphone); accessibility (e.g., text readers for blind users, audio readers for deaf users); and contexts (e.g., low-light environments, silent reading rooms, airplanes).

Insertion Point Four: Response Moderation in Conversations

As user-focused practitioners and researchers, technical communicators have unique skills to moderate chatbot transcripts and to recommend programming edits based on poor user experience, whether reported by the human user or identified by the technical communicator. Human-in-the-loop oversight of algorithm-generated content is an important aspect of ensuring human user experiences are successful and satisfactory, without unintentional or programmed bias and discrimination. Noble (2018) is among a growing chorus of scholars (for others, see Akter et al., 2021; Turner Lee et al., 2019) concerned about algorithmic bias, and while addressing that bias by encouraging more inclusive programming is important, so too is moderating machine-learned outputs in AI-powered chatbots. Engaging technical communicators in reviewing chatbot conversations with human users, especially when contextual data available to the chatbot algorithm is made available in human-readable form, can confirm the accuracy, reliability, and appropriateness of chatbot responses to human queries. Combining audience analysis and human user feedback with user data points available to the chatbot (e.g., geographical location, conversation prompt, user online behavior, perhaps prior purchasing decisions), technical communicators can review a representative sample of chatbot conversations to determine the extent to which they appropriately meet the human users’ needs or when a handoff to a human is crucially needed in the future for recurring issues. Most importantly, technical communicators should have opportunities to make recommendations to programmers and engineers to tweak responses based on their analysis, perhaps by recommending additional machine-learning training corpora or review of natural language understanding and NLG.

Insertion Point Five: Engage Stakeholders to Deploy Data in More Ethical and User-Centered Ways

Crucially, technical communicators are also well-situated to leverage more data-informed and ethical deploying of data with various stakeholders to develop intelligent content for chatbots. For example, Saunders (2018) has pointed to the convergence of digital marketing techniques in the TC field where interactive technologies are “charged with the task of creating intelligent content that is ready to serve the demands of new and yet unimagined interactive channels” (p. 10).

One of the authors works as a web manager on a school’s marketing and engagement team, bridging the gap between TC researcher and marketing professional. Indeed, Verhulsdonck et al. (2021), Verhulsdonck & Tham (2022), and Tham et al. (2022), among others, have identified the importance of developing intelligent content in TC.

Although marketing is often focused on “conversion” (getting people to make a purchase or perform a particular action), the influence of data-driven techniques employed by digital marketing analytics on TC must be recognized. Indeed, both focus on how (intelligent) content, user, metrics, and AI can be leveraged to better address various audience objectives.

Earley (2018) recognized this convergence by noting how technical communicators can leverage user-centered techniques to create a user journey by identifying 1) important touchpoints for the user; 2) the user’s wants, needs, thoughts and emotional states during an interaction; 3) sources and best channels to deploy content to help the user; 4) areas to evaluate and improve content; and 5) areas where further refining is needed or a handoff to a human operator is needed by a chatbot. As such, technical communicators are crucial to this cycle of “measure, manage, govern, and improve” to help develop better on-going processes (p. 14). Such an iterative approach is crucial to create responsible AI chatbots that continuously employ contextual and situational understandings of the user. Given that many companies now employ customer-relationship management systems that store customer data, technical communicators must be involved to guide how data can be used in ethical and responsible ways. A fruitful relationship with marcomm can help techcomm design human user experiences that are localized and contextualized appropriately while also addressing ethical issues.

CONCLUSION

The concept of an assemblage is important for technical communicators to better understand the way content is localized and enacted in AI-powered online technologies like chatbots. User, content, metrics, and AI engage in complex relations to produce meaningful conversations that respond immediately to contextual changes of the user like their location, preference, and behavior. We recommend that technical communicators play a role in advocating for human users in such content ecologies to better localize microcontent for users. To do so, we explored the Meena chatbot to reveal its architecture and reliance on ML to provide microcontent, based on training datasets selected by engineers. From this exploration, we have identified five specific insertion points in the design, development, deployment, use, and measurement of conversational agents where technical communicators better localize and meet the needs and interests of human users.

Our use of the assemblage reveals a novel conception of TC as engaging in a content ecology of user, content, metrics, and AI to localize information more meaningfully. The skills needed to navigate this interactive, dynamic ecosystem differ from those traditionally taught in technical communication courses. Technical communicators should have a general understanding of the way AI and ML are used to generate content; the way digital identities are collected, available, and used in such assemblages to localize content and responses; and the way metrics are collected, curated, and implemented in localizing responses to users. We do not call for technical communicators to become data scientists or computer programmers, but we believe that the explosion of AI and ML in communication practices, along with the availability of enormous datasets containing user behaviors in the form of metrics, requires additional skills of technical communicators. Specifically, we advocate that TC curricula consider adding foundational skills in data analytics, NLP, geolocation, and ML to their outcomes.

We should not expect ML, despite its capacity for ingesting and processing data, to communicate effectively with users (or evolve) on its own. Without intervention by technical communicators, ML will continue to train on biased discourse, continuing to marginalize those already marginalized by those discourses, or foster black-boxed processes that perpetuate discrimination or lack of explanation or transparency of decision-making to users. Indeed, chatbots are important to help localize where the user is but also to develop explainable AI that meets the user where they are. Users will benefit from technical communicators engaging with designing conversational agents, and better user experiences are in everyone’s best interests.

ABOUT THE AUTHORS

Dr. Daniel L. Hocutt serves as the web manager on the marketing & engagement team at the University of Richmond School of Professional & Continuing Studies in Richmond, VA. He also serves as an adjunct professor of liberal arts in the same school, teaching composition, technical communication, and research methods. His research interests are at the intersection of rhetoric, technology, and users, focusing on the rhetorical influence of AI-driven algorithmic processes in digital spaces on user experience. He’s published in journals including Present Tense, Computers & Composition, and the Journal of User Experience along with several edited collections. Dr. Hocutt can be reached at dhocutt@gmail.com.

Dr. Nupoor Ranade is an assistant professor of English at George Mason University. Her research addresses knowledge gaps in the fields of technical communication practice and pedagogy, and focuses on professional writing and editing, inclusive design, and ethics of AI. Through her teaching, Nupoor tries to build a bridge between academia and industry and help students develop professional networks while they also contribute to the community that they are part of. Her research has won multiple awards and is published in journals including but not limited to Technical Communication, Communication Design Quarterly, Kairos, and IEEE Transactions on Professional Communication. Dr. Ranade can be reached at nranade@gmu.edu.

Dr. Gustav Verhulsdonck is an assistant professor in business information systems at Central Michigan University. His research interests are user experience design, technical and business communication, behavioral design, and mobility. He has worked as a technical writer for International Business Machines (IBM) and as a visiting researcher for the University of Southern California’s Institute for Creative Technologies. He also worked as a consultant for clients such as the National Aeronautics and Space Administration (NASA) and the U.S. Army Research Laboratory. His research has appeared in the International Journal of Human-Computer Interaction, Journal of Technical Writing and Communication, Journal of Business and Technical Communication, Computers and Composition, ACM’s Communication Design Quarterly, and Technical Communication Quarterly. Dr. Verhulsdonck can be reached at gverhulsdonck@gmail.com.

REFERENCES

Adiwardana, D., Luong, M.T., So, D. R., Hall, J., Fiedel, N., Thoppilan, R., Yang, Z., Kulshreshtha, A., Nemade, G., Lu, Y. & Le, Q. V. (2020). Towards a human-like open-domain chatbot. arXiv. https://arxiv.org/abs/2001.09977

Agboka, G. Y. (2013). Participatory localization: A social justice approach to navigating unenfranchised/disenfranchised cultural sites. Technical Communication Quarterly, 22(1), 28–49. https://doi.org/10.1080/10572252.2013.730966

Akter, S., McCarthy, G., Sajib, S., Michael, K., Dwivedi, Y. K., D’Ambra, J., & Shen, K. N. (2021). Algorithmic bias in data-driven innovation in the age of AI. International Journal of Information Management, 60, 1–13. https://doi.org/10.1016/j.ijinfomgt.2021.102387

Andersen, R., & Batova, T. (2015). The current state of component content management: An integrative literature review. IEEE Transactions on Professional Communication 58(3), 247–270. https://doi.org/10.1109/TPC.2016.2516619

Batova, T. & Andersen, R. (2017). A systematic literature review of changes in roles/skills in component content management environments and implications for education. Technical Communication Quarterly, 26(2), 173–200. https://doi.org/10.1080/10572252.2017.1287958

Bazerman, C. (2004). Speech acts, genres, and activity systems: How texts organize activities and people. In C. Bazerman & P. Prior (Eds.), What writing does and how it does it: An introduction to analyzing texts and textual practices (pp. 309–340). Routledge.

Beck, E. N. (2015). The invisible digital identity: Assemblages in digital networks. Computers and Composition, 35, 125–140. https://doi.org/10.1016/j.compcom.2015.01.005

Bennett, J. (2010). Vibrant matter: A political ecology of things. Duke University Press.

Bridgeford, T. (Ed.). (2020). Teaching content management in technical and professional communication. Routledge.

Bruns, A. (2006). Towards produsage: Futures for user-led content production. In Proceedings of the 5th International Conference on Cultural Attitudes towards Technology and Communication (pp. 275–284).

Bryant, L. R. (2014). Onto-cartography: An ontology of machines and media. Edinburgh University Press.

Deleuze, G., & Guattari, F. (1987). A thousand plateaus: Capitalism and schizophrenia. (B. Massumi, Trans.). University of Minnesota Press. (Original work published 1980).

Ding, H., Ranade, N., & Catà, A. (2019, October). Boundary of content ecology: Chatbots, user experience, heuristics, and pedagogy. In Proceedings of the 37th ACM International Conference on the Design of Communication. https://doi.org/10.1145/3328020.3353931

Earley, S. (2018). AI, chatbots and content, oh my! (Or technical writers are doomed—to lifelong employment). Intercom, 65(1), 12–14.

Evia, C. (2018). Creating intelligent content with Lightweight DITA. Routledge.

Fuller, A., Fan, Z., Day, C., & Barlow, C. (2020). Digital twin: Enabling technologies, challenges and open research. IEEE Access, 8. https://doi.org/10.1109/ACCESS.2020.2998358

GitHub, Inc. (n.d.). Toward a human-like open-domain chatbot. https://github.com/google-research/google-research/tree/master/meena

Google. (n.d.). Speakable (BETA) schema markup. Google Search Central. https://developers.google.com/search/docs/advanced/structured-data/speakable

Grandinetti, J. (2021). Examining embedded apparatuses of AI in Facebook and TikTok. AI & Society. https://doi.org/10.1007/s00146-021-01270-5

Haas, A. M. (2012). Race, rhetoric, and technology: A case study of decolonial technical communication theory, methodology, and pedagogy. Journal of Business and Technical Communication, 26(3), 277–310. https://doi.org/10.1177/1050651912439539

Hartman, K. (2020). Digital marketing analytics: In theory and in practice. Amazon Digital Services LLC—KDP Print US.

Hocutt, D. L. (2017). The complex example of online search: Studying emergent agency in digital environments. In Proceedings of the 35th ACM International Conference on the Design of Communication (pp. 1–8). https://doi.org/10.1145/3121113.3121207

Hocutt, D. L. (2018). Algorithms as information brokers: Visualizing rhetorical agency in platform activities. Present Tense, 6(3). http://www.presenttensejournal.org/?s=Algorithms+as+information+brokers

Hocutt, D. L., & Ranade, N. (2019). Google Analytics and its exclusions. Digital Rhetoric Collaborative Blog Carnival 16. https://www.digitalrhetoriccollaborative.org/2019/12/19/google-analytics-and-its-exclusions

Hussain, S., Ameri Sianaki, O., & Ababneh, N. (2019, March). A survey on conversational agents/chatbots classification and design techniques. In Workshops of the International Conference on Advanced Information Networking and Applications (pp. 946–956). Springer.

Johnson-Eilola, J., & Selber, S. (2022). Technical communication as assemblage. Technical Communication Quarterly. Advance online publication. https://doi.org/10.1080/10572252.2022.2036815

Jones, N. N. (2016). The technical communicator as advocate: Integrating a social justice approach in technical communication. Journal of Technical Writing and Communication, 46(3), 342–361. https://doi.org/10.1177%2F0047281616639472

Joyce, A. (2020). 7 steps to benchmark your product’s UX. Nielsen Norman Group. https://www.nngroup.com/articles/product-ux-benchmarks

Kim, N. Y., Cha, Y., & Kim, H. S. (2019). Future English learning: Chatbots and artificial intelligence. Multimedia-Assisted Language Learning, 22(3), 32–53.

Kulkarni, P., Mahabaleshwarkar, A., Kulkarni, M., Sirsikar, N., & Gadgil, K. (2019). Conversational AI: An overview of methodologies, applications & future scope. In 2019 5th International Conference on Computing, Communication, Control and Automation (pp. 1–7). https://doi.org/10.1109/ICCUBEA47591.2019.9129347

Latour, B. (2005). Reassembling the social: An introduction to Actor-Network-Theory. Oxford University Press.

Leah. (2022, May 26). The ultimate guide to chatbots in business. Userlike. https://www.userlike.com/en/blog/chatbots

Loranger, H., & Nielsen, J. (2017). Microcontent: A few small words have a mega impact on business [Web log]. Nielsen Norman Group. https://www.nngroup.com/articles/microcontent-how-to-write-headlines-page-titles-and-subject-lines

McConnell, L. (2019). Microcontent and what it means for communication and technical writing [Web log]. Best practices in strategic writing. http://blogs.chatham.edu/bestpracticesinstrategiccommunication/2019/04

/18/microcontent-and-what-it-means-for-communication-and-technical-writing

Miller, C. R. (2007). What can automation tell us about agency? Rhetoric Society Quarterly, 37(2), 137–157. http://doi.org/10.1080/02773940601021197

Moore, K. R, & Richards, D. P. (Eds.). (2018). Posthuman praxis in technical communication. Routledge.

Noble, S. U. (2018). Algorithms of oppression: How search engines reinforce racism. New York University Press.

Ranade, N. (2019). World interaction design day event report on gendered AI [Web log]. Digital Rhetoric Collaborative Blog. https://www.digitalrhetoriccollaborative.org/2019/11/26/world-interaction-design-day-event-report-on-gendered-ai

Ranade, N. (2020, October). The real-time audience: Data analytics and audience measurements. In Proceedings of the 38th ACM International Conference on Design of Communication (pp. 1–2). https://doi.org/10.1145/3380851.3418613

Ranade, N., & Catá, A. (2021). Intelligent algorithms: Evaluating the design of chatbots and search. Technical Communication, 68(2), 22–40.

Rockley, A., & Cooper, C. (2012). Managing enterprise content: A unified content strategy. New Riders.

Rose, E., & Cardinal, A. (2018). Participatory video methods in UX: Sharing power with users to gain insights into everyday life. Communication Design Quarterly, 6(2), 9–20. https://doi.org/10.1145/3282665.3282667

Rose, E. J., & Walton, R. (2018). Factors to actors: Implications of posthumanism for social justice work. In K. R. Moore & D. P. Richards (Eds.), Posthuman praxis in technical communication (pp. 91–117). Routledge.

Saunders, C. (2018). A new content order for the multi-channel, multi-modal world. Intercom, 65(1), 9–11.

Singh, S., & Beniwal, H. (2021). A survey on near-human conversational agents. Journal of King Saud University-Computer and Information Sciences. Advance online publication. https://doi.org/10.1016/j.jksuci.2021.10.013

Tham, J., Howard, T., & Verhulsdonck, G. (2022). Extending design thinking, content strategy, and artificial intelligence into technical communication and user experience design programs: Further pedagogical implications. Journal of Technical Writing and Communication. Advance online publication. https://doi.org/10.1177%2F00472816211072533

Thompson, W., Li, H., & Bolen, A. (n.d.). Artificial intelligence, machine learning, deep learning and beyond. Understanding AI technologies and how they lead to smart applications. SAS Insights. https://www.sas.com/en_us/insights/articles/big-data/artificial-intelligence-machine-learning-deep-learning-and-beyond.html

Toffler, A. (1971). Future shock. Pan.

Turner Lee, N., Resnick, P., & Barton, G. (2019). Algorithmic bias detection and mitigation: Best practices and policies to reduce consumer harms. Center for Technology Innovation. Brookings.

Verhulsdonck, G., Howard, T. & Tham, J. (2021). Investigating the impact of design thinking, content strategy, and artificial intelligence: A “streams” approach for technical communication and UX. Journal of Technical Writing and Communication, 51(4), 468–492. https://doi.org/10.1177/00472816211041951

Verhulsdonck, G., & Tham, J. (2022). Tactical (dis)connection in smart cities: Postconnectivist technical communication for a datafied world. Technical Communication Quarterly. Advance online publication. https://doi.org/10.1080/10572252.2021.2024606

Verhulsdonck, G. (2018). Designing for global mobile: Considering user experience mapping with infrastructure, global openness, local user contexts and local cultural beliefs of technology use. Communication Design Quarterly Review, 5(3), 55–62. https://doi.org/10.1145/3188173.3188179

Walton, R., Moore, K. R., & Jones, N. N. (2019). Technical communication after the social justice turn: Building coalitions for action. Routledge.

Zachry, M., & Spyridakis, J. H. (2016). Human-centered design and the field of technical communication. Journal of Technical Writing and Communication, 46(4), 392–401. https://doi.org/10.1177%2F0047281616653497