By Andrea L. Beaudin | Member

Generative AI, such as GPT, can improve and enhance the instructional design process, transforming challenges into innovative learning opportunities.

As an instructor and communicator, my first tinkering with the AI chatbot put me in a bit of a panic mode. I entered an assignment prompt, and in seconds, usable text appeared on the screen, text that was not too different from the 75+ assignments that I had recently graded.

I live in a household of gamers. I think it was Dungeons and Dragons (D&D) that made me think differently about ChatGPT. If you’re not familiar with D&D, players create characters that, in part, fit along two axes: law/chaos and good/evil. With neutral acting as a midpoint along both axes, character alignments can fall somewhere between lawful good and chaotic evil. Experiencing how easily students could bypass learning and critical thinking by plugging in a prompt, I initially characterized ChatGPT as chaotic evil.

One night, however, the AI evangelist of the household demonstrated how to create storylines for D&D using ChatGPT. I began to perceive the creative opportunities generative AI could enable. Though the technology could be employed to sidestep learning, it could also be a muse for brainstorming and concept development. In the past year, I’ve discovered multiple uses for generative AI that include developing simulation-based learning, audience analysis, and learner assessment. While most of the applications I list focus on instructional design, some of these strategies can streamline or enhance workflows for user experience (UX) researchers and other technical communicators.

OpenAI’s ChatGPT vs. the API

My use of generative AI chatbots has been primarily with OpenAI’s ChatGPT and, more recently, its API developer platform (OpenAI). The platform offers greater opportunities for control and customization than the free ChatGPT, but it is not as user-friendly as the Plus or Enterprise versions. It is a paid model, but it has two major appeals: control over how OpenAI uses one’s data and the Assistant feature. With the API, data such as prompts and messages are retained for a set period (30-60 days) with the opportunity for zero data retention. Uploaded files are kept until the user deletes them (OpenAI). Unlike ChatGPT input, one’s API data is not used to train the model, so it is more suitable for sensitive information (though as always, user beware/be aware).

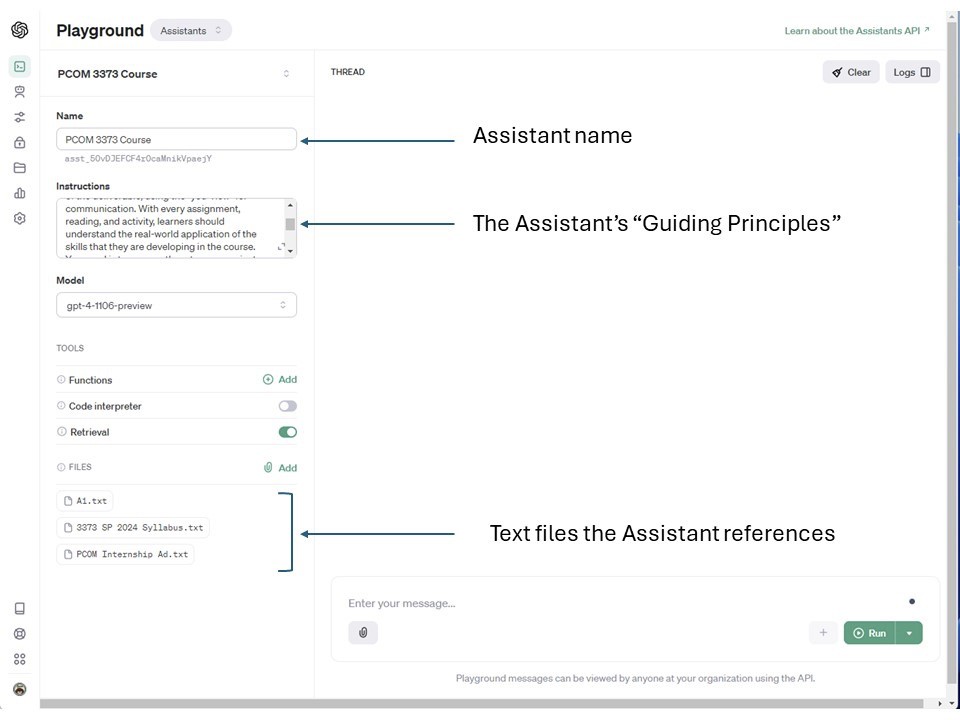

The Assistant is my favorite feature of the API. Users can create an Assistant with instructions (or guiding principles) that dictate the chatbot’s style and tone, provide output guidelines, or even frame each response from a defined standpoint. Additionally, the Assistant’s Files option enables creating a repository of files for the Assistant to reference when responding to prompts (see Figure 1).

Exploring Generative AI’s Potential for Instructional Design

Instructional designers are often challenged by constrained resources, specifically time, labor, and money. Utilizing generative AI has proven to be valuable in mitigating these impediments. The technology can assist with the creation of diverse elements including scenarios, personas, and assessment tools.

Simulations and Branching Scenarios

In the fall of 2022, I spent well over a week creating six fictional case studies for a business communication course designed as a workplace simulation. Acting as consultants, students used the case studies for their problem-solving team projects. Their “clients” ranged from a credit union to a coffee shop. Students were engaged and did well, but I felt that the context I provided was a bit too neatly packaged—in practice, problem-solving requires analyzing multiple factors. With my limited time, I simply couldn’t develop meaningful details such as organizational structure and communication artifacts.

Understanding how AI could be used to create D&D storylines, I developed a series of prompts for generating a fictional case study; a template is below with examples for some prompts that might need clarification:

Simulation Prompt 1: Design a [topic] problem-solving case study concerning [customization] for students to solve for a [timeframe], [number]-member group project. The students are [role].

Example: Design a business communication problem-solving case study concerning a credit union for students to solve for a 6-week, 4-member group project. The students are communication consultants.

Simulation Prompt 2: What are [name types of stakeholders]’s concerns?

Example: What are leadership’s concerns?

Simulation Prompt 3: What are examples of [the problem]?

Example: What are examples of customer complaints? [useful for generating artifacts for learners to analyze]

Simulation Prompt 4: What is the organizational structure of [the company]?

Simulation Prompt 5: How does [company] communicate with [stakeholder name]?

The follow-up prompts generate well-developed fictional case studies that more realistically portray a company and the complex issues it faces—and the prompt process takes minutes. Granted, revisions and edits still require time investment, but this is measured in hours rather than days.
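For anyone who reuses these templates each semester, the bracketed slots can be filled programmatically. What follows is only a minimal Python sketch, not part of my own workflow: the field names (topic, customization, and so on) and the helper fill_prompt are hypothetical labels chosen for illustration, and the result is simply text to paste into ChatGPT or the Assistant.

```python
from string import Formatter

# Template text taken from Simulation Prompt 1. The field names in braces are
# hypothetical labels for the bracketed slots, chosen for this sketch.
SIMULATION_PROMPT_1 = (
    "Design a {topic} problem-solving case study concerning {customization} "
    "for students to solve for a {timeframe}, {number}-member group project. "
    "The students are {role}."
)


def fill_prompt(template: str, **fields: str) -> str:
    """Fill a prompt template, refusing to leave any slot empty."""
    # Collect every named slot that appears in the template.
    needed = {name for _, name, _, _ in Formatter().parse(template) if name}
    missing = needed - set(fields)
    if missing:
        raise ValueError(f"Missing template fields: {sorted(missing)}")
    return template.format(**fields)


prompt = fill_prompt(
    SIMULATION_PROMPT_1,
    topic="business communication",
    customization="a credit union",
    timeframe="6-week",
    number="4",
    role="communication consultants",
)
print(prompt)
```

With these values, the sketch reproduces the credit union example given for Simulation Prompt 1. Raising an error on a missing slot is a deliberate choice here: a half-filled prompt tends to produce generic output that takes longer to revise.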

Similarly, generative AI can assist in creating branching scenarios that enable learners to explore the consequences of their choices. Using follow-up prompts, one can develop a Choose Your Own Adventure-type simulation, a virtual escape room, or other game-type engagement.

As far as financial savings for students, I had spent hours searching for low-cost or open educational resources that would fit this class without success. Purchasing additional materials would have doubled the cost to students.

Finally, the comparative ease of generating these materials makes it easier to update existing content or create new scenarios each semester.

User/Audience Personas

Often, simulation-based learning stumbles when students present their solutions. The instructor might be the only one in the audience who has an awareness of stakeholder needs and expectations. Plus, the other students in the audience have little impetus to engage with the presenters and their presentations. However, simulation and role-playing can be more effective if presenters have a sense of the names and needs of their individual stakeholders, and student audiences are more likely to engage as representative stakeholders if stakeholder goals, needs, and preferences are clearly defined. Enter the AI-generated audience persona.

While I had experience creating user personas for UX research, I had not considered using the corollary, audience personas, in the classroom until I attended a conference presentation entitled “Making It Real” (Mangat, Dolph and Morse). During the session, the presenters handed out audience personas to attendees as part of a role-playing exercise. I immediately grasped the value to learners; yet, given time and energy constraints, developing approximately 18 audience personas per fictional company would have been impossible without ChatGPT.

Creating personas is much easier with the Assistant, as each edited/revised case study can be uploaded as a file for the bot to reference. However, this can be achieved in ChatGPT by appending the revised case study to the prompt. Understandably, the more precise the prompt for audience personas, the better the results. Still, follow-up prompts are necessary to refine, and at times, set additional “guiding principles.” An example of the prompt process for this task:

Persona Prompt 1: Do you know what a user persona is? [check ChatGPT’s definition to ensure it aligns with the project/task goals, and refine if necessary]

Persona Prompt 2: Create [number of] user personas for [name a part or division of the organizational structure, such as “C-Suite” or “IT Department”].

Review the generated personas to check for issues or concerns. I noticed that some personas, while realistic in many respects, at times reflected gender and ethnic stereotypes. Revising this requires setting guiding principles, which can be done through an almost Socratic exchange:

Persona Prompt 3: Do you know what stereotypes are? [evaluate response and set additional guidelines if necessary]

Persona Prompt 4: Analyze the user personas and evaluate for potential stereotyping.

Persona Prompt 5: Revise the user personas to avoid potential reinforcement of stereotypes.

As always, one will need to review, revise, and edit ChatGPT’s responses—but using AI can save time, offering a dimension of learner-centered activities that would typically be infeasible due to time and labor constraints.

Assessment

First, I should clarify what this section is not about: it’s not about using AI for grading. For me, assessment requires human interaction, specifically for more complex deliverables incorporating research, opinion and reflection, and persuasive techniques. Generative AI, however, can be an excellent tool for evaluating assessment strategies, suggesting new assignments and activities, and generating clear assessment guidelines (such as rubrics) for both instructors and learners.

Assignments and activities are vital for both learners and instructors to determine the understanding and potential applications of course materials. Assignment and activity specifications can, however, fall prey to “insider language,” staleness from overuse, or even a tangential alignment with course learning outcomes. Generative AI can effectively evaluate and revise specifications for language and alignment, and it also can suggest new approaches for achieving learning goals.

With assignments and activities, I’ve found the Assistant provides far better results, as referential files and instructions can include the course syllabus with learning outcomes, departmental and institutional assessment criteria, and other supporting information about the course (learning materials, teaching style, class size and modality). For example, my Assistant has pre-loaded instructions listing learning outcomes and the statement, “With every assignment, reading, and activity, learners should understand the real-world application of the skills that they are developing in the course.”

Evaluating assignment and activity descriptions with an AI chatbot is straightforward, but precise language saves time and avoids potential errors or generation of unnecessary content. This prompt template has proven effective (populated example included):

Coursework Prompt 1: Evaluate [“the following assignment description” or file name] for a [audience]-level course in [subject]. Explain how the assignment can facilitate achievement of one or more of the course outcomes “[outcome 1],” “[outcome 2],” (etc.). [Paste assignment description if not using Assistant].

Example: Evaluate Individual Dashboard.txt for a college junior-level course in data visualization. Explain how the assignment can facilitate achievement of one or more course outcomes: “LO1: identify opportunities for data visualization,” “LO2: critique existing data visualizations”…

Coursework Prompt 2: Revise the assignment description for clarity and conciseness. Include an explanation of learning outcomes achieved [can add other criteria, such as practical applications].

Example: Revise the assignment description for clarity and conciseness. Include an explanation of learning outcomes achieved. Identify workplace skills learners develop completing the assignment. Use user-centered language.

AI’s evaluation of existing course materials might be surprising—standard assignments and activities might no longer fit with updated learning outcomes, or connections that seem clear or obvious to the instructor might need clarification for learners. Additionally, it is easy to include heuristics for programmatic, departmental, and institutional assessment—alignments that are often assumed but infrequently verified.

Conversational AI can suggest different strategies for assessment, notably with activities. The suggestions are not necessarily original types or formats, but they are customized and fine-tuned to whatever specifications and constraints the user defines. When prompting, the more detail the better:

Activity Idea Prompt: Design a [time limit] [modality] activity on [topic] for a [audience]-level course in [subject]. [add additional specifications if desired]

Example: Design a 30-minute synchronous online activity on chart types for a college junior-level course in data visualization. Students will work in small group breakout rooms in Microsoft Teams. Explain the alignment of the activity to course outcomes and real-world applications.

With assignments and activities, grading can be daunting. ChatGPT’s ability to synthesize information and generate content makes it incredibly useful for a task I’ve always found particularly challenging: designing grading rubrics. While instructors often have a gut feeling about what differentiates A-quality work from B-quality, C-quality, etc., defining those distinctions in learner-friendly terms can be arduous and time-consuming. Also, though assignments and activities are designed to facilitate meeting learning outcomes, at times some evaluation heuristics do not directly align with either outcomes or clearly defined aspects of the work. Generative AI offers an opportunity to create specific and precise evaluation rubrics that better distinguish expectations and levels of achievement.

Rubric Prompt: Using [assignment information; if using the assistant, can reference the file name, but if using ChatGPT or similar, state “the following assignment” and append to the end of the prompt] create a [number of levels] rubric using [points or percentages] that ranges from [define the standards or ranges]. The assignment is worth [total points]. [Optional: specify point or percentage values within the range]. [Define any additional requirements regarding points of evaluation—such as outcomes—or structure].

Example: Using the following assignment, create a 5-level grading rubric using points that range from A/Professional to F/Unacceptable. The assignment is worth 100 points. B-level work is worth 0.85 of the A-level, C-level is worth 0.75, D-level is worth 0.65, and F-level is worth 0 points. Separate the rubric into sections that follow the specified order of the document students create, with holistic scoring for two additional sections: “Grammar, Punctuation, Mechanics, and Style” and “Professional Design.” [paste assignment description].

One important caveat: language models like ChatGPT can’t always count. Check the math.
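Checking the math can itself be partly automated. The Python sketch below is one possible way to do it, under stated assumptions: the multipliers come from the example prompt above, but the section names, the point values, and the helper check_rubric are hypothetical, invented to show the idea. The rubric has been typed in by hand from a chatbot's response, with one deliberate error in the last section.

```python
import math

# Multipliers from the example rubric prompt: each level's points are a
# fraction of the A-level points for the same section.
LEVEL_MULTIPLIERS = {"A": 1.0, "B": 0.85, "C": 0.75, "D": 0.65, "F": 0.0}


def check_rubric(rubric, total_points):
    """Return a list of human-readable problems found in a generated rubric."""
    problems = []
    # The A-level points across all sections should add up to the assignment total.
    a_total = sum(levels["A"] for levels in rubric.values())
    if not math.isclose(a_total, total_points, abs_tol=1e-9):
        problems.append(f"A-level points sum to {a_total:g}, not {total_points:g}")
    # Every other level should equal the A-level points times its multiplier.
    for section, levels in rubric.items():
        for level, multiplier in LEVEL_MULTIPLIERS.items():
            expected = levels["A"] * multiplier
            if not math.isclose(levels[level], expected, abs_tol=1e-9):
                problems.append(
                    f"{section}, {level}-level: expected {expected:g}, "
                    f"found {levels[level]:g}"
                )
    return problems


# Hypothetical rubric transcribed from a chatbot response (values are made up).
generated_rubric = {
    "Introduction": {"A": 20, "B": 17, "C": 15, "D": 13, "F": 0},
    "Analysis": {"A": 40, "B": 34, "C": 30, "D": 26, "F": 0},
    "Recommendations": {"A": 20, "B": 17, "C": 15, "D": 13, "F": 0},
    "Grammar, Punctuation, Mechanics, and Style":
        {"A": 10, "B": 8.5, "C": 7.5, "D": 6.5, "F": 0},
    # Deliberate error: B-level should be 8.5 under the 0.85 multiplier.
    "Professional Design": {"A": 10, "B": 8, "C": 7.5, "D": 6.5, "F": 0},
}

problems = check_rubric(generated_rubric, total_points=100)
for problem in problems:
    print(problem)
```

The check flags only the B-level value in the Professional Design section. It verifies arithmetic, not whether the level descriptions make sense, so a human read-through is still required.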

Achieving “Good”

As with any tool or technology, generative AI presents opportunities and poses risks. While writing this piece, I questioned where these applications would fall according to D&D. It is my belief that instructional designers can apply these methods to achieve lawful to chaotic good. Determining lawfulness requires defining one’s responsibility regarding attribution and the extent of revision necessary to claim full or partial authorship. While I opt to provide at least partial attribution to the AI tools I use, others might perceive their responsibilities differently. For me, precise prompt generation and refining of prompts require both a level of subject matter knowledge and creative thinking that justify my taking some credit for the materials, but definitely not all.

In tandem with this consideration, however, are what I would consider three non-negotiable ethical standards for using generative AI in instructional design:

Verify and validate: AI chatbots aren’t always logical, nor are they fact-checkers. Check any generated text, both for correct math and for information that is related consistently and accurately.

Revise and refine for inclusion: The texts on which many of the large language models were trained are in English, and other languages (and to an extent, cultures) are presently underrepresented or not represented at all. Furthermore, the corpus of texts used for training can reflect harmful stereotypes, further impacting realistic representation. Be aware of this possibility, act vigilantly to identify such issues, and revise for inclusion.

Honor human interaction: Artificial intelligence, though trained on human-created materials, has no humanity, only coded directives. Whether creating or deploying AI-generated content, assess its emotional, social, and cultural impact.

References

Mangat, Sara, Andrea Dolph and Chris Morse. “Making It Real: Strategies to Make the Business Communication Course More Like Communicating in Business.” ABC 88th Annual International Conference. Denver: Association of Business Communication, 27 October 2023.

OpenAI. Models: How we use your data. 1 March 2023. 11 January 2024. https://platform.openai.com/docs/models/how-we-use-your-data.

—. Welcome to the OpenAI Developer Platform. n.d. 10 January 2024. https://platform.openai.com/docs/overview

Dr. Andrea L. Beaudin is a lecturer in Professional Communication at Texas Tech University. Her interests include UX, accessibility, information design, and instructional design. An unabashed nerd, she is always tinkering with technologies to explore their applications and benefits. She has only played Dungeons & Dragons once in her life.
