doi: https://doi.org/10.55177/tc124312

By Brett Oppegaard and Michael K. Rabby

ABSTRACT

Purpose: This study compares value expressions of intervention designers and participants in a hackathon-like event to research relationships between values and gamification techniques. Our research identifies and analyzes value expressions during a large-scale intervention at national parks for social inclusion of people who are blind or have low vision. Researchers and organizations can use our model to create common-ground opportunities within values-sensitive gamified designs.

Method: We collected qualitative and quantitative data via multiple methods and from different perspectives to strengthen validity and better determine what stakeholders wanted from the gamified experience. For each data source (a pre-survey, a list of intervention activities, and a post-survey), we analyzed discourse and coded for values; then we compared data across sets to evaluate values and their alignment/misalignment among intervention designers and participants.

Results: Without clear and focused attention to values, designers and participants can experience underlying, unintended, and unnecessary friction.

Conclusion: Of the many ways to conceptualize and perform a socially just intervention, this research illustrates the worth of explicitly identifying values on the front end of the design intervention process and actively designing those values into the organizational aspects of the intervention. A design model like ours serves as a subtextual glue to keep people working together. The model also undergirds these complementary value systems, as they interact and combine to contribute to a cause.

KEYWORDS: Values, Gamification, Audio description, User experience, Visual impairment

Practitioner’s Takeaway

- Values are invisible and often unarticulated but also powerful and ever-present. Identifying values in social-justice contexts and tailoring designs to align could lead to better organizational cultures.

- Designers must pay attention to the intervention, such as Audio Description training, but values should also be identified before, during, and after a public intervention to project, maintain, and track organizational culture, efficiency, and effectiveness and to prevent an unnecessary undercurrent of misaligned values.

- Gamification techniques can be designed to support, heighten, and even amplify values in organizational contexts, which could lead to better empirical understandings about their efficacy.

INTRODUCTION

Public places are constantly made and remade through emerging technologies. Although aspects of built public environments may unravel in that making/remaking process, widespread and diverse improvements in media accessibility illustrate how people are coming together for common causes in public and localized contexts. Improving accessibility can help us enrich the lives of people within a media ecosystem. In improving accessibility, technical communicators have important roles, alongside urban planners, architects, engineers, and others. They can contribute through interventions and research analyses to design and create more-inclusive media. In a research-and-development environment, technical communicators in design roles offer attention to shared values of diverse stakeholders in a community and can contribute to improved environments. Technical communicators, in turn, must ask how designers can account for individual values, and they also must address how to localize and integrate values fairly and inclusively across media ecosystems.

Our research team offered well-intentioned accessibility interventions in national parks for years before considering the individual values we were including, and unintentionally excluding, in our hackathon-style events, called Descriptathons. These interventions aim to localize media and improve public places through better Audio Description. We sought to transform visual media into audible media, primarily to benefit people who are blind or have low vision. Improving Audio Description requires an iterative and highly collaborative process: people with sight write and rewrite descriptions, people who are blind or have low vision (as ultimate consumers) listen to descriptions and provide feedback to enhance them, and researchers coordinate and assess the interplay between these stakeholders.

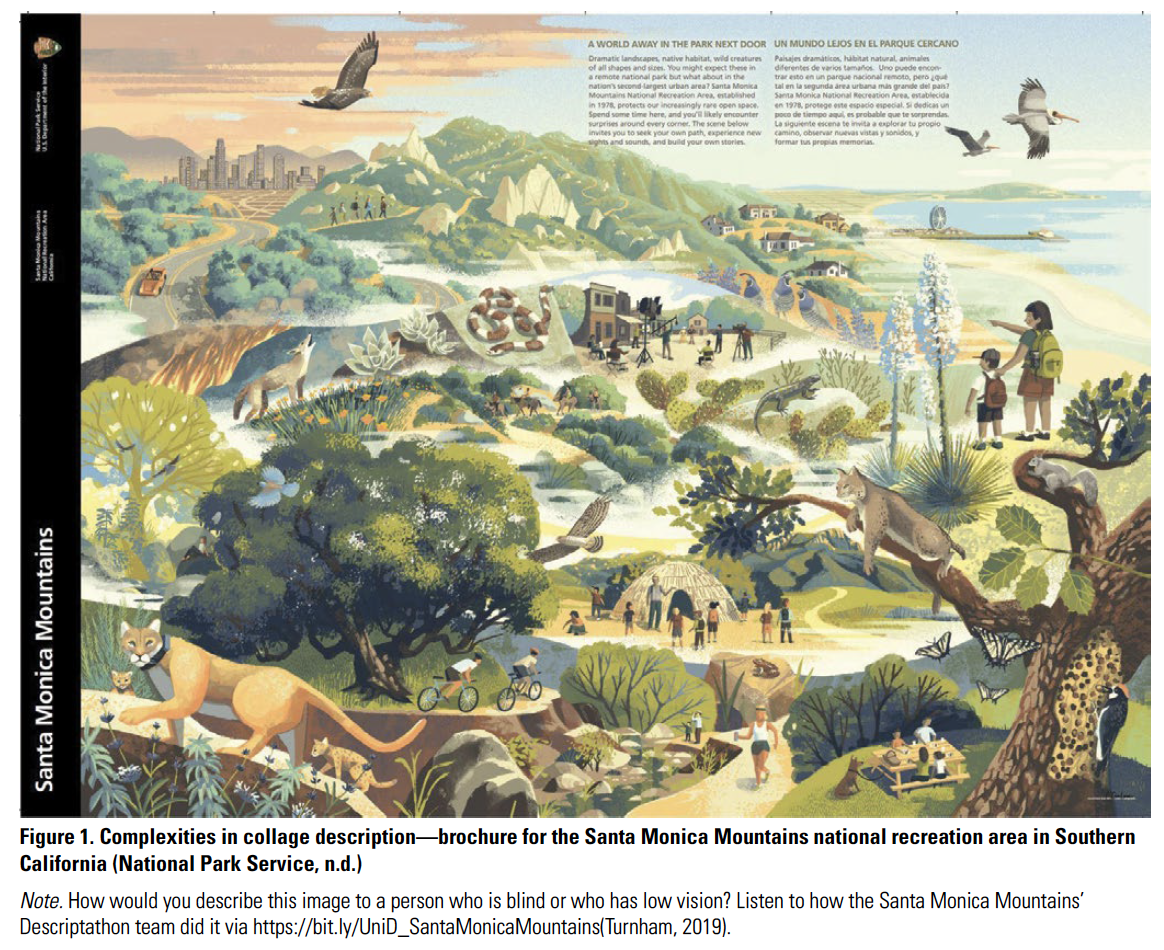

In a Descriptathon, our research team identifies a public place that needs more accessible media, invites that public place to participate, and starts those describing efforts by remediating its printed brochure, the key orientation discourse for any public place. For example, with the Santa Monica Mountains National Recreation Area in southern California, its brochure featured a collage of activities and images around the area (Figure 1), including maps that needed describing. During the Descriptathon, the describing team’s members, including staff and non-affiliated people who were blind or had low vision, worked together to learn about and to apply the foundations of Audio Description to the brochure. They initially practiced fundamentals related to typical genres of visual media, including collages. They also competed in friendly contests related to those fundamentals. By the end of the three-day workshop of the intervention, they had applied these new skills to the team’s brochure, including describing the collage shown in Figure 1, and they released that description to the public.

Audio Description typically is longer and more descriptive than alternative text (alt-text), which usually provides a quick description (1–2 sentences). The collage Audio Description by the Santa Monica Mountains’ team was broken into 15 distinct components with a total run time of 20 minutes. (Those descriptions can be heard on the project’s website, www.unidescription.org, and via the project’s free mobile apps.)

Using hackathons as our inspiration and gamification techniques as our mechanics, we designed these Descriptathons as friendly competitions to motivate and engage volunteers to make more and better descriptions. When volunteers share with teammates and the larger community, quality controls emerge organically through small-group dynamics and description contests, creating accountability and light, but competitive, tension among both individuals and teams. We also pay people who are blind or have low vision to perform independent checks on the descriptions after the Descriptathon, so that the descriptions meet basic professional standards before being released to the public. This “friendly competition” approach works for most people.

With this study, we considered our intervention designs, focusing our attention on individual values and whether they align among intervention designers and participants. This study generated fresh and profound insights. Most of the people involved volunteer for workshop activities, and researchers and practitioners alike benefit when the Audio Description process is motivating, engaging, and rewarding for those involved and when it connects across common values. Descriptathon participants are busy and have options for their time; they do not want to practice writing to be better writers or only to socialize. They want to devote their time, and to communally practice writing Audio Description, to help make more-accessible public places. As they contribute, they consider these public places as contexts, but they also maintain their individual values as subtext. Values, from this perspective, are defined as “trans-situational goals, varying in importance, that serve as guiding principles in the life of a person or group” (Schwartz et al., 2012, p. 2). Values are organized into a coherent system by each person through social and psychological conflict or congruity between values that people experience when they make everyday decisions. Values help to explain each individual’s decisions, attitudes, and behaviors. Universal values, at the top layer of importance, are grounded in the three basic needs that humans have: biological processes, social interaction, and survival. These needs are not discretely separated but part of a continuum of related motivations, which can be visualized (Schwartz et al., 2012) as concentric rings that are interrelated and reactive to other values in the system.

Scholars know little about relationships between or among values, especially localized cultural values, and gamification techniques (Usunobun et al., 2019). Many scholars hypothesize that understanding such values may help to unlock unrealized potentials in gamification techniques that relate to participant motivation and in a circular fashion may more deeply connect participants to those gamified approaches. In other words, gamification, the use of game-design elements in non-game contexts (Deterding et al., 2011), offers a promising solution to many social-justice dilemmas. Mounting evidence indicates that gamification in research improves the quality and quantity of data (e.g., Cechanowicz et al., 2013; Van Berkel et al., 2017). How individual values relate to this gamification effectiveness, though, has been an underdeveloped area of interest. Gamification studies fit well in technical communication (TC) because they involve core areas of concern, including how technical communicators manage information, develop systems, align values, create better usability and user experiences, and connect producers and consumers through interfaces. Game design also adds to TC interests with an emphasis on user testing, iterative design, and rapid prototyping (deWinter & Vie, 2016).

Instead of trying to simplify the inherent complexity of our project, we sought ideas at the core of what we were trying to accomplish. We circled back to the high-level concept of individual values. Specifically, we asked, what values do our participants, both those who see well and those who do not, share (among intervention designers, user/designers, and administrators) that durably engage them in the process of making, consuming, and circulating Audio Description?

Descriptathon Origins and Evolutions

For several years, our research team has been working with the U.S. National Park Service (NPS) to study and provide audio-described formats for its print brochures, including intense periods of open-access, open-source software development. During those processes, we created the robust technical tools and gathered the associated wherewithal to produce and disseminate and simultaneously research Audio Description. We thought we had overcome the toughest part of the research problem.

When we started this project, a suitable and no-cost production system for creating those connections did not exist. In response, we turned first to such immediate concerns: How could we design an accessible and useful system for this co-creation process, which gave both describers and audience members sufficient agency to collaborate and to find that collaboration worthwhile? Each step in the process raised new questions about the fundamentals of Audio Description, including best practices, usual collaborative processes, and overall efficacy. This new area of study offers vast unrealized potential (Fryer, 2016; Matamala & Orero, 2016), and our focus on the role of individual values in this work opens a fertile path for exploring.

When we first released these audio-describing tools in beta form, we turned to the NPS to test the tools with staff members at an urban recreation area, a natural landmark, and a historical monument. After three hours of phone orientation with each person about Audio Description and these tools, we asked them to make public places more accessible. That hands-off approach did not work well (Oppegaard, 2020). As these initial collaborators experimented with the tools and asked questions about Audio Description, we began to understand and appreciate what Flanagan et al. (2008) described in a playful metaphor as juggling a big project’s “balls in the air.” As a remedy, we considered an organizational focus on values to reduce tangential chaos.

That “balls in the air” metaphor (Flanagan et al., 2008) outlines an array of foreboding obstacles for researchers who want to study the human and social dimensions of technology. For this technology, we needed to not only build and maintain the software but also reconcile divergent and sometimes contradicting best practices, recruit describers and audience members to create and review, disseminate final products, attract audiences, and keep them engaged, while each image provided its own challenges. For ontological, epistemological, and philosophical reasons, we created the gamified hackathon-like Descriptathon (Oppegaard, 2020).

The UniDescription Project (UniDescription, n.d.) began in fall 2014 as a grant-funded initiative, with a concrete objective of audio describing 40 U.S. NPS Unigrid brochures. We have surpassed that benchmark, including work with 150+ NPS sites plus public places including sites managed by Parks Canada, National Parks UK, and U.S. Fish & Wildlife Service. Our research team always starts our collaborations by focusing on the description of the printed and silent site-orientation brochures, like those any visitor will find in a visitor’s center. These brochures contextualize their places and highlight attractions and amenities. They include images and often at least one map of extreme visual complexity (Conway et al., 2020) to orient visitors to the sites. Brochures may exist in alternative formats such as PDFs and sometimes include thinly developed alt-text, but without a screenreader-accessible format with accompanying Audio Description, these materials generally offer limited or no access to basic information for people who cannot see them or see them well. When our research team and government liaison began transforming the UniD concept into a public intervention in the mid-2010s, we created our designs with a foundation of greater scope, including attention to legal, ethical, and moral obligations related to media accessibility. From a practical perspective, we started by building the online tools, distributing them to staff at a few park sites, and waiting for the descriptions to materialize. That top-down-design approach was efficient but generally ineffective (Oppegaard, 2020).

In spring 2017, during the NCAA March Madness basketball tournament, we were looking to liven up our media-accessibility work. Descriptathon 1 (D1 in fall 2016) consisted of traditional online training—compressed and action packed but not “fun.” Inspired by the energy and enjoyment of the sports tournament, we reimagined our project as a serious game that was created to improve accessibility rather than for pure entertainment. This game, in other words, would be entertaining and engaging through its intellectualism, social-justice aim, and camaraderie while making public places more accessible. We could transform sites into teams that compete. From that context, a novel approach emerged. We remotely established teams of park staff from around the country, and we hired two consultants as representatives of our target audiences, knowing that a localized and inclusive approach would lead to better results and more directly serve the target audience.

The event generated a dramatic contrast to our initial outreach. The gamified Descriptathon, even at its rudimentary stages and with the same objectives as the original, was intriguing. It was exciting. Participants even called it “fun.” We could hear energy in participants’ voices, as they engaged in friendly competition. They earned acknowledgement. They described their brochures and enjoyed the process, becoming part of a bigger community with a higher purpose. After D2, we realized that we would never return to the original approach, but we knew we still needed to improve the Descriptathon idea.

We gradually made teams more diverse and inclusive, which also meant they became more complex to manage. In 2017, we welcomed members from the American Council of the Blind to participate, and we also now include members of the Blinded Veterans Association, Royal National Institute of Blind People (UK), Canadian Council of the Blind, and Helen Keller National Center for DeafBlind Youths & Adults. Teams (5–10 members each) have included hundreds of members from across the US, and we have worked internationally with public places managed by Parks Canada and National Parks UK. What connects different people from different places with different interests and agendas and keeps them engaged in the social-justice process of making the world a more-accessible place through Audio Description? Gamification clearly plays a role. But what fundamentally keeps the players playing?

In our ongoing grounded theory (Levitt, 2021) of the Descriptathon intervention, we identified shared values as a key contributing factor. We originally did not design the Descriptathon through a values-oriented perspective, were not focused on the values present in the work, and had not adapted the intervention to better align with shared values. Bluntly, we took shared values for granted. In retrospect, we did not understand how values inherently were woven into our intervention, and we failed to understand how they were being co-created and developed or ignored by both organizers and participants or how they were supporting or opposing larger objectives of the intervention.

This study’s values-focused analysis shifted our point of view. It illustrated the presence or absence of shared values and their roles in the interdisciplinary Descriptathon process. It also alerted us to opportunities for refining relationships between organizers, intervention designers, and user/designer participants. Our findings can help others, regardless of the intervention’s aim, by showing how values and gamified techniques can operate in tandem and can either align, propelling the work, or conflict, causing misalignment and difficult-to-diagnose friction.

Gamification of Values

Precisely defining the terms gamification and gamified techniques goes beyond the scope of this paper. We will describe how we are using these terms but aim to direct related debates to other venues. For our purposes, a game is a structured activity that follows certain rules and has a beginning and an end, and the players use those rules toward a goal (Mildner & Mueller, 2016). In that definition, no specific impetus exists to generate fun or motivate participants. Such Serious Games—as called since the 1970s (Abt, 1987; Wilkinson, 2016)—have proliferated in both consumer culture and academia and typically maintain an educational or social-justice emphasis (Breuer & Bente, 2010). A key distinction of gamification is that game-design elements are integrated into non-game contexts, which can be serious and real-world endeavors (Deterding et al., 2011). “Game elements” are not “a game” on their own but instead are parts of a game that could create fun (Mildner & Mueller, 2016). Principles include “competition” as a game element (Caillois & Barash, 1961), with game elements also including “challenge,” “fellowship,” “discovery,” and “expression” (Hunicke et al., 2004) as well as “rules,” “goals,” “interactivity,” “outcome and feedback,” and “problem solving” (Prensky, 2007). Given this multitude of ideas and lack of consensus, we label our Descriptathon in general ways, as a partially developed Serious Game that includes multiple game characteristics, elements, and mechanics, including rules, a beginning and end, teams, competition, judging, points, badges, leaderboards, and clear goals.

Our larger objective goes beyond arguing for the Descriptathon’s gameful nature. Instead, our interest lies in how its gamified elements and mechanics interact with our participants’ values and potentially increase motivation and engagement in ways that neither an academic focus on gamification nor one on values alone could accomplish. Along this line, with recent theorizing and discussion about gamification, scholars have attempted a small amount of empirical work to provide evidence of its efficacy (Seaborn & Fels, 2015). Gamification, as an approach, has shown identifiable limits in what can be expected from its use (González-González & Navarro-Adelantado, 2021). Yet, we now think that studies that combine values with gamification concepts could lead to novel contributions to establish empirical evidence of gamification’s efficacy. In these respects, our intent is to empirically examine values within a specific gamified context to motivate and engage participants.

Hackathon-like events inherently include game mechanics, such as teamwork, competition, and the timed pursuit of collective goals (Porras et al., 2018). They also provide an opportunity for people to collaborate and create new connections with benefits that extend beyond the short-term event (Briscoe & Mulligan, 2014). In a more-precise sense of the Descriptathon’s design, we envision its gamification aspects along the lines of punctuated play (Foxman, 2020), in which our focus is not on game design but on the players and how they play. By connecting gamification with values and studying those overlaps, we aim to dig deeper into what makes a Descriptathon successful and discover ways to create even richer experiences, combining everyday accessibility concerns with a punctuation of “meaningful, ludic moments” (Foxman, 2020, p. 55). This leads to the core of value-sensitive design (Sackey, 2020), the embrace of shared values in communal activities that minimize any participant’s marginalization.

Values

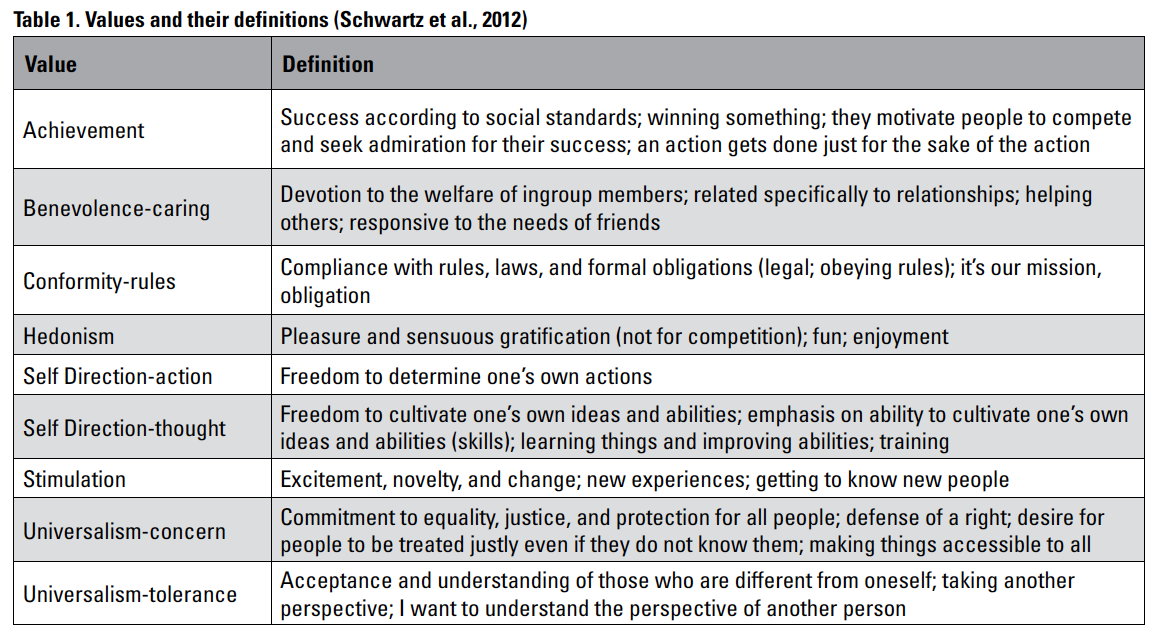

Values are another complex and highly contested arena of academic discourse. Again, our intent is not to settle these debates but to transparently outline our approach. This work was inspired and informed by Agboka (2013), Flanagan et al. (2008), and Usunobun et al. (2019) and grounded by adhering to and applying the Theory of Basic Individual Values (Schwartz et al., 2012). Values are an extension of scholarly attention to culture (Schwartz et al., 2012), developed extensively in a cultural context (Schwartz, 1992, 1999; Schwartz & Bilsky, 1987), starting with 10 basic values (Schwartz, 1992). These values were tested in an international setting (Schwartz et al., 2012), and that refinement process led to an expanded list of values, what is now known as the Theory of Basic Individual Values, which identifies common values defined by motivational goals. The theory includes 19 values, which we tested in our analysis of values present or absent in our latest Descriptathon intervention. These values include Universalism-concern, which is defined as a commitment to equality, justice, and protection for all people. Before starting our analysis, we correctly speculated that Universalism-concern would be present in the expressions of values we had collected, but we also discovered other values in play that were not as predictable.

In our analysis of the discourse of Descriptathon participants, we found that they expressed several values but did not express others, which led us to concentrate on values that participants expressed. This study also shows ways in which values are active and important in design decisions, including in gamified contexts, whether those are articulated or instead circulating in the subtext.

To distinguish values from other factors in our work, including the gamified techniques, we pursued the following research questions in a post-mortem analysis of D8:

RQ1: What values underlie the reasons volunteers participate in this project?

RQ2: What values underlie the reward system in this public intervention?

RQ3: How do the values expressed by participants and values expressed by intervention designers differ? How can these potential differences be addressed and realigned to create common ground?

This manuscript outlines the methods we used for the analysis, as well as our results and interpretations.

METHOD

We analyzed three data sets for the presence of value statements:

- A pre-Descriptathon survey, which asked about motivations for participation

- A during-Descriptathon list of recommended activities

- A post-Descriptathon survey about highlights and opportunities to improve the experience.

Data-collection processes were approved by the lead author’s Institutional Review Board and by the federal government’s Office of Management and Budget.

For D8, 111 adults (ages 36–65 years) participated in the remote event in the US, Canada, UK, and Nigeria. Participants were organized into 16 teams, plus an 11-person administrative group, with seven administrative members also joining teams and co-creating Audio Descriptions. All identifiable data from the administrative group members were removed from our samples before analysis, to focus on participant responses without conflict of interest.

D8 started five weeks before October 26, 2021, when recommendations for prep activities were first released; recommendations included instructions to RSVP to D8 Calendar invites, an invitation to peruse our library of online resources, and a suggestion to listen to the project’s mobile app. Each ensuing week, participants received activities to help them prepare for the intervention and to excite them about D8. Each day of D8, their “To-Do List” contained new activities to create a dynamic unfurling of the event, starting slowly, building into the intense competition phase, and culminating in the championship round for the vaunted trophy, a coconut playfully painted and personalized to celebrate the winners.

In terms of positionality, a constructivist and inductive approach (Levitt, 2021; Rennie, 2000) was undertaken to analyze data. The researchers kept duties separated to establish reliability in our findings. The first author on this manuscript (who is sighted) created the Descriptathon intervention idea in 2017 and has designed and managed each of its iterations. He collected the data in D8 and handed raw data to a two-person team who independently analyzed it. The second author, who is also sighted and served as the lead coder, has not participated in any Descriptathon and joined this study to provide an independent analysis of the D8 data. The third member of the research team, who is blind, has participated in multiple Descriptathons, including D8; she did not have a role in its design and organization or in the creation of the codebook but served as a paid research assistant. Her role involved independently coding data, based on the provided codebook, and working with the second author to attain reliability in that analysis process. The administrative team also included representatives from various disciplines including education, history, and public lands management.

We created the gamified reward system for this intervention, providing each participant a personalized, dynamic, and online to-do list. As a part of the list, each activity in the intervention was given point values (established by the organizers), and participants accumulated a score for individual efforts as well as team points, earned through collective activity (e.g., submitting a single description crafted in a collaborative session). The D8 website included a leaderboard for individuals and teams, with the top 20 names and points shown (to reward top contributors yet without showing and potentially embarrassing people who were contributing less). All participants could see their own scores on their individualized event home pages, which only they could access. All team scores were posted on a separate leaderboard, allowing groups to compare their collective efforts. At the core of these scoring systems was the activity list, where participants were potentially mobilized and motivated by point values.
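The scoring logic described above can be illustrated with a minimal sketch. The activity names, point values, and function names below are hypothetical, because the organizers' actual point values and software are not given in this article; the sketch assumes only that points are summed per individual or per team and that a leaderboard shows the top 20 entries.

```python
from collections import defaultdict

# Hypothetical point values; the real values were set by the organizers
# and are not reported in this article.
ACTIVITY_POINTS = {"rsvp": 5, "practice_description": 10, "team_description": 25}


def tally(records, points=ACTIVITY_POINTS):
    """Sum points per name from (name, activity) records.

    A name can be an individual participant or a team, so the same
    function can serve both the individual and the team scores.
    """
    totals = defaultdict(int)
    for name, activity in records:
        totals[name] += points[activity]
    return dict(totals)


def leaderboard(scores, top_n=20):
    """Return the top_n (name, points) pairs, highest score first.

    Ties are broken alphabetically by name (an assumption). Entries
    below the cutoff are simply not shown.
    """
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return ranked[:top_n]
```

Limiting the public list to `top_n` entries mirrors the stated design choice of rewarding top contributors without displaying people who contributed less.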

We collected data from event registration until the completed brochure description was shared with public audiences. As a part of data collection, we asked people why they wanted to participate in D8. Of the eligible participants, 80 wrote separate comments with reasons for participation. Using Schwartz et al.’s (2012) values and Krippendorff’s (2019) protocols, we examined the underlying values in the responses. Many of these values, such as those close to security and power, did not have relevance here and were removed. After reducing values to 10, through an initial single-coder review of data, we added a second coder to establish reliability of the codebook with its value codes.

After further reviewing and discussing how values emerged in the participants’ responses, we reduced the list to seven values present in most comments. Once the dataset was coded, two uncoded statements remained. After discussing amongst coders, we added an eighth value (Self Direction-action) back into the codes and applied it to those two statements (later, we added a ninth value, identified and discussed further in the during-Descriptathon list of recommended activities). The coders agreed that the responses could have more than one value. For example, “This event seemed like a fun opportunity to improve my communication skill and more importantly to level up our whole organisation’s output with regard to those needing text descriptions to access information” demonstrates two values: Self Direction-thought (the chance to improve oneself and one’s skills) and Universalism-concern (the chance to help others). For the first round of content analysis, to test reliability, both coders independently labeled 20 statements, using Schwartz et al.’s (2012) values in a closed analysis, allowing double and triple coding. That exercise resulted in 26 value codes being applied, meaning multiple statements had more than one code but each statement had at least one code. Intercoder reliabilities were sufficiently strong, as indexed by Cohen’s kappa (κ = .71); the percentage of agreement between the two independent coders was 76.9%. The remaining statements were then analyzed by the second coder, with instructions to leave any statement uncoded if none of the values on the pared-down list of seven applied.
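For readers less familiar with the two reliability indices reported above, the following minimal sketch shows how percent agreement and Cohen's kappa are computed for two coders. It assumes one code per coding unit; how multiply coded statements were handled in the reported figures is not specified here, so this sketch is illustrative and is not a reconstruction of the study's calculation. The function names are our own.

```python
from collections import Counter


def percent_agreement(coder_a, coder_b):
    """Share of units on which the two coders assigned the same code."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must label the same, non-empty set of units.")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return matches / len(coder_a)


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(coder_a)
    p_observed = percent_agreement(coder_a, coder_b)
    freq_a = Counter(coder_a)
    freq_b = Counter(coder_b)
    # Chance agreement: probability that both coders pick the same code
    # if each assigned codes at random according to their own base rates.
    p_expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if p_expected == 1:
        raise ValueError("Kappa is undefined when chance agreement equals 1.")
    return (p_observed - p_expected) / (1 - p_expected)
```

Kappa is lower than raw agreement whenever some agreement would be expected by chance, which is why the two indices are reported side by side.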

FINDINGS

The values that emerged from analysis and their definitions are listed in Table 1.

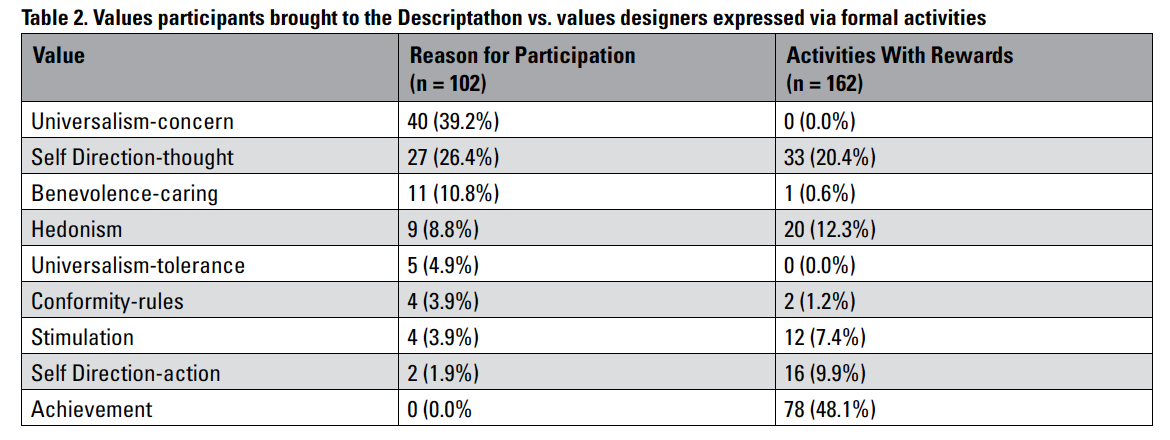

Values emerged at different points in the Descriptathon process, dependent on the stakeholder’s interests, revealing a complexity to the dynamic between values and gamification that deserves more attention.

Pre-Descriptathon Survey Findings

All D8 participants were asked to register at least one month before the event and to answer a variety of demographic and organizational questions, including a values-oriented question, added to our survey with the intent to illuminate the potential presence of values. We asked, “Reason(s) You Wanted to be Involved: To help us understand our Descriptathoners better, and to serve you better, could you please tell us the primary reason (or reasons) that motivated you to join this Descriptathon?”

Responses to this survey were voluntary. The response rate was about 72.0%. Of the 80 statements received in response, 61 had one value code, 16 had two value codes, and three had three value codes (102 identified value codes). Though the majority of statements contained one clearly implied value, multiple values could emerge in the data. For example, the following statement had values of both Self Direction-thought and Conformity-rules: “To better understand and practice AD, and accept the opportunity to help us audio describe our Ala Kahakai NHT brochure to help us towards 508 compliance and better serving all audiences.” Another statement had three values—Self Direction-thought, Stimulation, and Hedonism:

I love the idea of audio describing the world and the mission and purpose of the UniDescription Project. My audio description skills improved the last time I participated and it was really challenging and fun. I worked alone last time and am looking forward to collaborating with a team this year.

In the three statements that had three values, Self Direction-thought and Hedonism were always two of the values; people participated because they wanted to improve their skills and have fun, alongside a third motivating value. For example, one participant noted Self Direction-thought (“I’m also excited about learning UniDescription to improve my communication skills”), Hedonism (“It brings me joy that I could make a difference”), and Benevolence-caring (“… to support an initiative that can help disabled communities for so many for years to come”).
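The tallies reported for this survey can be checked with simple arithmetic. The short Python sketch below uses only the counts stated in the text; the variable names are illustrative.

```python
# Number of statements by how many value codes each received (counts from the text).
statements_by_code_count = {1: 61, 2: 16, 3: 3}

total_statements = sum(statements_by_code_count.values())
total_value_codes = sum(
    n_codes * n_statements
    for n_codes, n_statements in statements_by_code_count.items()
)
# Percentage of statements that carried more than one value code.
multi_coded = total_statements - statements_by_code_count[1]
multi_coded_percent = 100 * multi_coded / total_statements

print(total_statements)     # 80
print(total_value_codes)    # 102
print(multi_coded_percent)  # 23.75
```

The totals match the 80 statements and 102 value codes reported above, with just under one quarter of statements expressing more than one value.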

Nearly two thirds of the values that emerged in this Pre-Descriptathon Survey data were either Universalism-concern or Self Direction-thought (see Table 2). Among the eight values that motivated people to participate in this project, these two merit more discussion.

The most frequently identified value among the D8 participants was Universalism-concern. From this perspective, people inherently deserve these inalienable rights, which differ from laws. Laws only require baseline obligations; from a broader view, public places and resources should be accessible to all people, and all people should receive equal treatment. Sometimes, this value emerged in direct statements, such as “To provide the most accessible information for our visitors.” In other cases, participants noted a duty at their workplaces to improve accessibility: “To make the newly re-done Whitman Mission NHS [National Historic Site] brochure as accessible as possible” and “To help make my park’s brochure more accessible and versatile for other applications if desired.”

Other responses in which this value emerged focused on accessibility as part of the participant’s understanding of the world and their own personal growth. In one instance, the participant felt it important to put aside personal needs/desires/fears to improve accessibility for others: “I did not want to do this at first because writing and describing things are not easy for me. We have to go beyond our comfort zones to make a more inclusive environment for all.” More often, participants noted that their experiences drove them to improve accessibility for all, for example:

As someone who has experienced vision loss in the past couple of years [sic] I’m navigating a world in new ways. Accessibility of public spaces and places of interest can be patchy at best [sic] and I was really keen to be a small part of improving audio description and accessibility of places which sighted people are so readily able to enjoy and “To make a difference. As I plan to study further [and] with low vision I would like more things to be accessible.”

The other frequently emerging value was Self Direction-thought, a value that focuses on freedom to cultivate one’s own ideas and abilities. Often, these statements referenced direct skill acquisition (e.g., “Gain additional skills in audio description and participate in a worthwhile project” and “To improve my audio description skills”). Other statements of this value involved skill acquisition in service of job improvement (e.g., “I work in the A/V department and our videos require Audio Description [sic] and I would like to learn all that I can about it and get better at it,” and “This project would help me understand the process for audio describing and why it is important [sic] and my supervisor thought it would be a good learning opportunity”). Much like Hedonism, another inwardly focused value, Self Direction-thought often was mentioned with other values: in 13 of the 27 Self Direction-thought codes, participants signaled it alongside other values.

The remaining values did not come up as frequently as Self Direction-thought and Universalism-concern. Benevolence-caring emerged in 11 responses. This value encompasses a devotion to the welfare of in-group members and appears in statements such as:

As a person who has limited amounts of vision, I know how important it can be to have audio descriptions of things that I can’t see. I want to give back since I have some vision and can provide input based on my personal experiences or those of my peers.

In a few instances, participants noted relational and connective aspects of the experience, such as “. . . I so enjoyed working with everyone [sic] and I wanted to take this opportunity to help another team” and “. . . I hope to get acquainted with another group of wonderful parks people, in this case some folks from Louisiana. . . .” The expressed desires to connect and participate were categorized as Benevolence-caring, though not a perfect fit, and we eventually consulted with Schwartz directly about this issue (presented in the Discussion section).

Hedonism, which encompasses pleasure and sensuous gratification (including mentions of fun and enjoyment), was often cited with other values: of the nine times Hedonism was identified, seven occurred alongside other values. Even in the two statements where it received the sole value code—one being “I have been part of this since Descriptathon 5, and it is educational and somewhat enjoyable”—the participant cited peripheral reasons (e.g., educational).

Universalism-tolerance, the desire to understand the perspectives of other people, emerged in statements such as, “I like to help those with vision impairment to better understand what is before them.” In three of five statements, this value was one of a cluster of values.

Stimulation as a value was often presented in a straightforward manner, such as “I was intrigued by the project and wanted to be part of it” and “. . . it sounded very interesting. I’m up for a challenge to see what this is all about.”

Conformity-rules focuses on compliance with rules and laws (a sense of obligation) and appears in declarative statements such as, “Since 2000, I’ve been involved in federal government efforts to ensure that information and communication technology (ICT) is accessible and ‘Section 508-conformant’. . . .”

Regarding Self Direction-action, two statements needed further discussion, and this code was added in response. The two coders decided that the outlier statements (“I am blind” and “As a blind woman, AD plays a significant role in enabling my participation in, and enjoyment of, the world around me. It brings visual texture, depth and colour to my generally dark world helping me think visually, see and experience the world as multidimensional”) both fit the value of Self Direction-action. This value focuses on freedom and independence as well as the ability for one to have agency in making decisions.

Descriptathon Activities List Findings

The website hub that centralized and organized the online D8 event included a dynamic to-do list. For the most part, participants used this to-do list to guide what, how, and when they completed tasks. This list was generated by the event’s organizers, without an opportunity for participants to influence it, so we reflectively wondered what values we encoded in the list and how well it matched the values expressed by our participants.

We were interested in how the values in our Descriptathon reward system matched our participants’ stated values. We sought to analyze connections between the rewards (points) and the values participants came into the Descriptathon hoping to activate; RQ2 explored these values. D8 had 162 distinct activities during which participants could earn points. Most of those activities were generalized and open for anyone to claim, but other activities were designed to reward blind or low-vision participants for doing extra work, such as judging descriptions in the tournament. A few rewards were added at the last minute, rewarding particular people based on their specific situations (e.g., persevering through the training despite an urgent family health crisis, labeled “Grit Points—When faced with adversity, does she quit? No! She digs in, with grit.”).

We started the second stage of data collection with a codebook containing the eight values identified in pre-Descriptathon survey results. After an initial perusal by the lead coder, we added the value of Achievement to the culled list, resulting in nine relevant values for this data. Every activity could be considered an “achievement,” which would dilute meaningful information. Thus, the coders looked inside the content of each activity to better understand what it represented. The second coder was employed to establish reliability. As she was familiar with the values typology, and as a blind participant in D8, she brought insights to coding these statements that helped to clarify intent, interpretations, and practical implications of the activities. Unlike the first dataset, which had statements with multiple value codes, all D8 activities (the second phase) contained and were coded as representing a single value.

To establish reliability for this dataset, 30 activity descriptions were analyzed independently by both coders. Intercoder reliabilities were sufficiently strong, as indexed by Cohen’s kappa (κ = .62); the percentage of agreement between the two independent coders was 72.3%. As many of the activities were repetitious (e.g., “Training—Read the About Page,” “Training—Read the Academy Page”), a single difference on how to label a code could result in a large reliability discrepancy. After the above reliabilities were established, the coders conferred on how to handle particular statements and how they could relate to specific value(s). The second coder then independently coded the remaining statements.

In this dataset, the newly added value of Achievement accounted for nearly one half of the value codes for the badges (78 total). Achievement values include success according to social standards, such as winning something. Achievement values motivate people to compete and seek admiration for their success. In other instances, an action is done for the sake of the action or completion. Most badges rewarded people for completing a specific requirement, such as “Roll Call: Everyone Provided Audio-Described Profile Images” and “Completed the D8 Survey of Participants.”

Self Direction-thought accounted for 33 (20%) of the value codes, which primarily rewarded people for completing some type of optional training (e.g., “Training-Description Practice” and “Training Best Practices”).

Hedonism emerged in the activities that rewarded sharing things digitally, given the pleasure people experience when sharing on social media and receiving feedback such as likes and views (e.g., Cino et al., 2020; Quan-Haase & Young, 2010; Yoon et al., 2021). These activities include “Shared Descriptions—with Instagram Audiences,” “Shared Descriptions—with Reddit Audiences,” and “A Judge Ready to Share—Descriptathon 8: Round 1, An Artifact (The Challenge) Descriptions.”

Self Direction-action values include instances when people exercise their choice, freedom, and autonomy in decision-making, which most often occurred in instances when they judged—e.g., “Judged Round 3-Third Match” and “Judged Round 4-First Match”—or when they directly helped to decide an outcome—e.g., “Tie-Breaking Skills: You Helped Us Break a Tie in the D8 Tourney (Thank you!).”

Stimulation values emerged through engagement (e.g., “Engagement—Large-Group Discussion Contribution” and “Engagement—Creativity”).

Two other values appeared but only in negligible amounts. First, Conformity-rules involved activities acknowledging adherence to rules and social norms: “RSVPed the D8 Calendar Invites” and “Engagement—Deadlines.” Second, Benevolence-caring emerged once in the aforementioned special category. Unexpectedly, despite being central to the values expressed in the pre-Descriptathon survey, Universalism-concern and Universalism-tolerance values were not reflected in D8 activity badges.

We established RQ3 to connect and compare the values that motivate people before the Descriptathon with the values the Descriptathon rewarded. Based on RQ1 and RQ2, the values that underlie why people volunteer differed from the values rewarded by the activities on the D8 website. A chi-square test further confirmed this observation: χ²(8, N = 264) = 145.54, p < .001, V = .74.
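As a hedged consistency check on the effect size, Cramér’s V can be recomputed from the reported chi-square statistic and sample size. The sketch below assumes a 9 × 2 contingency table (nine values by two datasets), which is an inference from the reported 8 degrees of freedom rather than something stated in the text; the function name is illustrative.

```python
import math


def cramers_v(chi_square, n, n_rows, n_cols):
    """Cramér's V = sqrt(chi2 / (N * min(rows - 1, cols - 1)))."""
    smaller_dim = min(n_rows - 1, n_cols - 1)
    return math.sqrt(chi_square / (n * smaller_dim))


# Reported values: chi-square = 145.54 and N = 264, which matches the sum of
# the 102 survey value codes and the 162 activity value codes.
# Assumed table shape: 9 values x 2 datasets.
n_rows, n_cols = 9, 2
degrees_of_freedom = (n_rows - 1) * (n_cols - 1)
v = cramers_v(145.54, 264, n_rows, n_cols)

print(degrees_of_freedom)  # 8
print(round(v, 2))         # 0.74
```

Under that assumption, the recomputed degrees of freedom and effect size agree with the reported statistics.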

The closest match between the values that participants brought to D8 and the values in the activities was Self Direction-thought. Hedonism also aligned, though not as closely. However, Universalism-concern, the most common value indicated before the event, was not present in D8’s activity badges. Likewise, no participants indicated Achievement as a value, and yet almost one half of D8 rewards appealed to it.

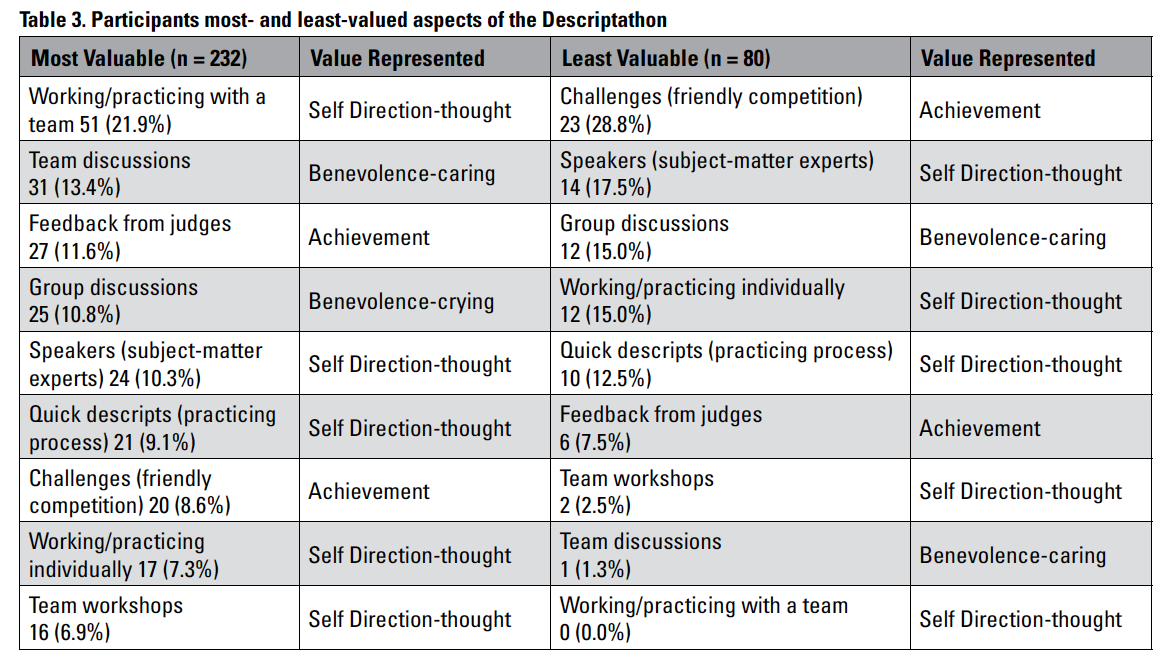

Post-Descriptathon Survey Findings

On the final day of the Descriptathon, we surveyed participants on their experiences. We asked participants to rate nine categories of activities that organizers considered core to the event as “most valuable” or “least valuable,” but with the option to choose more than one category to put into those designations. The two analysts independently coded the nine categories based on values, coming to complete agreement (see Table 3).

The friendly competition Challenges were the most polarizing of these event categories, with about one fifth of participants saying those were the most valuable and one fifth saying those were the least valuable activities. This category was labeled as Achievement. Those friendly competition Challenges also demotivated a large number of participants.

Working and practicing with a team received the most mentions for “most valuable,” labeled as Self Direction-thought. Team discussions ranked second on the list, labeled as Benevolence-caring, and feedback from judges ranked third, coded into the Achievement category. In contrast, subject-matter-expert speakers (Self Direction-thought), large-group discussions (Benevolence-caring), working independently (Self Direction-thought), and Quick Descript practice sessions (Self Direction-thought) were rated as “least valuable” but only by about 10% of participants. In that comparison, between most and least valuable, a general sentiment favored small-group work and individualized feedback. On the other end of the spectrum, participants seemed less inclined to favor tasks approached at a large scale or done independently.

Gamified contests sometimes generated tension and simultaneous engagement. One participant wrote:

Consider making the competition aspect optional, because points aren’t necessarily motivational to everyone. . . . I think it may add stress or frustration, at least it did for me and a couple of people on my team. It doesn’t correlate with either the quality of the work I want to do or the quality of my experience (learning something new with a really impressive group of people, having fun and engagement).

In contrast, in reference to the Challenges, another participant wrote:

Super fun and stressful! Love love love it! I know many people complained about the time stressors. I loved it because it forced you to focus on the important things quickly. This eventually helps people realize that audio description doesn’t have to take a long time. It’s a quick accommodation that means so much! So, keep the time crunch!

Thus, participants had different perspectives of the competitive quality of the event structure.

DISCUSSION

The diverse and cloistered areas of knowledge involved in an interdisciplinary project like ours, plus the epistemological methodologies of those different disciplines, create a chasm that is difficult for any research team to navigate (Flanagan et al., 2008). Around the rim of that deep and dark hole, disciplines of all sorts stake claims, near each other but distinctly separate, while rarely venturing into the center together. By positioning participant values as a design concern co-equal to computer programming, chi-square construction, and the other constructivist considerations in such a project, researchers are forced to explore ideas beyond typical scientific and engineering constructs while also supporting those grounded concerns.

Flanagan et al. (2008) express a commitment to values as purposes, ends, or goals of human action on their own but also acknowledge the concern that not all values are universal and easy to accommodate. Sometimes participants have conflicting values. Many values are localized in a particular construct and context; therefore, the design of an intervention requires localization to align with those values. From that perspective, values are conceptualized in a hierarchy of a thin set, which all humans share, and a thicker set that applies to particular contexts. Similar sentiments and concerns about effective localization strategies have been raised and debated in technical communication circles, parallel with a social-justice turn in the field this past decade (e.g., Agboka, 2013; Getto & Sun, 2017; Shivers-McNair, 2017).

Although our Descriptathon intervention succeeded from a variety of external perspectives, including inspiring the production of new Audio Description at public places throughout the US at NPS sites, this analysis shows that we can improve in matching participants’ values with the objectives of our media-accessibility initiative. In terms of organization, managers of public places approach us about improving access to their sites. We do have a few sighted people who repeat the experience and participate multiple times, but for most, it has been a one-time event. That makes us wonder, as organizers, if a deeper focus on values and small-group team building, rather than the achievement of finishing brochure descriptions, could build a community committed to long-term participation. For the people in the Descriptathon who are blind or have low vision, we also have a small core of devoted contributors, but for each Descriptathon, we must exert energy to recruit new participants from our target audience. Because they already are well versed in Audio Description, some repeat participants may see our learning modules on that topic as remedial or unnecessary. And when the production process begins, they are laboring in ways that do not necessarily tap into common Universalism-concern and Self Direction-thought values that might have greater appeal. By identifying the Descriptathon’s current Achievement focus, we can understand better when participants develop a been-there, done-that perspective on the event. As an alternative, if we shift our focus in D9 toward participant desires and values, we hypothesize that we can forge longer-lasting relationships, improve retention, and reduce the efforts in each iteration to recruit new people.

To make this shift, we intend to rethink the Descriptathon process, from our initial recruiting messages to our event-ending survey. The key disconnects shown by this research are illustrated in two dramatic disparities on opposite ends of Tables 2 and 3. Our participants came to D8 with a relatively high percentage of values oriented toward Universalism-concern, and we offered them zero activities to engage with that value. On the other side, about one half of D8 activities were based on Achievement values, but none of our participants joined the Descriptathon with Achievement values in mind.

Another key finding of this study was the importance of the sociability aspect of the Descriptathon. D8 was conducted remotely during the COVID-19 pandemic, during which many people felt isolated. The pandemic might have skewed attention to some degree toward this aspect, but that said, volunteers expressed a high interest in participating in something greater than themselves, favoring teamwork, collaboration, and small-group interactions. This finding led us to examine the role of values more closely in sociability and the possible gaps in the current values conceptualization. We ended up coding most of the statements in this area as Benevolence-caring, but the statements did not always seem to fit how Schwartz et al. (2012) defined the value and appeared in a gap between values. We contacted Schwartz about our findings, and he acknowledged that sociability, as we defined the motivational component, was:

Probably the closest fit, but people might have other motivations that you would also describe as sociability. A desire to connect (not “need,” which does not refer to a value) with people may sometimes also be motivated by security or conformity or tradition. Even valuing Achievement may motivate a desire to collaborate when collaboration promotes one’s achievement. (S. Schwartz, personal communication, February 8, 2022)

In that respect, the gamification elements of the Descriptathon could help to support values around sociability elements to bridge motivational gaps. If the Descriptathon is a game, then it is inherently social, and participants are (and need to be) socialized to play it. For example, they must learn the rules, work with teammates, and collaborate toward a common goal. As such, socializing is a critical part of establishing high-quality gameplay (Adams, 2014). Social factors create a fun experience, build team spirit, and give participants agency that they cannot possess on their own because they can collaborate on activities that they could not complete by themselves (Mildner & Mueller, 2016). However, most of the commonly used gamification techniques appear oriented toward personal achievement, personal enjoyment, and fun or rewarding independence of thought and action, and relatively few of them reinforce or reward collaboration, collective effort, or social inclusion (Usunobun et al., 2019, p. 5). We wonder what would happen if a gamification approach were more closely integrated and aligned with values research and its activities tailored more toward values expressed by participants. In the case of the Descriptathon, what would happen if we designed the experience to reflect and emphasize the most common values that participants bring to the event, rather than primarily imposing our Achievement-oriented values onto the participants at large? We intend to answer that question in D9.

In the bigger picture, particularly for readers who do not study Audio Description or host Descriptathons, this research model (which gathers individual values of participants at the entry point to any organized activities and then studies ways in which those sentiments are expressed and aligned, sufficiently or not, with the individual values of participants) could be applied to any type of workshop, training, classroom, or committee. We perceived that quality of work relates to alignment of values, meaning that when individual values are in alignment, the quality of the work produced by the individuals and the teams is higher. However, we did not explicitly test that relationship, and other factors complicate description outcomes. That relationship, therefore, merits further testing.

We did not test the efficacy of gamification in general. Gamification as an approach has been both widely dismissed and vigorously embraced, in an intellectual clash of loosely defined abstractions that seem to avoid direct and empirical comparisons. We recommend those discussions ground themselves in practice-based research to truly determine the potential of gamification. Having tried this intervention once without gamification, and then seven times with gamification, our research team is voting with our design choices, fueled by the mostly positive responses of our participants. Gamification has potential. But the reality is more complicated.

To successfully and effectively gamify an event, participants must have a reason(s) to play the game. Not everyone wants to play, even if they support the cause. Gamification, from that viewpoint, can be a frivolous distraction. Yet, this research into individual values shows more to the dynamic.

Values as a variable not only add another layer of gamification insights but also add to the game’s potency or deficiencies. If intervention designers can know shared values of their user/designer participants and then integrate opportunities to meaningfully express those values into the experiences, then the ramifications of such insights transcend any particular application of the idea or use-case scenario. Across user-experience studies, values could be identified with other variables and examined to better understand what people do and why. From what we have learned, game aspects, at least in terms of a social-justice intervention, need to be both fun and focused on the higher purpose for people to willingly play along.

ABOUT THE AUTHORS

Dr. Brett Oppegaard is an associate professor in the School of Communications at the University of Hawai’i at Mānoa in Honolulu, HI. His scholarship focuses on the study of media accessibility and locative media. In addition to Technical Communication, his research has been published in IEEE Transactions on Professional Communication, Journal of Technical Writing and Communication, and Communication Design Quarterly. He can be reached at brett.oppegaard@hawaii.edu.

Dr. Michael K. Rabby is a scholarly associate professor at Washington State University, Vancouver, in the Creative Media and Digital Culture Program. His research explores the intersections of the human experience with technology in a variety of contexts, including online relationships and mobile app usage. He has published research in the Journal of Social and Personal Relationships, Military Behavioral Health, Digital Journalism, and Communication Studies. He can be reached via michael.rabby@wsu.edu.

REFERENCES

Abt, C. C. (1987). Serious games. University Press of America.

Adams, E. (2014). Fundamentals of game design. Pearson Education.

Agboka, G. Y. (2013). Participatory localization: A social justice approach to navigating unenfranchised/disenfranchised cultural sites. Technical Communication Quarterly, 22(1), 28–49. https://doi.org/10.1080/10572252.2013.730966

Breuer, J. S., & Bente, G. (2010). Why so serious? On the relation of serious games and learning. Journal for Computer Game Culture, 4(1), 7–24.

Briscoe, G., & Mulligan, C. (2014). Digital innovation: The hackathon phenomenon. Creativeworks London, 6, 1–13.

Caillois, R., & Barash, M. (1961). Man, play and games (M. Barash, Trans.; 2nd ed.). University of Illinois Press. (Original work published in 1958).

Cechanowicz, J., Gutwin, C., Brownell, B., & Goodfellow, L. (2013). Effects of gamification on participation and data quality in a real-world market research domain. In Proceedings of the First International Conference on Gameful Design, Research, and Applications (pp. 58–65). https://doi.org/10.1145/2583008.2583016

Cino, D., Demozzi, S., & Subrahmanyam, K. (2020). “Why post more pictures if no one is looking at them?” Parents’ perception of the Facebook Like in sharenting. The Communication Review, 23(2), 122–144. https://doi.org/10.1080/10714421.2020.1797434

Conway, M., Oppegaard, B., & Hayes, T. (2020). Audio description: Making useful maps for blind and visually impaired people. Technical Communication, 67(2), 68–85.

Deterding, S., Dixon, D., Khaled, R., & Nacke, L. (2011, September). From game design elements to gamefulness: defining “gamification.” In Proceedings of the 15th International Academic MindTrek Conference (pp. 9–15). https://doi.org/10.1145/2181037.2181040

deWinter, J., & Vie, S. (2016). Games in technical communication. Technical Communication Quarterly, 25(3), 151–154. https://doi.org/10.1080/10572252.2016.1183411

Flanagan, M., Howe, D., & Nissenbaum, H. (2008). Embodying values in technology: Theory and practice. In J. van den Hoven & J. Weckert (Eds.), Information technology and moral philosophy (pp. 322–353). Cambridge University Press.

Foxman, M. (2020). Punctuated play: Revealing the roots of gamification. Acta Ludologica, 3(2), 54–71.

Fryer, L. (2016). An introduction to audio description: A practical guide. Routledge. https://doi.org/10.4324/9781315707228

Getto, G., & Sun, H. (Eds.) (2017). Localizing user experience: Strategies, practices, and techniques for culturally sensitive design [Special section]. Technical Communication, 64(2), 89–94.

González-González, C. S., & Navarro-Adelantado, V. (2021). The limits of gamification. Convergence, 27(3), 787–804. https://doi.org/10.1177/1354856520984743

Hunicke, R., LeBlanc, M., & Zubek, R. (2004, July). MDA: A formal approach to game design and game research. In Proceedings of the AAAI Workshop on Challenges in Game AI, 4(1), 1722–1727.

Krippendorff, K. (2019). Content analysis: An introduction to its methodology. Sage.

Levitt, H. M. (2021). Essentials of critical-constructivist grounded theory research. American Psychological Association. https://doi.org/10.1037/0000231-000

Matamala, A., & Orero, P. (2016). Researching audio description: New approaches. Palgrave Macmillan.

Mildner, P., & Mueller, F. (2016). Design of serious games. In R. Dörner, S. Göbel, W. Effelsberg, & J. Wiemeyer (Eds.), Serious games (pp. 57–82). Springer. https://doi.org/10.1007/978-3-319-40612-1_3

National Park Service. (n.d.). Santa Monica Mountains [brochure].

Oppegaard, B. (2020). Unseeing solutions: From failures to feats through increasingly inclusive design. In J. Majewski, R. Marquis, N. Proctor, & B. Ziebarth (Eds.), Inclusive digital interactives: Best practices, innovative experiments, and questions for research (pp. 219–242). Access Smithsonian, The Institute for Human Centered Design, & Museweb.

Porras, J., Khakurel, J., Ikonen, J., Happonen, A., Knutas, A., Herala, A., & Drögehorn, O. (2018, June). Hackathons in software engineering education: Lessons learned from a decade of events. In Proceedings of the 2nd International Workshop on Software Engineering Education for Millennials (pp. 40–47). https://doi.org/10.1145/3194779.3194783

Prensky, M. (2007). Digital game-based learning. Paragon House. https://doi.org/10.1145/950566.950596

Quan-Haase, A., & Young, A. L. (2010). Uses and gratifications of social media: A comparison of Facebook and instant messaging. Bulletin of Science, Technology & Society, 30(5), 350–361. https://doi.org/10.1177/0270467610380009

Rennie, D. L. (2000). Grounded theory methodology as methodological hermeneutics: Reconciling realism and relativism. Theory & Psychology, 10(4), 481–502. https://doi.org/10.1177/0959354300104003

Sackey, D. J. (2020). One-size-fits-none: A heuristic for proactive value sensitive environmental design. Technical Communication Quarterly, 29(1), 33–48. https://doi.org/10.1080/10572252.2019.1634767

Schwartz, S. (1992). Universals in the content and structure of values: Theoretical advances and empirical tests in 20 countries. Advances in Experimental Social Psychology, 25, 1–65. https://doi.org/10.1016/S0065-2601(08)60281-6

Schwartz, S. (1999). A theory of cultural values and some implications for work. Applied Psychology, 48(1), 23–47.

Schwartz, S., & Bilsky, W. (1987). Toward a universal psychological structure of human values. Journal of Personality and Social Psychology, 53(3), 550–562.

Schwartz, S. H., Cieciuch, J., Vecchione, M., Davidov, E., Fischer, R., Beierlein, C., Ramos, A., Verkasalo, M., Lönnqvist, J.-E., Demirutku, K., Dirilen-Gumus, O., & Konty, M. (2012). Refining the theory of basic individual values. Journal of Personality and Social Psychology, 103(4), 663–688. https://doi.org/10.1037/a0029393

Seaborn, K., & Fels, D. (2015). Gamification in theory and action: A survey. International Journal of Human-Computer Studies, 74, 14–31. https://doi.org/10.1016/j.ijhcs.2014.09.006

Shivers-McNair, A. (2017). Localizing communities, goals, communication, and inclusion: A collaborative approach. Technical Communication, 64(2), 97–112.

Turnham, C. (2019). “Santa Monica Mountains” by National Park Service. https://bit.ly/UniD_SantaMonicaMountains

UniDescription. (n.d.). Home. www.unidescription.org

Usunobun, I., Anti, E., Hu, F., Habila, L., Sayed, R., Zhang, Y., & Tuunanen, T. (2019). Cultural values’ influences on users’ preferences for gamification techniques. In ICIS 2019 Proceedings. https://aisel.aisnet.org/icis2019/design_science/design_science/1

Van Berkel, N., Goncalves, J., Hosio, S., & Kostakos, V. (2017). Gamification of mobile experience sampling improves data quality and quantity. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, 1(3), 1–21. https://doi.org/10.1145/3130972

Wilkinson, P. (2016). A brief history of serious games. In R. Dörner, S. Göbel, M. Kickmeier-Rust, M. Masuch, & K. Zweig (Eds.), Entertainment computing and serious games (Lecture Notes in Computer Science, Vol. 9970). Springer. https://doi.org/10.1007/978-3-319-46152-6_2

Yoon, G., Duff, B. R., & Bunker, M. P. (2021). Sensation seeking, media multitasking, and social Facebook use. Social Behavior & Personality: An International Journal, 49(1), 1–7. https://doi.org/10.2224/sbp.8918